This is part 8 of the VCP6-DCV Delta Study Guide. It covers storage enhancements in vSphere 6.0, including NFS 4.1 and Virtual Volumes. After this section you should be able to configure NFS 4.1 based datastores and Virtual Volumes with Storage Policies.

Storage Policies

Virtual machine storage policies help you define storage requirements for a virtual machine and control which type of storage is provided for it. They also control how the virtual machine is placed within the storage and which data services are offered to it.

When you define a storage policy, you specify storage requirements for applications that run on virtual machines. After you apply this storage policy to a virtual machine, the virtual machine is placed in a specific datastore that can satisfy the storage requirements. In software-defined storage environments, such as Virtual SAN and Virtual Volumes, the storage policy also determines how the virtual machine storage objects are provisioned and allocated within the storage resource to guarantee the required level of service.

Rules Based on Tags

Rules based on tags reference datastore tags that you associate with specific datastores. You can apply more than one tag to a datastore. All tags associated with datastores appear in the VM Storage Policies interface. You can use the tags when you define rules for the storage policies.
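
The matching idea behind a tag-based rule can be sketched in a few lines of Python. This is only a simplified model, not the vSphere API: the `Tag` and `Datastore` classes, the `compatible_datastores` helper, and the datastore names are hypothetical, and the rule is assumed to reference a single tag.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Tag:
    category: str
    name: str


@dataclass
class Datastore:
    name: str
    tags: set = field(default_factory=set)  # a datastore may carry several tags


def compatible_datastores(required_tag, datastores):
    """Return the names of datastores that carry the tag a policy rule references."""
    return [ds.name for ds in datastores if required_tag in ds.tags]


# Hypothetical inventory; only the first datastore carries the required tag.
ssd = Tag("Storage Category", "Local SSD Storage")
inventory = [
    Datastore("esx1-local-ssd", {ssd}),
    Datastore("nas-archive", {Tag("Storage Category", "Archive")}),
    Datastore("untagged-lun"),
]
print(compatible_datastores(ssd, inventory))
```

A virtual machine that uses such a policy would be considered compliant only while its objects live on one of the returned datastores.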

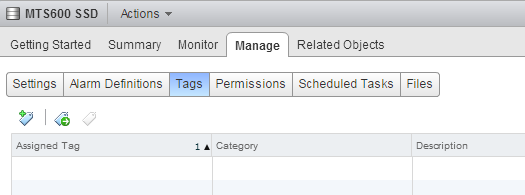

To assign tags to a datastore, open it in the datastore view and navigate to Manage > Tags. If you haven't created any tags yet, click the New Tag button.

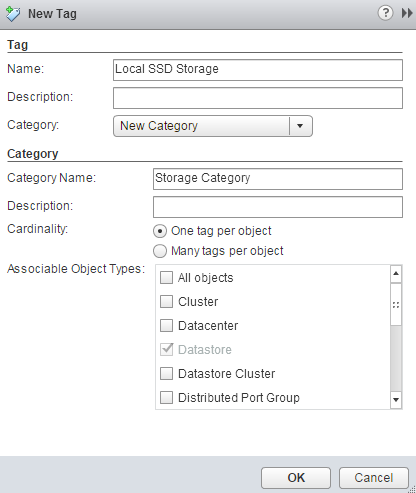

Type a name for the tag and select an existing category or create a new one. In the following example I create a new category called Storage Category and assign the datastore the tag named Local SSD Storage.

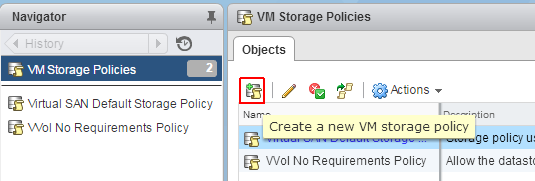

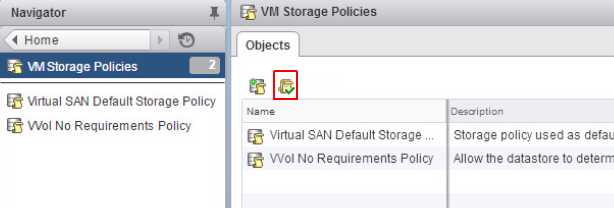

To create a tag-based storage policy, navigate to VM Storage Policies and click the Create a new VM storage policy button.

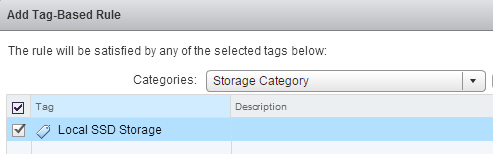

Enter a Name for the policy and click Next to assign a rule. In the Rule-Set configuration, click Add tag-based rule...

Select the tag you want to assign to the storage policy and finish the wizard.

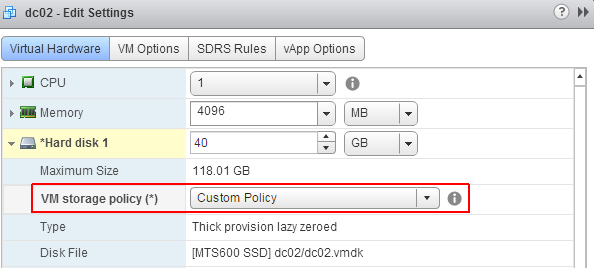

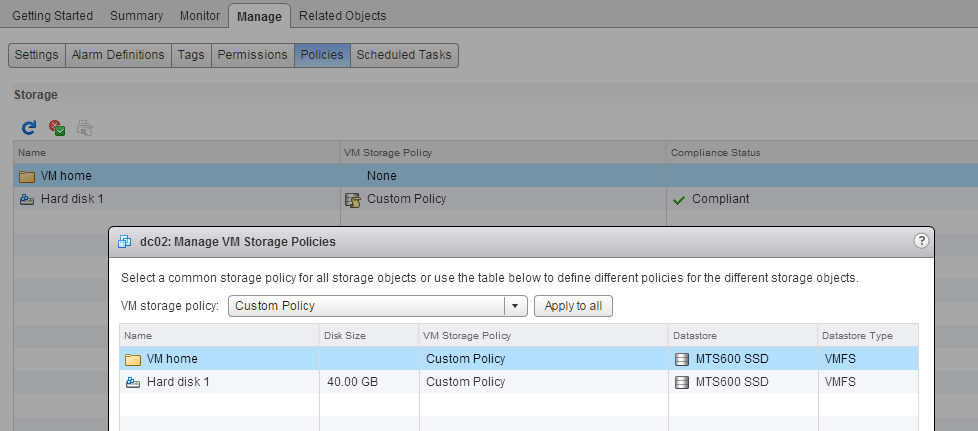

VM storage policies can be selected in the virtual machine configuration.

To verify storage policies, navigate to the Manage > Policies tab of the virtual machine. You can also verify that the virtual machine is compliant, which means that its objects are on an appropriate datastore.

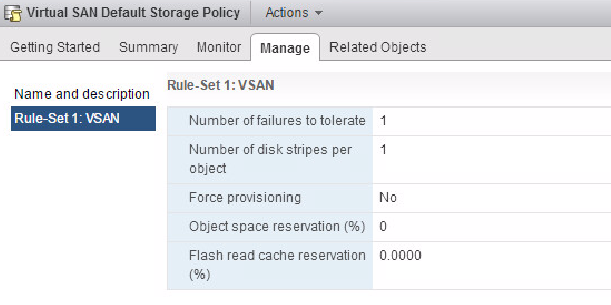

Rules Based on Storage-Specific Data Services

These rules are based on data services that storage entities such as Virtual SAN and Virtual Volumes advertise. Virtual SAN and Virtual Volumes use VASA providers to supply information about the underlying storage to the vCenter Server. Storage information and datastore characteristics appear in the VM Storage Policies interface of the vSphere Web Client as data services offered by the specific datastore type.

A single datastore can offer multiple services. The data services are grouped in a datastore profile that outlines the quality of service that the datastore can deliver. When you create rules for a VM storage policy, you reference data services that a specific datastore advertises. To the virtual machine that uses this policy, the datastore guarantees that it can satisfy the storage requirements of the virtual machine. The datastore also can provide the virtual machine with a specific set of characteristics for capacity, performance, availability, redundancy, and so on.
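
As a rough illustration of how rules reference advertised data services, the following Python sketch compares a rule set against a datastore profile. The capability names (`replication`, `deduplication`, `encryption`), the array names, and both helper functions are made up for the example. Real capabilities come from the vendor's VASA provider, and real matching can be richer than the plain equality assumed here.

```python
def unmet_rules(rule_set, advertised):
    """Return the rules that the advertised capabilities do not satisfy."""
    return {
        name: wanted
        for name, wanted in rule_set.items()
        if advertised.get(name) != wanted
    }


def is_compatible(rule_set, advertised):
    """A datastore is compatible when every rule in the rule set is met."""
    return not unmet_rules(rule_set, advertised)


# Hypothetical rule set and datastore profiles.
gold_rules = {"replication": True, "deduplication": True}
datastore_profiles = {
    "array-a": {"replication": True, "deduplication": True, "encryption": False},
    "array-b": {"replication": True, "deduplication": False},
}

for name, profile in datastore_profiles.items():
    print(name, is_compatible(gold_rules, profile), unmet_rules(gold_rules, profile))
```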

Storage-specific rules are assigned in the same way as tag-based rules, but they allow more detailed configuration of the underlying storage system. The following example is the default policy from Virtual SAN.

NFS 4.1

vSphere 6.0 introduces NFS 4.1 support. An NFS client built into ESXi hosts mounts NFS volumes located on a NAS server as datastores. Currently, vSphere supports both NFS 3.0 and NFS 4.1. When you use NFS 4.1, the following considerations apply:

- NFS 4.1 provides multipathing for servers that support session trunking. When trunking is available, you can use multiple IP addresses to access a single NFS volume. Client ID trunking is not supported.

- NFS 4.1 does not support hardware acceleration. Because of this limitation, you cannot create thick virtual disks on NFS 4.1 datastores.

- NFS 4.1 supports the Kerberos authentication protocol to secure communication with the NFS server. For more information, see Using Kerberos Credentials for NFS 4.1.

- NFS 4.1 uses share reservations as a locking mechanism.

- NFS 4.1 supports inbuilt file locking.

- NFS 4.1 allows nonroot users to access files when used with Kerberos.

- NFS 4.1 supports traditional non-Kerberos mounts. In this case, use security and root access guidelines recommended for NFS version 3.

The following limitations apply when you use NFS 4.1:

- NFS 4.1 does not support simultaneous AUTH_SYS and Kerberos mounts.

- NFS 4.1 with Kerberos does not support IPv6. NFS 4.1 with AUTH_SYS supports both IPv4 and IPv6.

- NFS 4.1 does not support Storage DRS.

- NFS 4.1 does not support Storage I/O Control.

- NFS 4.1 does not support Site Recovery Manager.

- NFS 4.1 does not support Virtual Volumes.
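
When planning a datastore, the limitations above can be turned into a quick pre-check. The Python sketch below only encodes the list from this section. The function name `nfs41_blockers` and its parameters are hypothetical, and it does not query a real environment.

```python
# Features that this section lists as unsupported on NFS 4.1 in vSphere 6.0.
NFS41_UNSUPPORTED = frozenset({
    "Storage DRS",
    "Storage I/O Control",
    "Site Recovery Manager",
    "Virtual Volumes",
})


def nfs41_blockers(required_features, security="AUTH_SYS", ip_version=4):
    """Return the reasons why NFS 4.1 does not fit the given requirements."""
    blockers = sorted(set(required_features) & NFS41_UNSUPPORTED)
    # Kerberos mounts are limited to IPv4; AUTH_SYS works with IPv4 and IPv6.
    if security == "KERBEROS" and ip_version == 6:
        blockers.append("IPv6 with Kerberos")
    return blockers


print(nfs41_blockers(["Storage DRS", "Storage I/O Control"]))
print(nfs41_blockers([], security="KERBEROS", ip_version=6))
```

An empty result means that none of the listed limitations applies to the planned datastore.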

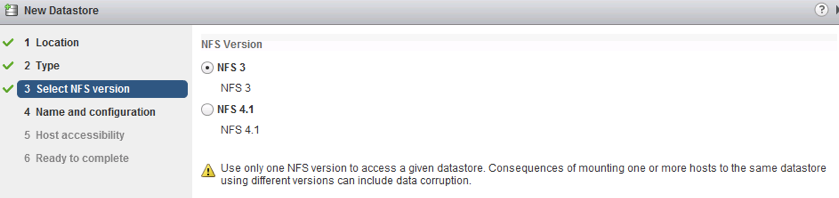

Mount NFS Datastores

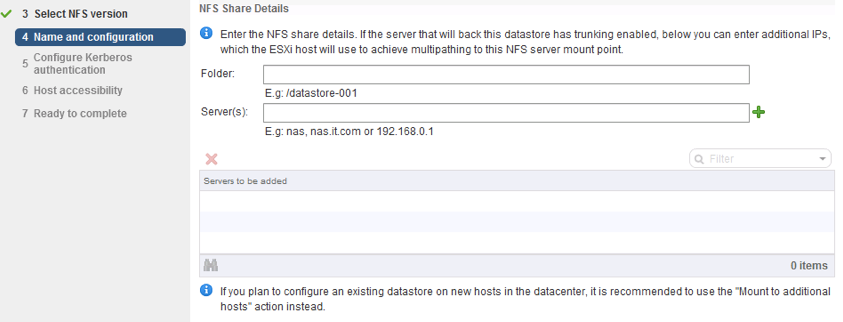

In the New Datastore wizard, you can select which NFS version is used for the mount. Be aware that an NFS volume cannot be mounted as NFS v3 on one ESXi host and as NFS v4.1 on another. NFS 3 uses proprietary client-side cooperative locking, while NFS 4.1 uses server-side locking. When creating an NFS datastore, use the same version on every host, or data corruption might occur.
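
If you keep an inventory of your mounts, a mixed-version situation is easy to detect before it causes damage. The following Python sketch assumes a hypothetical list of `(esxi_host, nas_server, share, nfs_version)` tuples that you have collected yourself. It does not talk to vCenter or ESXi.

```python
from collections import defaultdict


def mixed_version_shares(mounts):
    """Return the shares that are mounted with more than one NFS version."""
    versions = defaultdict(set)
    for _esxi_host, nas_server, share, nfs_version in mounts:
        versions[(nas_server, share)].add(nfs_version)
    return {key: sorted(found) for key, found in versions.items() if len(found) > 1}


# Hypothetical inventory: ds1 is mounted with two different versions.
mounts = [
    ("esx1", "nas01", "/export/ds1", "3"),
    ("esx2", "nas01", "/export/ds1", "4.1"),
    ("esx1", "nas01", "/export/ds2", "4.1"),
    ("esx2", "nas01", "/export/ds2", "4.1"),
]
print(mixed_version_shares(mounts))
```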

NFS Multipathing

NFS v4.1 introduces better performance and availability through multipathing. In the New Datastore wizard, you can enter multiple IP addresses that are available on your NAS to achieve multipathing to the NFS server.
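
The same mount can be scripted from the ESXi command line. The Python sketch below only builds the argument list and does not execute anything. It assumes the `esxcli storage nfs41 add` command with its `--hosts`, `--share`, and `--volume-name` options, so check `esxcli storage nfs41 add --help` on your host before relying on it. The helper name, IP addresses, share path, and datastore name are placeholders.

```python
def nfs41_add_command(server_addresses, share, volume_name):
    """Build an esxcli argument list that mounts an NFS 4.1 datastore.

    Several server addresses are passed as one comma-separated value,
    which is what enables multipathing to the NFS server.
    """
    if not server_addresses:
        raise ValueError("at least one NFS server address is required")
    return [
        "esxcli", "storage", "nfs41", "add",
        "--hosts=" + ",".join(server_addresses),
        "--share=" + share,
        "--volume-name=" + volume_name,
    ]


# Placeholder values for illustration only.
command = nfs41_add_command(["192.168.10.11", "192.168.10.12"], "/export/ds1", "nfs41-ds1")
print(" ".join(command))
```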

Kerberos Authentication

NFS 4.1 supports Kerberos (non-root user) authentication. Kerberos authentication requires an NFS user that is defined on each ESXi host. This user is used for remote file access. It is recommended to use the same user on all hosts. If two hosts are using different users, a vMotion task will fail.

To mount datastores with Kerberos authentication:

- Configure NTP on all ESXi hosts (Host > Manage > Settings > Time Configuration)

- Join every ESXi host to the domain (Host > Manage > Authentication Services)

- Set the NFS Kerberos credentials (Host > Manage > Authentication Services)
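
Because different NFS users on two hosts break vMotion, it can be worth checking the configured users before migrating virtual machines. The Python sketch below works on a hypothetical mapping of host names to NFS Kerberos users that you have gathered yourself. The function name and the user names are invented for the example.

```python
from collections import Counter


def hosts_with_divergent_user(nfs_user_by_host):
    """Return the hosts whose NFS Kerberos user differs from the most common one."""
    if not nfs_user_by_host:
        return []
    common_user, _count = Counter(nfs_user_by_host.values()).most_common(1)[0]
    return sorted(host for host, user in nfs_user_by_host.items() if user != common_user)


# Hypothetical cluster: esx3 was configured with a different user.
cluster_users = {"esx1": "svc-nfs", "esx2": "svc-nfs", "esx3": "nfs-test"}
print(hosts_with_divergent_user(cluster_users))
```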

Virtual Volumes

vSphere 6.0 introduces Virtual Volumes. The Virtual Volumes functionality changes the storage management paradigm from managing space inside datastores to managing abstract storage objects handled by storage arrays. With Virtual Volumes, an individual virtual machine, not the datastore, becomes a unit of storage management, while storage hardware gains complete control over virtual disk content, layout, and management.

The Virtual Volumes functionality helps to improve granularity and allows you to differentiate virtual machine services on a per application level by offering a new approach to storage management. Rather than arranging storage around features of a storage system, Virtual Volumes arranges storage around the needs of individual virtual machines, making storage virtual-machine centric.

Virtual Volumes maps virtual disks and their derivatives, clones, snapshots, and replicas, directly to objects, called virtual volumes, on a storage system. This mapping allows vSphere to offload intensive storage operations such as snapshot, cloning, and replication to the storage system.

By creating a volume for each virtual disk, you can set policies at the optimum level. You can decide in advance what the storage requirements of an application are and communicate these requirements to the storage system, so that it creates an appropriate virtual disk based on them. For example, if your virtual machine requires an active-active storage array, you no longer have to select a datastore that supports the active-active model. Instead, you create an individual virtual volume that is automatically placed on the active-active array.

Virtual Volumes Overview

- Virtualizes SAN and NAS devices

- Virtual disks are natively represented on arrays

- Enables finer control with VM level storage operations using array-based data services

- Storage Policy-Based Management enables automated consumption at scale

- Supports existing storage I/O protocols (FC, iSCSI, NFS)

- Industry-wide initiative supported by major storage vendors

- Included with vSphere

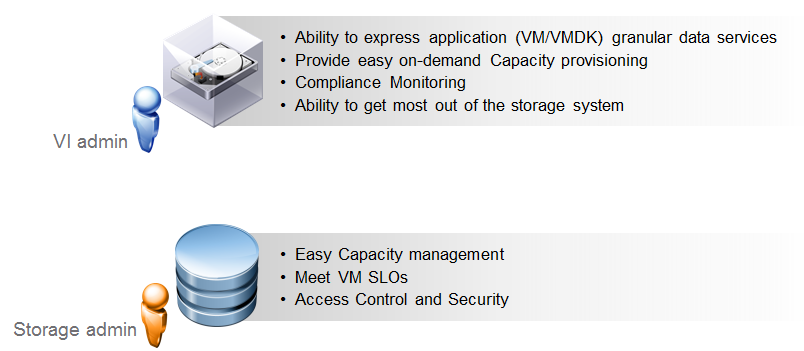

Why Virtual Volumes?

For the vSphere administrator, Virtual Volumes enables on-demand access to storage and storage services needed for virtual machines, and for the storage admin it provides a more efficient way to provision and manage storage for vSphere environments.

High Level Storage Architecture

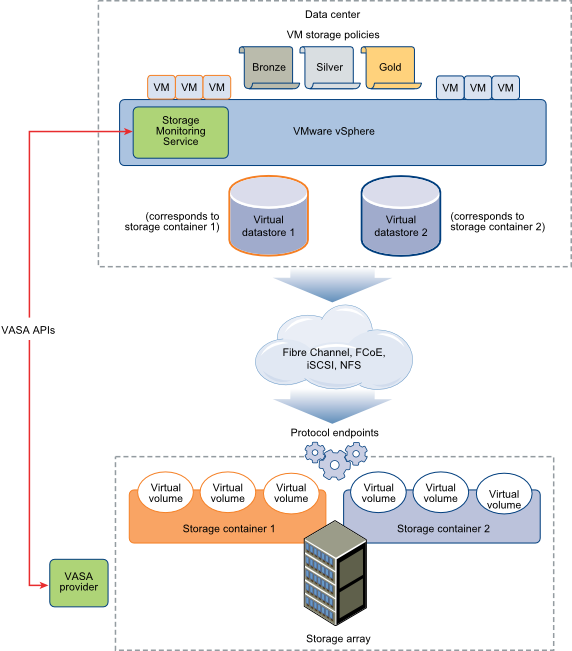

Virtual Volumes do not require a file system. The array is managed by the ESXi host through the vSphere APIs for Storage Awareness (VASA). The array is logically partitioned into containers, called Storage Containers. VM virtual disks are stored inside these Storage Containers.

I/O from ESXi hosts to the array is addressed through an access point called a Protocol Endpoint (PE). Data services are offloaded to the array and can be managed through the Storage Policy-Based Management framework.

Protocol Endpoints are the channel through which data is sent between the virtual machines and the array. They can be thought of as the "data plane" component of Virtual Volumes. PEs are configured as a part of the physical storage fabric and are accessed by standard storage protocols, such as iSCSI, NFS, or Fibre Channel. Because the data channel is separate, Virtual Volumes performance is not affected by policy management activities.

Storage containers are a logical construct for grouping Virtual Volumes. They are configured in the storage array by the storage administrator. Containers provide the ability to isolate or partition storage according to SLA requirements. You can also simply create one storage container for the entire array.

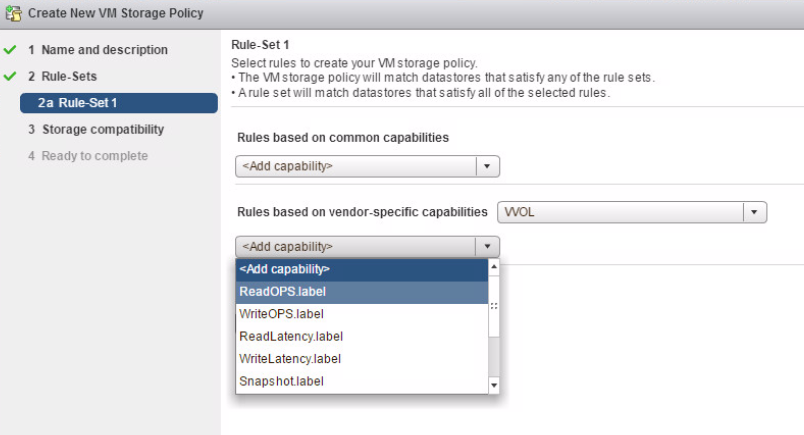

Storage Policy Based Management (SPBM) uses the VASA APIs to query the storage array about data services and provides these services as capabilities. Capabilities can then be grouped into rules and rule sets, which are then assigned to virtual machines. When configuring the array, the storage admin can choose which capabilities to expose or not expose to vSphere.
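
The effect of exposing or hiding capabilities can be pictured with a small Python model. Everything in it is hypothetical: the capability names, the `hidden` set, and the two helpers. It only shows that a policy rule can reference nothing but what the storage admin has exposed to vSphere.

```python
def exposed_capabilities(array_capabilities, hidden):
    """Return the capabilities that the storage admin makes visible to vSphere."""
    return {name: value for name, value in array_capabilities.items() if name not in hidden}


def unavailable_rules(rule_set, exposed):
    """Return the rule names that reference capabilities vSphere cannot see."""
    return sorted(name for name in rule_set if name not in exposed)


# Hypothetical array that offers three data services, one of them hidden.
array_capabilities = {"snapshots": True, "replication": True, "deduplication": True}
exposed = exposed_capabilities(array_capabilities, hidden={"replication"})

print(sorted(exposed))
print(unavailable_rules({"snapshots": True, "replication": True}, exposed))
```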

Work with Virtual Volumes

To work with Virtual Volumes, you must make sure that your storage and vSphere environment are set up correctly. The storage system or storage array that you use must be able to support Virtual Volumes and integrate with vSphere through vSphere APIs for Storage Awareness (VASA). A Virtual Volumes storage provider must be deployed and protocol endpoints, storage containers, and storage profiles must be configured on the storage side. Use Network Time Protocol (NTP) to synchronize all components in the storage array with vCenter Server and all ESXi hosts.
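
The prerequisites from the paragraph above can be kept as a simple checklist. The Python sketch below is only a bookkeeping aid with hypothetical names. It does not inspect the array or vCenter Server, and you mark the items as completed yourself.

```python
# Prerequisites as described in this section, in the order they are mentioned.
VVOL_PREREQUISITES = (
    "array supports Virtual Volumes and VASA",
    "storage provider deployed",
    "protocol endpoints configured",
    "storage containers configured",
    "storage profiles configured",
    "NTP synchronized on array, vCenter Server, and ESXi hosts",
)


def missing_prerequisites(completed):
    """Return the prerequisites that are not yet marked as completed."""
    done = set(completed)
    return [item for item in VVOL_PREREQUISITES if item not in done]


# Example: everything except time synchronization is in place.
print(missing_prerequisites(VVOL_PREREQUISITES[:5]))
```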

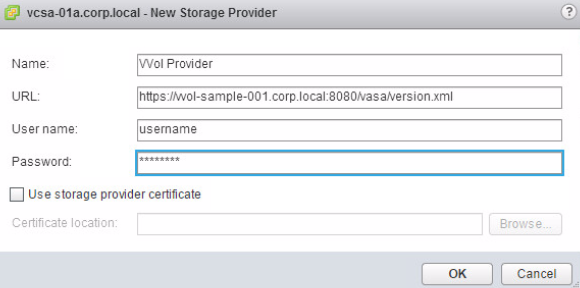

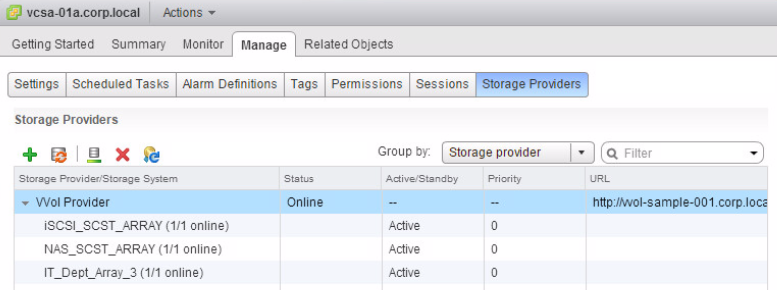

- Add a storage provider. Navigate to vCenter > Manage > Storage Providers and click the green + icon.

- Enter the storage provider's URL and login credentials.

The storage provider is registered and appears in the list of storage providers.

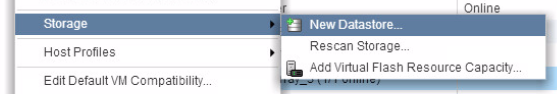

- To create a storage container, open the New Datastore... wizard.

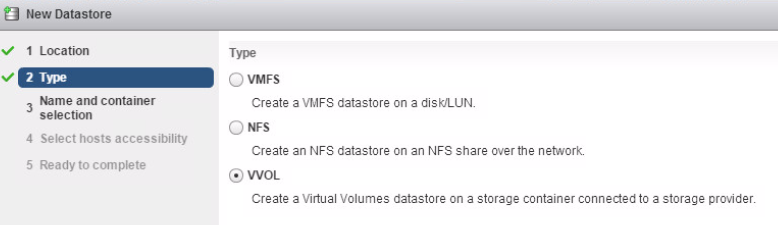

- Select VVOL as the datastore type.

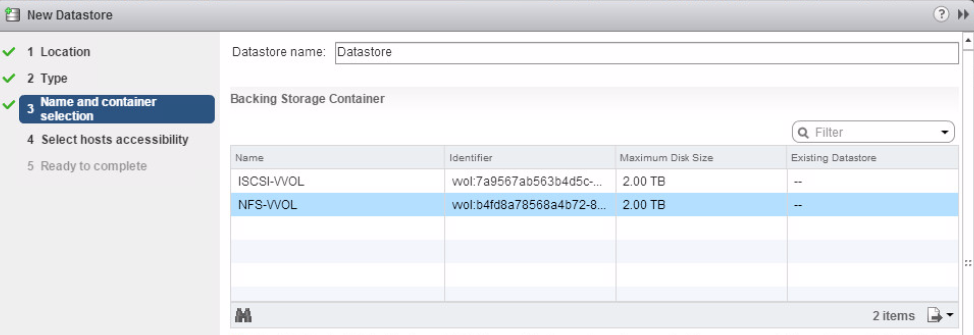

- Select the storage container that you want to add.

- Verify the storage container in the cluster's Related Objects tab.

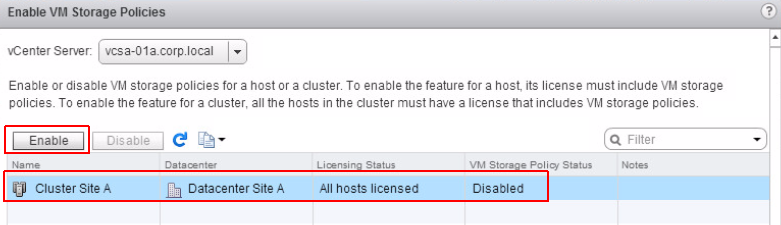

- Navigate to VM Storage Policies and enable VM Storage Policies for the clusters.

- Now you can create VM storage policies with vendor-specific Virtual Volumes capabilities and assign them to your virtual machines.
