Intel NUCs with ESXi are a proven standard for virtualization home labs. I'm currently running a homelab consisting of 3 Intel NUCs with FreeNAS-based all-flash storage. If you are generally interested in running ESXi on Intel NUCs, read this post first. One major drawback is that they only have a single Gigabit network adapter. This might be sufficient for a standalone ESXi host with a few VMs, but when you want to use shared storage or VMware NSX, you will want additional NICs.

A few months ago, this problem was solved by an unofficial driver made available by VMware engineer William Lam.

[UPDATE 2017-02: I've updated this post because we have another great driver by Jose Gomes and some changes in ESXi 6.5]

[UPDATE 2020-06: Update to add USB NIC Fling]

Prerequisites

These drivers are intended to be used with systems like the Intel NUC that do not have PCIe slots for additional network adapters. They are not officially supported by VMware. Do not install them in production.

The drivers are made for USB NICs with the AX88179 chipset, which are available for about $25. The following adapters have been verified to work:

- Anker Uspeed USB 3.0 to 10/100/1000 Gigabit Ethernet LAN Network Adapter

- StarTech USB 3.0 to Gigabit Ethernet NIC Adapter

- j5create USB 3.0 to Gigabit Ethernet NIC Adapter

- Vantec CB-U300GNA USB 3.0 Ethernet Adapter

Make sure that the system supports USB 3.0 and that the network adapters are mapped to the USB 3.0 hub. Legacy BIOS settings might prevent ESXi from mapping devices correctly, as explained here.

Verify the USB configuration with lsusb -tv:

    # lsusb -tv
    Bus# 2
    `-Dev# 1 Vendor 0x1d6b Product 0x0003 Linux Foundation 3.0 root hub
      `-Dev# 2 Vendor 0x0b95 Product 0x1790 ASIX Electronics Corp. AX88179 Gigabit Ethernet
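If you want to script this check, the following is a minimal Python sketch. It assumes the `lsusb -tv` output was saved as text and follows the tree layout from this post, where a root hub line has no indentation and attached devices are indented below it. The function name `devices_under_usb3_hub` is made up for this example, and the exact spacing of the output may differ between ESXi builds.

```python
import re

# Sample text based on the lsusb -tv output shown in this post.
SAMPLE = """\
Bus# 2
`-Dev# 1 Vendor 0x1d6b Product 0x0003 Linux Foundation 3.0 root hub
  `-Dev# 2 Vendor 0x0b95 Product 0x1790 ASIX Electronics Corp. AX88179 Gigabit Ethernet
"""

# One tree entry: optional indentation, device number, vendor ID, product ID, description.
DEV_LINE = re.compile(
    r"^(\s*)`-Dev#\s+\d+\s+Vendor\s+(0x[0-9a-f]{4})\s+Product\s+(0x[0-9a-f]{4})\s*(.*)$"
)

def devices_under_usb3_hub(text):
    """Return (vendor, product, description) for devices below a 3.0 root hub."""
    found = []
    in_usb3_bus = False
    for line in text.splitlines():
        if line.startswith("Bus#"):
            in_usb3_bus = False  # a new bus starts, wait for its root hub line
            continue
        match = DEV_LINE.match(line)
        if not match:
            continue
        indent, vendor, product, description = match.groups()
        if indent == "":
            # Unindented entry is the root hub; 0x1d6b:0x0003 is the 3.0 root hub.
            in_usb3_bus = (vendor, product) == ("0x1d6b", "0x0003")
        elif in_usb3_bus:
            found.append((vendor, product, description.strip()))
    return found

if __name__ == "__main__":
    for vendor, product, description in devices_under_usb3_hub(SAMPLE):
        print(vendor, product, description)
```

An empty result for an adapter that is plugged in would suggest that it was mapped to the USB 2.0 hub instead.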

Choose a driver

As of today, there are drivers by William Lam and Jose Gomes, plus the USB NIC Fling. The William Lam and Jose Gomes drivers both work without issues, so it's up to you which one you choose:

- USB NIC Fling for ESXi 7.0 / 6.7 / 6.5 (Songtao Zheng & William Lam)

- ASIX Driver for ESXi 6.5 (William Lam)

- ASIX Driver for ESXi 5.5 & 6.0 (William Lam)

- USB-C / Thunderbolt 3 Driver for ESXi 5.5, 6.0 & 6.5 (William Lam)

- ASIX and Realtek Driver for ESXi 5.1, 5.5 & 6.0 (Jose Gomes)

- ASIX and Realtek Driver for ESXi 6.5 (Jose Gomes)

Installation

This is an example based on William Lam's ASIX driver and vSphere 6.0. If you are using another driver, please refer to the guide linked in the "Choose a driver" section.

- Download Driver VIB from here.

- Upload the drivers to a Datastore

- Install the driver

# esxcli software vib install -v /vmfs/volumes/datastore/vghetto-ax88179-esxi60u2.vib -f

- Verify that the drivers have been loaded successfully:

    # esxcli network nic list
    Name    PCI Device    Driver        Admin Status  Link Status  Speed  Duplex  MAC Address         MTU  Description
    ------  ------------  ------------  ------------  -----------  -----  ------  -----------------  ----  -------------------------------------------------
    vmnic0  0000:00:19.0  e1000e        Up            Up            1000  Full    b8:ae:ed:75:08:68  1500  Intel Corporation Ethernet Connection (3) I218-LM
    vusb0   Pseudo        ax88179_178a  Up            Up            1000  Full    00:23:54:8c:43:45  1600  Unknown Unknown

    # esxcfg-nics -l
    Name    PCI           Driver      Link  Speed     Duplex  MAC Address        MTU   Description
    vmnic0  0000:00:19.0  e1000e      Up    1000Mbps  Full    b8:ae:ed:75:08:68  1500  Intel Corporation Ethernet Connection (3) I218-LM
    vusb0   Pseudo        ax88179_178aUp    1000Mbps  Full    00:23:54:8c:43:45  1600  Unknown Unknown

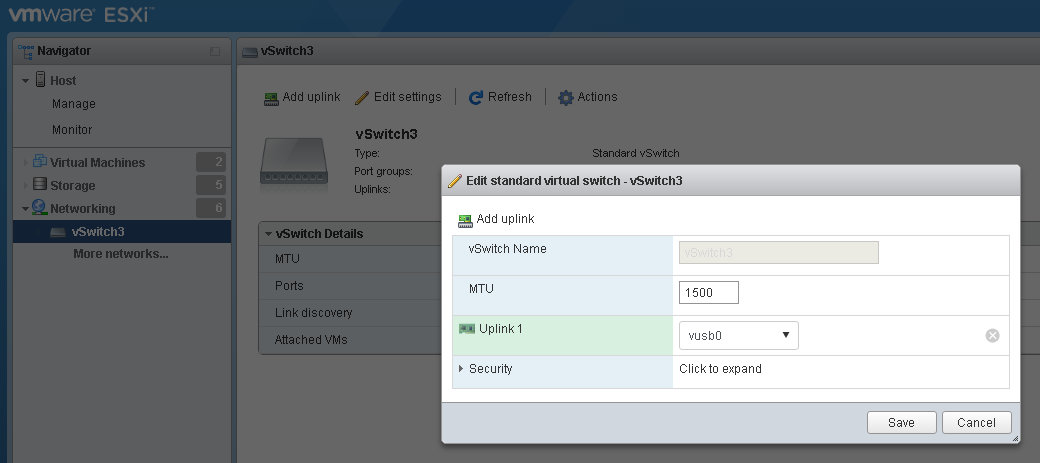

- Add the USB uplink to a Standard Switch or dvSwitch. You can do that with the vSphere Web Client or the VMware Host Client.

- Command line:

# esxcli network vswitch standard uplink add -u vusb0 -v vSwitch0

Please note that this does not work with the vSphere Client. USB network adapters are not visible when adding adapters to vSwitches with the C# Client.
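Before adding the uplink from a script, it can help to confirm that the USB adapter from the verification step is present and has link. The following Python sketch parses saved `esxcli network nic list` output. It assumes columns are separated by at least two spaces and that no column is empty, which holds for the output in this post but is not guaranteed in general. The names `parse_nic_list` and `usb_nics_with_link` are made up for this example.

```python
import re

# Sample text based on the esxcli network nic list output shown in this post.
SAMPLE = """\
Name    PCI Device    Driver        Admin Status  Link Status  Speed  Duplex  MAC Address         MTU  Description
------  ------------  ------------  ------------  -----------  -----  ------  -----------------  ----  -------------------------------------------------
vmnic0  0000:00:19.0  e1000e        Up            Up            1000  Full    b8:ae:ed:75:08:68  1500  Intel Corporation Ethernet Connection (3) I218-LM
vusb0   Pseudo        ax88179_178a  Up            Up            1000  Full    00:23:54:8c:43:45  1600  Unknown Unknown
"""

def parse_nic_list(text):
    """Turn the column-formatted NIC list into a list of dicts keyed by header name."""
    lines = [line for line in text.splitlines() if line.strip()]
    header = re.split(r"\s{2,}", lines[0].strip())
    nics = []
    for line in lines[2:]:  # lines[1] is the dashed separator row
        # maxsplit keeps the free-text description in one piece
        values = re.split(r"\s{2,}", line.strip(), maxsplit=len(header) - 1)
        nics.append(dict(zip(header, values)))
    return nics

def usb_nics_with_link(nics):
    """Names of vusbX adapters whose link status is Up."""
    return [
        nic["Name"]
        for nic in nics
        if nic["Name"].startswith("vusb") and nic["Link Status"] == "Up"
    ]

if __name__ == "__main__":
    print(usb_nics_with_link(parse_nic_list(SAMPLE)))
```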

ESXi Installation with USB NIC

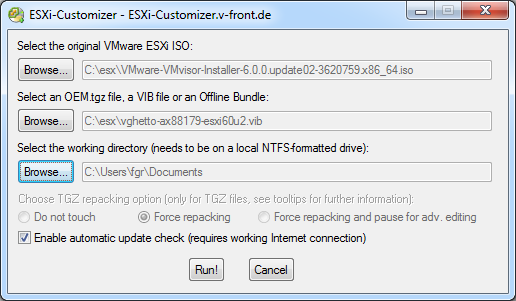

It is possible to create a customized ESXi Image including the AX88179 driver. This might be useful if you want to install ESXi on a system without any compatible network adapter.

Creating a custom ESXi Image that includes the driver is very easy with ESXi-Customizer by Andreas Peetz.

Performance

I have tested the Anker and Startech adapters on two different NUCs, a NUC5i5MYHE and the new NUC6i7KYK. The receiving end was my shared storage, which has an Intel quad-port Gigabit adapter connected to a Cisco C2960G switch. I've compared the performance with the NUC's onboard NIC.

Latency

I've measured the latency in both directions. The performance of both adapters is about the same, and both are slightly slower than the onboard NIC, probably because of the USB overhead. The results are not bad at all:

Onboard NIC min/avg/max: 0.168/0.222/0.289 ms

AX88179 NIC min/avg/max: 0.193/0.310/0.483 ms

Bandwidth

To measure the bandwidth I've used iPerf, which is available on ESXi by default.

RX Performance Onboard NIC: 938 Mbits/sec

RX Performance Startech AX88179: 829 Mbits/sec

RX Performance Anker AX88179: 839 Mbits/sec

TX Performance Onboard NIC: 927 Mbits/sec

TX Performance Startech AX88179: 511 Mbits/sec

TX Performance Anker AX88179: 527 Mbits/sec

You can use multiple adapters to stack the performance. The NUC has 4 USB 3.0 ports, of which the first is used for the flash drive that ESXi boots from. I've tested the performance of 3 Startech adapters:

RX Performance 3x Startech AX88179: 2565 Mbits/sec

TX Performance 3x Startech AX88179: 1522 Mbits/sec

When 3 network adapters are not enough, where is the limit? USB 3.0 supports up to 5000 Mbits/s. I've connected all 3 network adapters to the same port with a USB 3.0 hub. Here are the results:

RX Performance 3x Startech AX88179: 1951 Mbits/sec

TX Performance 3x Startech AX88179: 1555 Mbits/sec

As you can see, the overall performance, especially the TX performance (that is, sending data out of the NUC), cannot saturate the full bandwidth, but for a homelab the performance is sufficient and can be extended with multiple adapters. USB adapters can also be used with jumbo frames, so creating an NSX lab is possible.
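To put the multi-adapter numbers in perspective, here is a small Python sketch that compares the aggregate results with three times the single-adapter result. It only uses the figures quoted in this post. The names `SINGLE`, `THREE_PORTS`, `THREE_HUB` and `scaling_efficiency` are made up for illustration, and taking the single Startech run as the baseline is an assumption.

```python
# Figures from the measurements in this post, in Mbits/sec.
SINGLE = {"rx": 829, "tx": 511}          # one Startech AX88179
THREE_PORTS = {"rx": 2565, "tx": 1522}   # three adapters, one per USB 3.0 port
THREE_HUB = {"rx": 1951, "tx": 1555}     # three adapters behind one USB 3.0 hub

def scaling_efficiency(aggregate, single, count=3):
    """Aggregate throughput as a fraction of count x the single-adapter throughput."""
    return {direction: aggregate[direction] / (count * single[direction])
            for direction in single}

if __name__ == "__main__":
    for label, result in (("separate ports", THREE_PORTS), ("shared hub", THREE_HUB)):
        eff = scaling_efficiency(result, SINGLE)
        print(f"{label}: RX {eff['rx']:.0%}, TX {eff['tx']:.0%}")
    # separate ports: RX 103%, TX 99%
    # shared hub: RX 78%, TX 101%
```

Values slightly above 100% only reflect variance between test runs. Read this way, the adapters scale almost linearly on separate ports, and the shared hub mainly costs RX throughput, even though the 1951 Mbits/sec total is well below the 5000 Mbits/s that USB 3.0 supports.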

Very nice, except that I got the driver running before William, and at full speed... But, I am not a VMware Engineer!

Anyway, if you want a full driver for ASIX and Realtek based USB adapters, they are here http://www.devtty.uk/homelab/Want-a-USB-Ethernet-driver-for-ESXi-You-can-have-two/

Otherwise, just keep the slower speed drivers....

Yep, I am bitter...

Thanks for your comment. Can you clarify that "just keep the slow speed drivers"?

I've replaced William's driver with your ASIX driver and the performance doesn't change at all:

RX 775 Mbits/sec

TX 333 Mbits/sec

Any ideas?

Hi Florian,

First of all, let me apologise for my comment — I had one too many glasses of wine last night…

As for the throughput not changing for you, I am not sure. The one thing we noticed whilst developing the driver was that specific BIOS settings impacted the USB 3.0 throughput. On some NUCs (and other servers) there are 4 options for the USB 3.0 ports: “Smart”, “Smart Auto”, “Enabled” and “Disabled”. The setting must be explicitly set to "Enabled" for the port to operate properly.

Other than the above, the only other time I noticed such bad speeds, it was related to an issue with changes to MAC addresses not being updated. This I described in my post about the driver…

On my own tests (and those of other people who are using the driver) the figures achieved are, consistently:

TX ~940 Mbits/sec

RX ~894 Mbits/sec

The other driver and adapters I use (Realtek) have just a tad faster RX figures; both TX and RX are the same at ~940 Mbits/sec

The USB 3.0 Smart Auto problem was with the 5th gen NUC. Devices are then connected to the USB2 hub and are slow. See http://www.virten.net/2015/10/usb-3-0-devices-detected-as-usb-2-in-esxi-6-0-and-5-5/

The Skull Canyon NUC comes with a "USB Legacy" setting which is disabled by default, so this should not be the problem. All devices are connected to the USB3 hub.

I've also checked the ARP problem you are describing, but I can't reproduce it. These vmk ports should get a random 00:50:56 MAC address, except the first one created during installation, and so they do.

I'm trying to figure out what's wrong...

After a "don't-know-where-to-troubleshoot-reboot", performance is better:

RX 785 Mbits/sec

TX 892 Mbits/sec

Yes, the issue I mentioned is related to the 5th Gen NUC — I just didn't know what settings are available on the Skull Canyon (don't have one).

Anyway, I can see there is a bit of an improvement after the reboot, but still much lower than I have seen. The main difference between William's and my driver is that I have enabled TSO and SG, which improves TX tremendously. Your figures are still lower than I would have expected…

The only other thing I can say, is to check if it is something related to VLANs. Just saying that because both I and Glen Kemp were seeing odd results when sending traffic from one VLAN to another.

Also, can you check if SG and TSO are indeed enabled? The command would be "ethtool -k vusb0" and the result should be something like:

Offload parameters for vusb0:

Cannot get device udp large send offload settings: Function not implemented

Cannot get device generic segmentation offload settings: Function not implemented

rx-checksumming: on

tx-checksumming: on

scatter-gather: on

tcp segmentation offload: on

udp fragmentation offload: off

generic segmentation offload: off
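For anyone who wants to run this check across several hosts, here is a minimal Python sketch that parses saved `ethtool -k vusb0` output and reports whether scatter-gather and TSO are on. It assumes the "name: on/off" line format quoted above; the names `parse_offloads` and `sg_and_tso_enabled` are made up for this example.

```python
# Sample text based on the ethtool -k vusb0 output quoted in this comment.
SAMPLE = """\
Offload parameters for vusb0:
Cannot get device udp large send offload settings: Function not implemented
Cannot get device generic segmentation offload settings: Function not implemented
rx-checksumming: on
tx-checksumming: on
scatter-gather: on
tcp segmentation offload: on
udp fragmentation offload: off
generic segmentation offload: off
"""

def parse_offloads(text):
    """Map each offload name to True (on) or False (off); other lines are skipped."""
    settings = {}
    for line in text.splitlines():
        name, sep, value = line.rpartition(":")
        value = value.strip()
        if sep and value in ("on", "off"):
            settings[name.strip()] = (value == "on")
    return settings

def sg_and_tso_enabled(settings):
    """True only if both scatter-gather and TCP segmentation offload are on."""
    return (settings.get("scatter-gather", False)
            and settings.get("tcp segmentation offload", False))

if __name__ == "__main__":
    print(sg_and_tso_enabled(parse_offloads(SAMPLE)))
```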

Could you expand on whether you're using ESXi as a type-1 or type-2 hypervisor? I'm planning to use ESXi on my Intel NUC as a type-1 hypervisor, so I was wondering if the instructions on how to install the driver change slightly.

It would be nice if you could write something up on doing this for a hypervisor type-1 (ESXi installed bare-metal).

Thank you, great write up.

It's for the bare-metal hypervisor (aka type-1), but it shouldn't make a difference. You can also use the driver when you have a virtual ESXi and use USB passthrough. (Not sure why anyone would want to use a physical USB NIC in a virtual ESXi, but it should work.)

I get this error: urlopen error [Errno 2] No such file or directory: '/var/log/vmware/vibvghetto-ax88179-esxi60u2.vib/index.xml'>"))

Great post!

If you use ESXi 6.0 or ESXi 6.5 or newer, you must use ESXi-Customizer-PS. The Windows app ESXi-Customizer is deprecated.

ESXi-Customizer-PS is a tool from the same author (Andreas Peetz) that runs under PowerCLI and can also inject a driver into an ESXi installation ISO.

Extracted from: http://www.sysadmit.com/2017/01/vmware-esxi-instalar-driver.html

Does this still work with 6.5? I tried to install the 6.0 drivers; the installation succeeded, but the adapter was not recognized when I ran esxcli network nic list.

You have to disable vmkusb and use a new driver as explained here.

Thanks. I currently have an external HDD as a USB datastore. Will that stop working if I do this? That would not be optimal since some of my VMs are on the USB datastore.

No, you can have both. Vmkusb is just a new type of driver introduced in vSphere 6.5. When you disable it, USB behaves just like you know it from ESXi 6.0.

Thanks again. It is now recognized by ESXi, although it says it is in half duplex. Maybe that is because nothing is connected to it? I bought the Startech one.

I want to use this with pfSense. Should I create a new vSwitch or should I add this uplink to the existing vSwitch?

Hi,

Thank you so much for the guide and the topic.

I have tested the drivers with a UGREEN USB 3.0 network adapter and everything works correctly.

I also give my thanks to the getto-guru.

Thank you so much again.

Adding my thanks using Startech on 6.0u2

Am using USB-C to NIC on Skull Canyon running fully patched 6.5, have tried both driver options, both run fine for a while, days in some cases, but always end up not passing traffic eventually, anybody else seeing this? Or even better have any suggestions to fix? Cheers!

Hi Scot,

I had the same problem.

Neither of the two USB NICs that I have works.

But the problem gets worse when my ESXi puts the USB NIC before vmnic0: I lose the connection and have to go to the NUC and physically disconnect the USB NIC.

So... I wasted my money...

I have the same problem, NICs not passing any traffic after a couple of hours.

I can confirm that on my 5th gen Nuc using the Startech single adapter, I get the same result, after a few hours or day or so, the nic stops passing traffic. I have a 6th gen with the same nic and it never drops traffic, uptime is over 10 days. I finally just removed the usb nic from my 5th gen. I'll pick up another 6th gen and see if that cures my issue.

I have a 6th gen NUC (NUC6I5SYK) with this StarTech USB NIC (AX88179 chipset) and it suddenly drops traffic usually on medium to heavy traffic load. I installed different firewall VMs (OPNsense, Sophos UTM, Untangle, etc.) on my ESXi 6.0 with this 2 NIC setup but with those traffic drops it is not usable. Would be nice if someone could update that driver to a version which is 100% working!

I'm seeing this weird issue where whenever I reboot the host the USB NIC detaches itself from the vSwitch. In the host UI I can reselect it as the uplink, but in vCenter I have to remove it first and then re-add it. The only thing I can think of is that my vSwitch is named something non-standard and was defined separately on each host (rather than creating it in vCenter and accepting "vSwitch(X)" as the switch name).

Apart from that, the driver is working beautifully.

Hi, I'm seeing this error when switching from standard switch to DvSwitch.

Addition or Reconfiguration of network adapters attached to non-ephemeral distributed virtual port groups (port group name) is not supported. Any help here please.

Mario.