vSAN 6.6 RVC Guide

The "vSAN 6.6 RVC Guide" series explains how to manage your VMware Virtual SAN environment with the Ruby vSphere Console. RVC is an interactive command line tool to control and automate your platform. If you are new to RVC, make sure to read the Getting Started with Ruby vSphere Console Guide. All commands are from the latest vSAN 6.6 version.

The sixth part is about troubleshooting vSAN deployments.

- vsan.obj_status_report

- vsan.check_state

- vsan.fix_renamed_vms

- vsan.reapply_vsan_vmknic_config

- vsan.vm_perf_stats

- vsan.clear_disks_cache

- vsan.lldpnetmap

- vsan.recover_spbm

- vsan.support_information

- vsan.purge_inaccessible_vswp_objects

- vsan.scrubber_info

vsan.obj_status_report [-t|-f|-u|-i] ~cluster|~host

Provides information about objects and their health status. With this command, you can verify that all object components are healthy, which means that the witness and all mirrors are available and in sync. It also identifies possibly orphaned objects.

- -t, --print-table: Print a table of objects and their status, default all objects

- -f, --filter-table: Filter the obj table based on status displayed in histogram, e.g. 2/3

- -u, --print-uuids: In the table, print object UUIDs instead of vmdk and vm paths

- -i, --ignore-node-uuid: Estimate the status of objects if all comps on a given host were healthy.

Example 1 – Simple component status histogram:

We can see 45 objects with 3/3 healthy components and 23 objects with 7/7 healthy components. With default policies, objects with 3/3 components are disks (2 mirrors + witness) and objects with 7/7 components are namespace directories. This example also shows one orphaned object.

> vsan.obj_status_report ~cluster
Querying all VMs on vSAN ...
Querying all objects in the system from vesx1.virten.lab ...
Querying all disks in the system from vesx1.virten.lab ...
Querying all components in the system from vesx1.virten.lab ...
Querying all object versions in the system ...
Got all the info, computing table ...
Histogram of component health for non-orphaned objects
+-------------------------------------+------------------------------+
| Num Healthy Comps / Total Num Comps | Num objects with such status |
+-------------------------------------+------------------------------+
| 3/3                                 | 45                           |
| 7/7                                 | 23                           |
+-------------------------------------+------------------------------+
Total non-orphans: 68
Histogram of component health for possibly orphaned objects
+-------------------------------------+------------------------------+
| Num Healthy Comps / Total Num Comps | Num objects with such status |
+-------------------------------------+------------------------------+
| 1/3                                 | 1                            |
+-------------------------------------+------------------------------+
Total orphans: 1
Total v1 objects: 0
Total v2 objects: 0
Total v2.5 objects: 0
Total v3 objects: 0
Total v5 objects: 68

Example 2 - Add a table with all objects and their status to the command output. The table reveals which object is actually orphaned.

> vsan.obj_status_report ~cluster -t
Querying all VMs on VSAN ...
Querying all objects in the system from esx1.virten.lab ...
Querying all disks in the system ...
Querying all components in the system ...
Got all the info, computing table ...
Histogram of component health for non-orphaned objects
+-------------------------------------+------------------------------+
| Num Healthy Comps / Total Num Comps | Num objects with such status |
+-------------------------------------+------------------------------+
| 3/3                                 | 45                           |
| 7/7                                 | 23                           |
+-------------------------------------+------------------------------+
Total non-orphans: 68
Histogram of component health for possibly orphaned objects
+-------------------------------------+------------------------------+
| Num Healthy Comps / Total Num Comps | Num objects with such status |
+-------------------------------------+------------------------------+
| 1/3                                 | 1                            |
+-------------------------------------+------------------------------+
Total orphans: 1
+-----------------------------------------------------------------------------+---------+---------------------------+
| VM/Object                                                                   | objects | num healthy / total comps |
+-----------------------------------------------------------------------------+---------+---------------------------+
| perf9                                                                       | 1       |                           |
|    [vsanDatastore] 735ec152-da7c-64b1-ebfe-eca86bf99b3f/perf9.vmx           |         | 7/7                       |
| perf8                                                                       | 1       |                           |
|    [vsanDatastore] 195ec152-92a3-491b-801d-eca86bf99b3f/perf8.vmx           |         | 7/7                       |
[...]
+-----------------------------------------------------------------------------+---------+---------------------------+
| Unassociated objects                                                        |         |                           |
[...]
|    795dc152-6faa-9ae7-efe5-001b2193b9a4                                     |         | 3/3                       |
|    d068ab52-b882-c6e8-32ca-eca86bf99b3f                                     |         | 1/3*                      |
|    ce5dc152-c5f6-3efb-9a44-001b2193b3b0                                     |         | 3/3                       |
+-----------------------------------------------------------------------------+---------+---------------------------+
+------------------------------------------------------------------+
| Legend: * = all unhealthy comps were deleted (disks present)     |
|         - = some unhealthy comps deleted, some not or can't tell |
|         no symbol = We cannot conclude any comps were deleted    |
+------------------------------------------------------------------+

Example 3 - Add a filtered table showing only unhealthy objects. Here the table is filtered to objects with 1/3 healthy components.

> vsan.obj_status_report ~cluster -t -f 1/3
[...]
+-----------------------------------------+---------+---------------------------+
| VM/Object                               | objects | num healthy / total comps |
+-----------------------------------------+---------+---------------------------+
| Unassociated objects                    |         |                           |
|    d068ab52-b882-c6e8-32ca-eca86bf99b3f |         | 1/3*                      |
+-----------------------------------------+---------+---------------------------+
[...]
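
If you capture the histogram output in a script, the degraded rows can be picked out automatically. The following Python snippet is only a sketch: it assumes the row layout shown in the examples above, and the names `ROW` and `degraded_rows` are made up for this illustration and are not part of RVC.

```python
import re

# One histogram row looks like "| 3/3 | 45 |"; the column padding may vary.
ROW = re.compile(r"\|\s*(\d+)/(\d+)\s*\|\s*(\d+)\s*\|")


def degraded_rows(report):
    """Return (healthy, total, objects) for rows where healthy < total."""
    rows = [(int(h), int(t), int(n)) for h, t, n in ROW.findall(report)]
    return [row for row in rows if row[0] < row[1]]


# Rows copied from the two histograms in Example 1.
sample = "| 3/3 | 45 | | 7/7 | 23 | | 1/3 | 1 |"
for healthy, total, count in degraded_rows(sample):
    print(f"{count} object(s) with only {healthy}/{total} healthy components")
```

The pattern only matches histogram rows, so headers and border lines are ignored.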

vsan.check_state [-r|-e|-f] ~cluster|~host

Checks the state of VMs and vSAN objects. This command can also re-register VMs for which vCenter, hostd and the vmx file are out of sync.

- -r, --refresh-state: Not just check state, but also refresh

- -e, --reregister-vms: Not just check for vms with VC/hostd/vmx out of sync but also fix them by un-registering and re-registering them

- -f, --force: Force to re-register vms, without confirmation

Example 1 – Check state:

> vsan.check_state ~cluster
Step 1: Check for inaccessible vSAN objects
Detected 0 objects to be inaccessible
Step 2: Check for invalid/inaccessible VMs
Step 3: Check for VMs for which VC/hostd/vmx are out of sync
Did not find VMs for which VC/hostd/vmx are out of sync

vsan.fix_renamed_vms [-f] ~vm

This command fixes virtual machines that vCenter has renamed to their vmx file path. This can happen when storage becomes inaccessible: a VM ends up with a name like "/vmfs/volumes/vsanDatastore/foo/foo.vmx". The command renames such a VM back to "foo".

It is a best effort command, as the real VM name is unknown.

- -f, --force: Force to fix name (No asking)

Example 1 – Fix a renamed VM:

> vsan.fix_renamed_vms ~/vms/vmfs/volumes/vsanDatastore/vma.virten.lab/vma.virten.lab.vmx/
Continuing this command will rename the following VMs:
/vmfs/volumes/vsanDatastore/vma.virten.lab/vma.virten.lab.vmx -> vma.virten.lab
Do you want to continue [y/N]? y
Renaming...
Rename /vmfs/volumes/vsanDatastore/vma.virten.lab/vma.virten.lab.vmx: success
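
The renaming rule can be illustrated in a few lines of Python. This is a sketch of the idea only, not RVC's actual implementation: it assumes the new name is simply the vmx file name without its directory and extension, and `guess_vm_name` is a hypothetical helper.

```python
from pathlib import PurePosixPath


def guess_vm_name(vmx_path):
    """Guess a VM name from a .vmx path: drop the directories and the extension."""
    return PurePosixPath(vmx_path).stem


print(guess_vm_name("/vmfs/volumes/vsanDatastore/foo/foo.vmx"))
```

This also shows why the command is best effort: if the VM's display name was different from its vmx file name, the original name cannot be derived from the path.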

vsan.reapply_vsan_vmknic_config [-v|-d] ~host

Unbinds and rebinds vSAN to its VMkernel ports. This can be useful after network configuration problems in your vSAN cluster, for example when vSAN detected a network partition and you have since fixed the network configuration.

- -v, --vmknic: Refresh a specific vmknic. default is all vmknics

- -d, --dry-run: Do a dry run: Show what changes would be made

Example 1 – Run the command in dry-run mode:

> vsan.reapply_vsan_vmknic_config -d ~esx

Host: vesx1.virten.lab

Would reapply config of vmknic vmk2:

AgentGroupMulticastAddress: 224.2.3.4

AgentGroupMulticastPort: 23451

IPProtocol: IP

InterfaceUUID: 8cbca458-2ccd-0606-125a-005056b968bd

MasterGroupMulticastAddress: 224.1.2.3

MasterGroupMulticastPort: 12345

MulticastTTL: 5

Example 2 – Rebind vSAN to VMkernel port:

> vsan.reapply_vsan_vmknic_config ~esx

Host: vesx1.virten.lab

Reapplying config of vmk2:

AgentGroupMulticastAddress: 224.2.3.4

AgentGroupMulticastPort: 23451

IPProtocol: IP

InterfaceUUID: 8cbca458-2ccd-0606-125a-005056b968bd

MasterGroupMulticastAddress: 224.1.2.3

MasterGroupMulticastPort: 12345

MulticastTTL: 5

Unbinding vSAN from vmknic vmk2 ...

Rebinding vSAN to vmknic vmk2 ...

vsan.vm_perf_stats [-i|-s] ~vm

Displays virtual machine performance statistics.

- -i, --interval: Time interval to compute average over (default: 20)

- -s, --show-objects: Show objects that are part of VM

The following metrics are supported:

- IOPS (read/write)

- Throughput in KB/s (read/write)

- Latency in ms (read/write)

Example 1 – Display performance stats (Default: 20sec interval):

> vsan.vm_perf_stats ~vm
Querying info about VMs ...
Querying VSAN objects used by the VMs ...
Fetching stats counters once ...
Sleeping for 20 seconds ...
Fetching stats counters again to compute averages ...
Got all data, computing table
+-----------+--------------+------------------+--------------+
| VM/Object | IOPS         | Tput (KB/s)      | Latency (ms) |
+-----------+--------------+------------------+--------------+
| win7      | 325.1r/80.6w | 20744.8r/5016.8w | 2.4r/12.2w   |
+-----------+--------------+------------------+--------------+

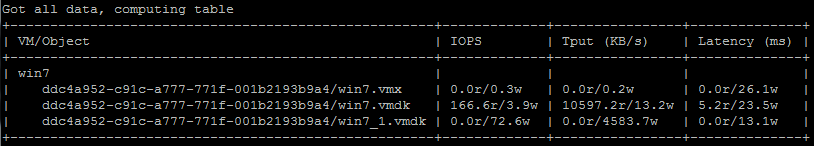

Example 2 - Display all VM objects performance stats with an interval of 5 seconds:

> vsan.vm_perf_stats --show-objects --interval=5 ~vm
Querying info about VMs ...
Querying VSAN objects used by the VMs ...
Fetching stats counters once ...
Sleeping for 5 seconds ...
Fetching stats counters again to compute averages ...
Got all data, computing table
+-----------------------------------------------------+-------------+----------------+--------------+
| VM/Object                                           | IOPS        | Tput (KB/s)    | Latency (ms) |
+-----------------------------------------------------+-------------+----------------+--------------+
| win7                                                |             |                |              |
|    ddc4a952-c91c-a777-771f-001b2193b9a4/win7.vmx    | 0.0r/0.3w   | 0.0r/0.2w      | 0.0r/26.1w   |
|    ddc4a952-c91c-a777-771f-001b2193b9a4/win7.vmdk   | 166.6r/3.9w | 10597.2r/13.2w | 5.2r/23.5w   |
|    ddc4a952-c91c-a777-771f-001b2193b9a4/win7_1.vmdk | 0.0r/72.6w  | 0.0r/4583.7w   | 0.0r/13.1w   |
+-----------------------------------------------------+-------------+----------------+--------------+
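
Each cell combines a read and a write value, such as "325.1r/80.6w". As a rough sketch, the cells can be split in Python and used to derive the average I/O size (throughput divided by IOPS). The helper names `parse_rw` and `avg_io_size_kb` are assumptions for this illustration and are not RVC functions.

```python
import re

# A cell such as "325.1r/80.6w" holds a read value and a write value.
CELL = re.compile(r"([\d.]+)r/([\d.]+)w")


def parse_rw(cell):
    """Split an 'Xr/Yw' cell into (read, write) floats."""
    match = CELL.fullmatch(cell.strip())
    if match is None:
        raise ValueError(f"unexpected cell format: {cell!r}")
    return float(match.group(1)), float(match.group(2))


def avg_io_size_kb(tput_cell, iops_cell):
    """Average I/O size in KB per direction: throughput divided by IOPS."""
    tput = parse_rw(tput_cell)
    iops = parse_rw(iops_cell)
    # Idle directions report 0 IOPS; return 0.0 instead of dividing by zero.
    return tuple(t / i if i else 0.0 for t, i in zip(tput, iops))


# Values taken from the win7 row in Example 1.
read_kb, write_kb = avg_io_size_kb("20744.8r/5016.8w", "325.1r/80.6w")
print(f"avg read I/O: {read_kb:.1f} KB, avg write I/O: {write_kb:.1f} KB")
```

For the win7 VM in Example 1 this works out to an average I/O size of roughly 62 to 64 KB in both directions.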

vsan.clear_disks_cache

Clears the cached disk information held in $disksCache, an internal RVC variable.

Example 1 – Clear disks cache (No output):

> vsan.clear_disks_cache

vsan.lldpnetmap ~cluster|~host

Displays the mapping between physical network interfaces and physical switches by using Link Layer Discovery Protocol (LLDP).

Example 1 – Display LLDP information from all hosts:

> vsan.lldpnetmap ~cluster
This operation will take 30-60 seconds ...
+-------------------+--------------+
| Host              | LLDP info    |
+-------------------+--------------+
| esx2.virten.local | s320: vmnic1 |
| esx3.virten.local | s320: vmnic1 |
| esx1.virten.local | s320: vmnic1 |
+-------------------+--------------+

vsan.recover_spbm [-d|-f] ~cluster|~host

This command recovers Storage Policy Based Management associations.

- -d, --dry-run: Don't take any automated actions

- -f, --force: Answer all questions with 'yes'

Example 1 – Recover SPBM profile association:

> vsan.recover_spbm -f ~cluster
Fetching Host info
Fetching Datastore info
Fetching VM properties
Fetching policies used on VSAN from CMMDS
Fetching SPBM profiles
Fetching VM <-> SPBM profile association
Computing which VMs do not have a SPBM Profile ...
Fetching additional info about some VMs
Got all info, computing after 0.66 sec
Done computing
SPBM Profiles used by VSAN:
+---------------------+---------------------------+
| SPBM ID             | policy                    |
+---------------------+---------------------------+
| Not managed by SPBM | proportionalCapacity: 100 |
|                     | hostFailuresToTolerate: 1 |
+---------------------+---------------------------+
| Not managed by SPBM | hostFailuresToTolerate: 1 |
+---------------------+---------------------------+

vsan.support_information [-s] ~cluster

Use this command to gather information for support cases with VMware.

- -s, --skip-hostvm-info: Skip collecting host and vm information in vSAN cluster

This command runs several other commands:

- vsan.cluster_info

- vsan.host_info (once per host)

- vsan.vm_object_info (once per host)

- vsan.disks_info

- vsan.disks_stats

- vsan.check_limits

- vsan.check_state

- vsan.lldpnetmap

- vsan.obj_status_report

- vsan.resync_dashboard

- vsan.disk_object_info

Example 1 – Gather Support Information (Command output omitted):

> vsan.support_information ~cluster
VMware virtual center af2287bd-1dff-42a2-812d-bbaa9777221e
*** command> vsan.support_information vSAN65
*** command> vsan.cluster_info vSAN65
*** command> vsan.host_info vesx1.virten.lab
*** command> vsan.vm_object_info
*** command> vsan.host_info vesx3.virten.lab
*** command> vsan.vm_object_info
*** command> vsan.host_info vesx2.virten.lab
*** command> vsan.vm_object_info
*** command> vsan.disks_info
*** command> vsan.disks_stats
*** command> vsan.check_limits vSAN65
*** command> vsan.check_state vSAN65
*** command> vsan.lldpnetmap vSAN65
*** command> vsan.obj_status_report vSAN65
*** command> vsan.resync_dashboard vSAN65
*** command> vsan.disk_object_info vSAN65, disk_uuids
Total time taken - 62.86973495 seconds
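
The full output is long, so it can help to split a saved log into one section per sub-command. The following Python sketch assumes the log was saved as text and that every sub-command starts with the "*** command> " marker seen above. The function name `split_sections` and the placeholder output lines are made up for this illustration.

```python
MARKER = "*** command> "


def split_sections(log):
    """Return a list of (command line, output text) pairs from a saved log."""
    sections = []
    # Everything before the first marker is preamble and is skipped.
    for part in log.split(MARKER)[1:]:
        header, _, body = part.partition("\n")
        sections.append((header.strip(), body))
    return sections


# The command lines come from Example 1; the output lines are placeholders.
sample = (
    "VMware virtual center af2287bd-1dff-42a2-812d-bbaa9777221e\n"
    "*** command> vsan.cluster_info vSAN65\n"
    "placeholder output line 1\n"
    "*** command> vsan.check_limits vSAN65\n"
    "placeholder output line 2\n"
)
for command, output in split_sections(sample):
    print(command, "->", len(output.splitlines()), "line(s)")
```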

vsan.purge_inaccessible_vswp_objects [-f] ~cluster

Searches for and deletes inaccessible vswp objects on a vSAN cluster.

ESXi uses a VM's vswp file for memory swapping while the VM is running. In vSAN, a vswp file is stored as a separate vSAN object. When a vswp object becomes inaccessible, memory swapping is no longer possible and the VM may crash the next time ESXi tries to swap memory for it. Deleting the inaccessible vswp object will not make things worse, but it eliminates the possibility for the object to regain accessibility later if the issue is only temporary (e.g. due to a network failure or planned maintenance).

Due to a known issue in vSphere 5.5, it is possible for vSAN to have done incomplete deletions of vswp objects. In such cases, the majority of components of such objects were deleted while a minority of components were left unavailable (e.g. due to one host being temporarily down at the time of deletion). It is then possible for the minority to resurface and present itself as an inaccessible object because a minority can never gain quorum. Such objects waste space and cause issues for any operations involving data evacuations from hosts or disks. This command employs heuristics to detect this kind of left-over vswp objects in order to delete them.

Deleting the vswp object does not cause data loss. The vswp object is regenerated the next time the VM is powered on.

- -f, --force: Force to delete the inaccessible vswp objects quietly (no interactive confirmations)

Example 1 – Search and delete inaccessible vswp objects:

> vsan.purge_inaccessible_vswp_objects ~cluster
Collecting all inaccessible vSAN objects...
Found 0 inaccessbile objects.
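
The reason a left-over minority stays inaccessible can be shown with a simple quorum check. This is a simplified sketch that assumes every component counts equally, which is not necessarily how vSAN weights components internally. `has_quorum` is a hypothetical helper.

```python
def has_quorum(available, total):
    """True when strictly more than half of an object's components are available."""
    return 2 * available > total


print(has_quorum(2, 3))  # majority of a 3-component object
print(has_quorum(1, 3))  # left-over minority after an incomplete deletion
```

With one of three components left, the check can never succeed, which is why such objects remain inaccessible until they are purged.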

vsan.scrubber_info ~cluster

For every host, the command will list each VM and its disks, and display several metrics related to the Virtual SAN background task known as "scrubbing".

Scrubbing is responsible for periodically reading through the entire address space of every object stored on Virtual SAN, for the purpose of finding latent sector errors on the physical disks backing vSAN.

Note that this is a background task performed by Virtual SAN automatically. It runs slowly on purpose so that it does not impact production workloads. This command is intended to give some insight into this background task.

Example 1 – Display scrubber info:

> vsan.scrubber_info ~cluster
+------+------------------+
| Host | vesx2.virten.lab |
+------+------------------+
+-----------------------------------------------------------------+-------------------+-----------------+------------------+
| Obj Name                                                        | Total object Size | Errors detected | Errors recovered |
+-----------------------------------------------------------------+-------------------+-----------------+------------------+
| VM: vMA                                                         |                   |                 |                  |
|   [vsanDatastore] b28df658-c089-2d82-5649-005056b9f17c/vMA.vmx  | 510.0 GB          | 0               | 0                |
|   [vsanDatastore] b28df658-c089-2d82-5649-005056b9f17c/vMA.vmdk | 6.0 GB            | 0               | 0                |
+-----------------------------------------------------------------+-------------------+-----------------+------------------+
+------+------------------+
| Host | vesx3.virten.lab |
+------+------------------+
+--------------------------------------------------------------------+-------------------+-----------------+------------------+
| Obj Name                                                           | Total object Size | Errors detected | Errors recovered |
+--------------------------------------------------------------------+-------------------+-----------------+------------------+
| VM: testvm                                                         |                   |                 |                  |
|   [vsanDatastore] da00a658-f668-f186-af1c-005056b9f17c/testvm.vmx  | 510.0 GB          | 0               | 0                |
|   [vsanDatastore] da00a658-f668-f186-af1c-005056b9f17c/testvm.vmdk | 12.0 GB           | 0               | 0                |
+--------------------------------------------------------------------+-------------------+-----------------+------------------+
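
On larger clusters, this table gets long. As a sketch, the rows can be filtered in Python for objects where more errors were detected than recovered. This assumes the row layout shown above and that the two counters can be compared in this way. The names `ROW` and `unrecovered` and the non-zero error counts in the sample are made up for this illustration.

```python
import re

# Object rows have four cells: name, size (e.g. "6.0 GB"), detected, recovered.
ROW = re.compile(
    r"\|\s*([^|\n]+?)\s*\|\s*([\d.]+ [A-Z]+)\s*\|\s*(\d+)\s*\|\s*(\d+)\s*\|"
)


def unrecovered(report):
    """Return (object name, detected, recovered) where detected > recovered."""
    hits = []
    for name, _size, detected, recovered in ROW.findall(report):
        if int(detected) > int(recovered):
            hits.append((name, int(detected), int(recovered)))
    return hits


# Object names come from Example 1; the error counts in the second row are invented.
sample = (
    "| [vsanDatastore] b28df658-c089-2d82-5649-005056b9f17c/vMA.vmx | 510.0 GB | 0 | 0 |\n"
    "| [vsanDatastore] b28df658-c089-2d82-5649-005056b9f17c/vMA.vmdk | 6.0 GB | 2 | 1 |\n"
)
for name, detected, recovered in unrecovered(sample):
    print(f"{name}: {detected} detected, {recovered} recovered")
```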
