I got my hands on Intel's first 3D XPoint-based SSD to find out how it performs.

3D XPoint is a new non-volatile memory technology that has been developed by Intel and Micron.

At 32GB, it doesn't make sense to buy these drives for anything other than their intended use case: a cache device that accelerates a slower SSD or HDD. If you want to use Optane technology as a VM datastore, wait a couple of months until devices with a higher capacity are available.

Specification Comparison

Before starting with benchmarks or talking about capabilities, let's have a look at the specifications. I've picked some common consumer NVMe SSDs to compare against the new 32GB Intel Optane:

| | Intel Optane | Samsung 950 PRO | Samsung 960 PRO | Samsung 960 EVO | Intel 600P |
|---|---|---|---|---|---|
| Cell | 3D XPoint | 3D NAND MLC | 3D NAND MLC | 3D NAND TLC | 3D NAND TLC |
| Capacity | 32 GB | 256 GB | 512 GB | 250 GB | 256 GB |
| Sequential Read | 1.350 MB/s | 2.200 MB/s | 3.500 MB/s | 3.200 MB/s | 1.570 MB/s |
| Sequential Write | 290 MB/s | 900 MB/s | 2.100 MB/s | 1.500 MB/s | 540 MB/s |
| Random Read | 240.000 IOPS | 270.000 IOPS | 330.000 IOPS | 330.000 IOPS | 71.000 IOPS |
| Random Write | 65.000 IOPS | 85.000 IOPS | 330.000 IOPS | 300.000 IOPS | 112.000 IOPS |
| Read Latency | 7 µs | | | | |
| Write Latency | 18 µs | | | | |
| Endurance | 182,5 TB | 200 TB | 400 TB | 100 TB | 144 TB |
| DWPD* | 3,125 | 0,428 | 0,428 | 0,365 | 0,308 |
| Warranty | 5 years | 5 years | 5 years | 3 years | 5 years |
| Street Price | $80 | $170 | $300 | $120 | $200 |
| Price/GB | $2,50 | $0,66 | $0,59 | $0,48 | $0,78 |

*DWPD (Drive Writes Per Day) is a calculated value based on Capacity, Endurance, and Warranty.
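
For anyone who wants to check the DWPD and Price/GB rows, here is a minimal Python sketch of the arithmetic. It assumes decimal units (1 TB = 1000 GB) and 365-day years. The function names `dwpd` and `price_per_gb` are my own for this illustration. The input values are copied from the specification table.

```python
# Spec-sheet values from the comparison table:
# name -> (capacity in GB, endurance in TB, warranty in years, street price in USD)
DRIVES = {
    "Intel Optane": (32, 182.5, 5, 80),
    "Samsung 950 PRO": (256, 200, 5, 170),
    "Samsung 960 PRO": (512, 400, 5, 300),
    "Samsung 960 EVO": (250, 100, 3, 120),
    "Intel 600P": (256, 144, 5, 200),
}


def dwpd(capacity_gb, endurance_tb, warranty_years):
    """Drive writes per day: rated endurance spread evenly over the warranty period."""
    # Assumption: 1 TB = 1000 GB and one year = 365 days.
    return (endurance_tb * 1000) / (capacity_gb * warranty_years * 365)


def price_per_gb(price_usd, capacity_gb):
    """Street price divided by capacity."""
    return price_usd / capacity_gb


for name, (capacity, endurance, warranty, price) in DRIVES.items():
    print(f"{name:16} DWPD {dwpd(capacity, endurance, warranty):.3f}  "
          f"${price_per_gb(price, capacity):.2f}/GB")
```

The endurance rating is divided by the total capacity that could be written if the drive were filled once per day for the whole warranty period, which is why a small drive with a high endurance rating ends up with a high DWPD.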

Judging by these specifications alone, nothing about 3D XPoint looks particularly special. The one remarkable advantage is endurance: at 3,125 DWPD, the Optane is rated for roughly seven to ten times as many drive writes per day as the NAND-based drives, despite its low capacity.

Read/Write Performance

That is not to say that 1.350 MB/s and 240.000 IOPS are slow, but it falls short of what many expected when 3D XPoint was first announced in 2015. Of course, there is more to come, as the 32GB Optane SSD is only the first available device and has a relatively small capacity. To understand why the Optane SSD still has a right to exist, you have to understand where it is supposed to excel: low queue depth and endurance.

Latency

Intel is the only vendor that publishes latency figures in its specifications. Samsung, for example, takes another approach in a white paper: they calculate latency from the IOPS achieved at a high queue depth. To deliver 330.000 IOPS one IO at a time, each IO would have to complete in about 3 µs (microseconds). That is not a realistic value, because such IOPS numbers can only be achieved with parallel IO, not with single IO operations. The specification also lists IOPS at QD1, where the 960 PRO achieves 14.000 read IOPS and 50.000 write IOPS, which corresponds to about 70 µs and 20 µs per IO.
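
The conversion between IOPS and latency is simple enough to sketch in a few lines of Python. The function names are hypothetical. The queue depth of 32 in the last call is an assumption for illustration only, since the text does not say at which queue depth the 330.000 IOPS figure was measured.

```python
def serial_latency_us(iops):
    """Time budget per IO in microseconds if requests are issued one at a time (QD1)."""
    return 1_000_000 / iops


def average_latency_us(iops, queue_depth):
    """Little's law: with queue_depth IOs in flight, each IO may take queue_depth times longer."""
    return queue_depth * 1_000_000 / iops


print(f"330000 IOPS, serial:      {serial_latency_us(330_000):.2f} us")
print(f"960 PRO QD1 read  (14000): {serial_latency_us(14_000):.1f} us")
print(f"960 PRO QD1 write (50000): {serial_latency_us(50_000):.1f} us")
# Assumed queue depth of 32, purely to show why the 3 us figure is misleading:
print(f"330000 IOPS at QD32:      {average_latency_us(330_000, 32):.1f} us")
```

With many requests in flight, a drive can reach a high IOPS number even though each individual request takes far longer than the naive 1/IOPS value suggests.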

System Requirements / Support

To use the Optane SSD, you need a mainboard and CPU that support 7th generation Core (Kaby Lake) and an M.2 slot (PCIe Gen 3 x4, NVMe). As far as ESXi is concerned, there is no difference from a common M.2 NVMe-based SSD such as the Samsung 950 Pro. It should work out of the box, although it is (needless to say) not officially supported. In fact, only one Optane-based SSD is on the HCL at the moment: the 375GB Intel P4800X Series.

Performance Test

I do not plan to run extensive tests with various devices. For this article, I'm just running quick fio benchmarks with the 16GB Optane and an older Samsung 950 Pro SSD. If you want to read a more detailed benchmark review, I recommend this article by storagereview.com.

The following components have been used for the benchmark:

- Intel NUC7i7BNH

- ESXi 6.5 U1 (Build 5969303)

- Debian 8.0

- VMware Paravirtual SCSI Controller

- Flexible IO Tester (fio)

- Intel Optane M.2 16GB

- Samsung 950 PRO 256GB

- Driver: nvme 1.2.0.32-4vmw.650.1.26.5969303

Test 1 - Sequential write with dd

The first test is a warm-up. Expected values are 145 MB/s for the Optane and 900 MB/s for the 950 PRO.

# dd if=/dev/zero of=/dev/sdb bs=1M

- Optane 16GB: 151 MB/s

- Samsung 950 PRO: 932 MB/s

Test 2 - Sequential write (QD=1)

The options used are "rw=write" for sequential writes, "direct=1" (non-buffered I/O), "blocksize=4M" (4MB block size) and "runtime=30" (timed benchmark, 30 seconds). No queue depth is specified, so fio's default of 1 is used.

# fio --rw=write --direct=1 --ioengine=libaio --time_based --runtime=30 --blocksize=4M

- Optane 16GB: 143 MB/s

- Samsung 950 PRO: 516 MB/s

Test 3 - Sequential write (QD=32)

The same test again, but now with "--iodepth=32" to keep 32 IO units in flight.

# fio --rw=write --direct=1 --ioengine=libaio --time_based --blocksize=4M --iodepth=32

- Optane 16GB: 145 MB/s

- Samsung 950 PRO: 900 MB/s

Test 4 - Random write

Now I switch the "--rw=randwrite" option to simulate random write requests. I'm running the test 3 times with a queue depth of 1, 4 and 32. For random IO, a higher queue depth should drastically increase the performance and show the impact of "Queue Depth".

# fio --rw=randwrite --direct=1 --ioengine=libaio --time_based --iodepth=1

# fio --rw=randwrite --direct=1 --ioengine=libaio --time_based --iodepth=4

# fio --rw=randwrite --direct=1 --ioengine=libaio --time_based --iodepth=32

- Optane 16GB: 19866 IOPS (QD=1)

- Optane 16GB: 37555 IOPS (QD=4)

- Optane 16GB: 37564 IOPS (QD=32)

- Samsung 950 PRO: 28880 IOPS (QD=1)

- Samsung 950 PRO: 77194 IOPS (QD=4)

- Samsung 950 PRO: 79749 IOPS (QD=32)
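
If you want to script such a queue depth sweep, a small Python helper can build the command lines. This is only a sketch under assumptions: the job name `bench`, the target `/dev/sdb` (taken from the dd test) and the 30 second runtime are my choices for illustration and are not part of the commands shown above. The helper name `fio_command` is hypothetical as well.

```python
import shlex


def fio_command(rw, iodepth, filename="/dev/sdb", runtime=30, blocksize=None):
    """Build a fio argument list for one run; nothing is executed here."""
    args = [
        "fio",
        "--name=bench",            # assumption: arbitrary job name
        f"--filename={filename}",  # assumption: same device as in the dd test
        f"--rw={rw}",
        "--direct=1",
        "--ioengine=libaio",
        "--time_based",
        f"--runtime={runtime}",    # assumption: 30 seconds, as in Test 2
        f"--iodepth={iodepth}",
    ]
    if blocksize:
        args.append(f"--blocksize={blocksize}")
    return args


# Print the three random write runs instead of executing them.
for depth in (1, 4, 32):
    print(shlex.join(fio_command("randwrite", depth)))
```

The list returned by `fio_command` can be passed to `subprocess.run` as is. Keep in mind that write tests against a raw device destroy all data on it.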

Test 5 - Sequential read

The next benchmark tests sequential reads. Again, I'm testing with a queue depth of 1, 4 and 32.

# fio --rw=read --direct=1 --ioengine=libaio --time_based --blocksize=4M --iodepth=1

# fio --rw=read --direct=1 --ioengine=libaio --time_based --blocksize=4M --iodepth=4

# fio --rw=read --direct=1 --ioengine=libaio --time_based --blocksize=4M --iodepth=32

- Optane 16GB: 841 MB/s (QD=1)

- Optane 16GB: 886 MB/s (QD=4)

- Optane 16GB: 811 MB/s (QD=32)

- Samsung 950 PRO: 2085 MB/s (QD=1)

- Samsung 950 PRO: 2197 MB/s (QD=4)

- Samsung 950 PRO: 2197 MB/s (QD=32)

Test 6 - Random read

Now I switch to the "--rw=randread" option to simulate random read requests. I'm running the test 3 times with a queue depth of 1, 4 and 32.

# fio --rw=randread --direct=1 --ioengine=libaio --time_based --iodepth=1

# fio --rw=randread --direct=1 --ioengine=libaio --time_based --iodepth=4

# fio --rw=randread --direct=1 --ioengine=libaio --time_based --iodepth=32

- Optane 16GB: 33448 IOPS (QD=1)

- Optane 16GB: 87514 IOPS (QD=4)

- Optane 16GB: 220421 IOPS (QD=32)

- Samsung 950 PRO: 10959 IOPS (QD=1)

- Samsung 950 PRO: 45139 IOPS (QD=4)

- Samsung 950 PRO: 198333 IOPS (QD=32)
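
To make the random read results easier to compare, the following Python sketch computes the Optane's advantage at each queue depth and how well each drive scales from QD1 to QD32. The numbers are the measured values from the list above. The names are my own for this illustration.

```python
# Random read IOPS from Test 6, keyed by queue depth.
OPTANE = {1: 33448, 4: 87514, 32: 220421}
SAMSUNG_950_PRO = {1: 10959, 4: 45139, 32: 198333}


def advantage(queue_depth):
    """How many times more IOPS the Optane delivers than the 950 PRO."""
    return OPTANE[queue_depth] / SAMSUNG_950_PRO[queue_depth]


def scaling(results):
    """IOPS gain when going from QD1 to QD32."""
    return results[32] / results[1]


for qd in sorted(OPTANE):
    print(f"QD={qd:<2}  Optane vs. 950 PRO: {advantage(qd):.2f}x")

print(f"Optane  QD1 -> QD32: {scaling(OPTANE):.1f}x")
print(f"950 PRO QD1 -> QD32: {scaling(SAMSUNG_950_PRO):.1f}x")
```

The Optane's lead is largest at QD1 and shrinks as the queue depth grows, because the NAND-based drive depends much more on parallel requests to reach its peak.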

Test 7 - Random read latency

For the next benchmark, I'm configuring fio to create a constant read IOPS stream (--rate_iops=5000) and measure the latency. The runtime has been increased to 60 seconds to get more reliable results. The queue depth does not have a huge impact here.

# fio --rw=randread --direct=1 --ioengine=libaio --time_based --runtime=60 --rate_iops=5000

- Optane 16GB: 215µs (99,99th percentile)

- Optane 16GB: 43µs (99,00th percentile)

- Samsung 950 PRO: 314µs (99,99th percentile)

- Samsung 950 PRO: 102µs (99,00th percentile)

Note: "43µs (99,00th percentile)" states that 99% of all requests are answered within 43 microseconds.
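
To illustrate what such a percentile means, here is a minimal nearest-rank sketch in Python. It is not how fio computes its percentiles internally, and the latency samples are made-up numbers, not measurements from the drives.

```python
import math


def percentile(samples, pct):
    """Nearest-rank percentile: smallest sample with at least pct % of all samples <= it."""
    ordered = sorted(samples)
    rank = math.ceil(pct * len(ordered) / 100)  # 1-based rank
    return ordered[max(rank, 1) - 1]


# Hypothetical completion latencies in microseconds (illustration only):
# 990 fast requests, 9 slower ones and a single outlier.
latencies_us = [40] * 990 + [200] * 9 + [5000]

print("99th percentile:   ", percentile(latencies_us, 99), "us")
print("99.99th percentile:", percentile(latencies_us, 99.99), "us")
```

In this made-up sample, 99% of the requests complete within 40 µs, while the 99,99th percentile is dominated by the single outlier. That is why both values are reported in the results.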

Test 8 - Random write latency

Same test, but now with a constant random write stream of 1000 IOPS.

# fio --rw=randwrite --direct=1 --ioengine=libaio --time_based --runtime=60 --rate_iops=1000

- Optane 16GB: 171µs (99,99th percentile)

- Optane 16GB: 53µs (99,00th percentile)

- Samsung 950 PRO: 229µs (99,99th percentile)

- Samsung 950 PRO: 40µs (99,00th percentile)

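Conclusion: As mentioned above, Optane drives are supposed to deliver great read performance at a low queue depth, and the tests confirm it: at QD1, the Optane delivered roughly three times the random read IOPS of the 950 PRO (33448 vs. 10959). It also has a lower read latency (43µs vs. 102µs at the 99th percentile), which makes it a superior cache device. Overall performance in raw values (IOPS, MB/s) is good, but not outstanding.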

Intel NVMe Driver

ESXi includes VMware's own NVMe driver, which was used for the tests above. Intel also provides a driver, which is mainly targeted at enterprise SSDs like the DC P3xxx, P4xxx, or the P4800X.

The driver for vSphere 6.5 is available for download. The download contains an offline zip bundle and a vib file. The zip file you download is not itself the installable bundle, so extract it first. Then copy the offline bundle to your ESXi host, install it and reboot the system:

# esxcli software vib install -d /VMW-ESX-6.5.0-intel-nvme-1.2.1.15-offline_bundle-5330543.zip
Installation Result
   Message: The update completed successfully, but the system needs to be rebooted for the changes to be effective.
   Reboot Required: true
   VIBs Installed: INT_bootbank_intel-nvme_1.2.1.15-1OEM.650.0.0.4598673
   VIBs Removed:
   VIBs Skipped:
# reboot

Now you have two NVMe drivers installed and have to figure out which one is used for the Optane. As you can see in the output below, vmhba1, which is the Optane SSD, uses the intel-nvme driver.

[root@esx3:~] esxcli software vib list |grep nvme
intel-nvme  1.2.1.15-1OEM.650.0.0.4598673  INT  VMwareCertified  2017-08-30
nvme        1.2.0.32-4vmw.650.1.26.5969303 VMW  VMwareCertified  2017-07-30
[root@esx3:~] esxcli storage core adapter list
HBA Name  Driver      Link State  UID                                   Capabilities         Description
--------  ----------  ----------  ------------------------------------  -------------------  -----------------------------------------------------------------------
vmhba0    vmw_ahci    link-n/a    sata.vmhba0                                                (0000:00:17.0) Intel Corporation Sunrise Point-LP AHCI Controller
vmhba1    intel-nvme  link-n/a    pscsi.vmhba1                                               (0000:03:00.0) Intel Corporation Non-Volatile memory controller
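
If you have to check this on more than one host, the adapter list can be parsed with a few lines of Python. The sketch below assumes whitespace-separated columns with the adapter name first and the driver second, as in the output above. The function name `drivers_by_adapter` is hypothetical, and the sample text is shortened from that output.

```python
# Shortened sample of "esxcli storage core adapter list" output from this host.
ADAPTER_LIST = """\
HBA Name  Driver      Link State  UID           Capabilities  Description
--------  ----------  ----------  ------------  ------------  -----------
vmhba0    vmw_ahci    link-n/a    sata.vmhba0                 (0000:00:17.0) Intel Corporation Sunrise Point-LP AHCI Controller
vmhba1    intel-nvme  link-n/a    pscsi.vmhba1                (0000:03:00.0) Intel Corporation Non-Volatile memory controller
"""


def drivers_by_adapter(text):
    """Map each vmhba adapter to the driver listed in the second column."""
    drivers = {}
    for line in text.splitlines():
        fields = line.split()
        # Skip the header and separator lines; data rows start with "vmhba".
        if len(fields) >= 2 and fields[0].startswith("vmhba"):
            drivers[fields[0]] = fields[1]
    return drivers


print(drivers_by_adapter(ADAPTER_LIST))
```

For the sample above, this maps vmhba1 to intel-nvme, which matches what the listing shows.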

I ran the same fio tests again and couldn't see any difference in performance. The Intel SSD Data Center Tool, which I had hoped would work with the Optane SSD, did not recognize the device either. I guess it is not necessary to use the Intel driver.

Intel SSD Data Center Tool Installation:

# esxcli software vib install -v /intel_ssd_data_center_tool-3.0.4-400.vib
[DependencyError]
VIB Intel_bootbank_intel_ssd_data_center_tool_3.0.4-400's acceptance level is community, which is not compliant with the ImageProfile acceptance level partner
To change the host acceptance level, use the 'esxcli software acceptance set' command.
Please refer to the log file for more details.
# esxcli software acceptance set --level=CommunitySupported
Host acceptance level changed to 'CommunitySupported'.
# esxcli software vib install -v /intel_ssd_data_center_tool-3.0.4-400.vib
Installation Result
   Message: Operation finished successfully.
   Reboot Required: false
   VIBs Installed: Intel_bootbank_intel_ssd_data_center_tool_3.0.4-400
   VIBs Removed:
   VIBs Skipped:
# /opt/intel/isdct/isdct show -intelssd
No results

How to use an Optane SSD with VMware ESXi?

Datastore

The most common use for any type of disk is as a datastore for virtual machines. However, you might want to wait until Optane drives with more capacity are available. It doesn't make sense to have a 32GB datastore.

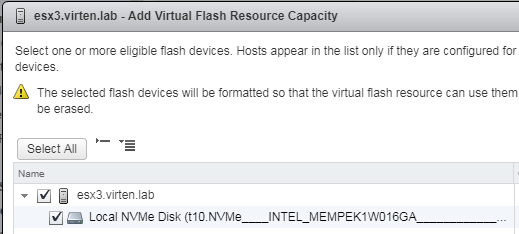

Read Cache

Optane drives offer great read capabilities, so vSphere Flash Read Cache is a good place to use them.

ESX > Configure > Virtual Flash > Virtual Flash Resource Management > Add Capacity...

Virtual SAN

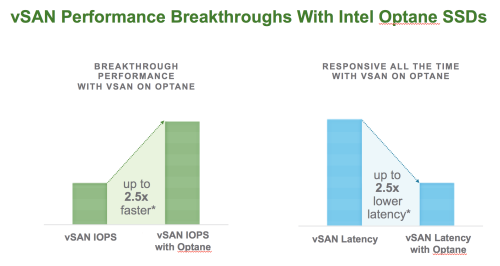

The Intel Optane SSD DC P4800X, which is the first available enterprise grade Optane SSD, is fully supported with vSAN and makes a great Cache device for Hybrid or All-Flash deployments. VMware has also published an article about how Optane technology can increase vSAN performance: vSAN Got a 2.5x Performance Increase: Thank You Intel Optane.