Many VMware homelabs are built on Intel NUCs, and it is very common to mix NUC generations. Mixing generations can lead to compatibility issues when you try to vMotion VMs between hosts with different CPU generations. This is typically where VMware EVC (Enhanced vMotion Compatibility) comes into play.

VMware EVC creates a baseline of CPU instructions for virtual machines running on ESXi hosts. When you add newer hosts, EVC hides the new CPU instructions from the virtual machines. While this works well for the Xeon CPUs used in professional servers, it has some limitations with the consumer CPUs used in the Intel NUC ecosystem.
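To make the baseline idea concrete, here is a minimal Python sketch that models an EVC-style baseline as the intersection of the CPU feature sets of all hosts in a cluster. The host names and feature lists are illustrative assumptions, not values read from real hardware, and real EVC modes are predefined per CPU generation rather than computed like this.

```python
from typing import Dict, Set


def common_baseline(host_features: Dict[str, Set[str]]) -> Set[str]:
    """Return the CPU features that every host in the cluster offers."""
    if not host_features:
        return set()
    feature_sets = iter(host_features.values())
    baseline = set(next(feature_sets))
    for features in feature_sets:
        baseline &= features  # keep only features shared by all hosts so far
    return baseline


def hidden_features(host_features: Dict[str, Set[str]], host: str) -> Set[str]:
    """Return the features of `host` that a shared baseline would mask."""
    return host_features[host] - common_baseline(host_features)


# Hypothetical two-host cluster; names and feature sets are made up.
cluster = {
    "older-host": {"sse4.2", "aes", "avx"},
    "newer-host": {"sse4.2", "aes", "avx", "avx2"},
}

print(sorted(common_baseline(cluster)))                # ['aes', 'avx', 'sse4.2']
print(sorted(hidden_features(cluster, "newer-host")))  # ['avx2']
```

In this toy model the newer host loses `avx2` from the guest's point of view, which is the trade-off EVC makes so that a VM can move to the older host without seeing its CPU features change.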

The problem has become worse with the latest 10th Gen Comet Lake/Frost Canyon NUC. Despite having a 10th generation CPU, it requires the EVC baseline to be configured to "Sandy Bridge", which is the 2nd generation of Intel Core-i CPUs:

- NUC10i7FNH/NUC10i7FNK (Intel Core i7-10710U - 6 Core, up to 4.7 GHz)

- NUC10i5FNH/NUC10i5FNK (Intel Core i5-10210U - 4 Core, up to 4.2 GHz)

- NUC10i3FNH/NUC10i3FNK (Intel Core i3-10110U - 2 Core, up to 4.1 GHz)

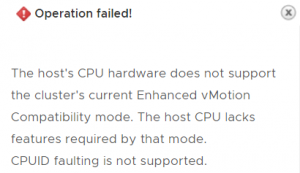

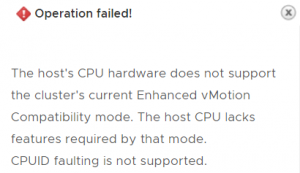

When you try to set the EVC mode to anything higher than Sandy Bridge, the following error message is displayed:

Compatibility

The host's CPU hardware does not support the cluster's current Enhanced vMotion Compatibility mode. The host CPU lacks features required by that mode.

When you try to add the host to an EVC-enabled cluster, the task fails:

Operation failed!

The host's CPU hardware does not support the cluster's current Enhanced vMotion Compatibility mode. The host CPU lacks features required by that mode.

CPUID faulting is not supported.

See KB 1003212 for more information.

Host is of type: vendor intel family 0x6 model 0xa6
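The last line of that message carries the CPU identification the host reports. As a small sketch, the following Python snippet extracts the vendor, family, and model from such a line and converts the hexadecimal values to integers. The function name and the regular expression are assumptions made for illustration; they are not part of any VMware tooling.

```python
import re

# Matches the "vendor ... family 0x.. model 0x.." part of the error line.
HOST_TYPE_PATTERN = re.compile(
    r"vendor\s+(?P<vendor>\w+)\s+"
    r"family\s+(?P<family>0x[0-9a-fA-F]+)\s+"
    r"model\s+(?P<model>0x[0-9a-fA-F]+)"
)


def parse_host_type(line: str) -> dict:
    """Parse a 'Host is of type: ...' line into vendor, family and model."""
    match = HOST_TYPE_PATTERN.search(line)
    if match is None:
        raise ValueError(f"no host type information found in: {line!r}")
    return {
        "vendor": match["vendor"],
        "family": int(match["family"], 16),  # hex string -> integer
        "model": int(match["model"], 16),
    }


info = parse_host_type("Host is of type: vendor intel family 0x6 model 0xa6")
print(info)  # {'vendor': 'intel', 'family': 6, 'model': 166}
```

Having the family and model as plain integers makes it easier to compare the values reported by different NUC generations in a mixed cluster.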


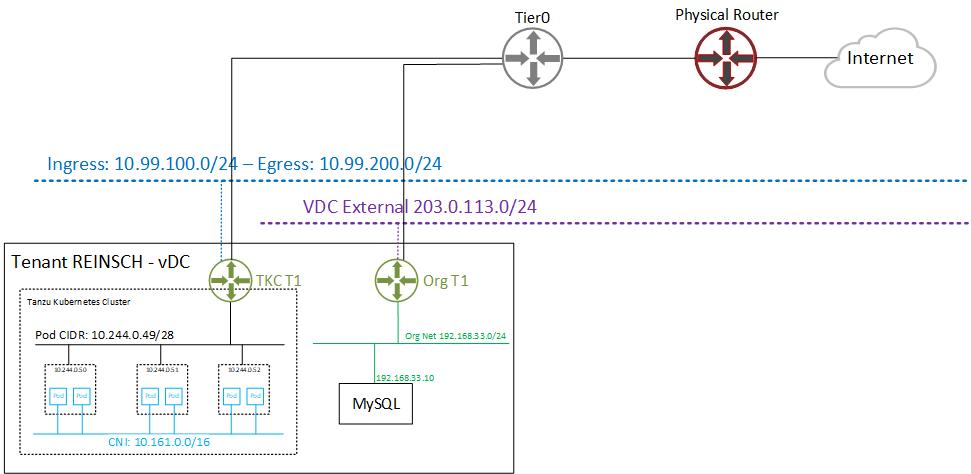

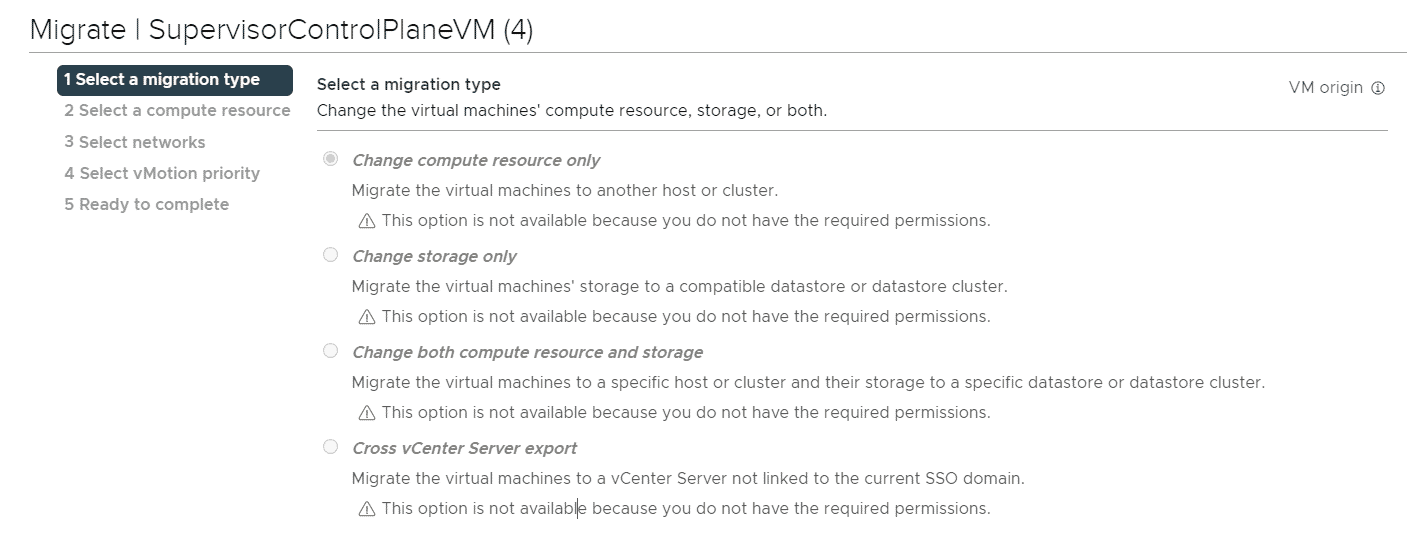

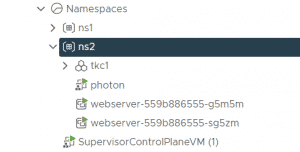

This article explains how you can create virtual machines in Kubernetes namespaces in vSphere with Tanzu. Deploying virtual machines in Kubernetes namespaces using kubectl has been shown in demonstrations but is currently (as of vSphere 7.0 U2) not supported. Creating virtual machines by leveraging the vmoperator is only possible with third-party integrations like TKG.

This article explains how to prepare the vCenter Server Appliance for connections from external PostgreSQL management tools like pgAdmin. This method works with vCenter Server Appliance versions 6.5, 6.7, and 7.0.

ESXi 6.5 changes how USB-connected devices are handled. The legacy drivers, including xhci, ehci-hcd, usb-uhci, and usb-storage, have been replaced with a single USB driver named vmkusb. The new driver has some implications if you are trying to use USB devices such as USB sticks or external hard disks as a VMFS-formatted datastore.
