Part 2 of the "Manage VSAN with RVC" series explains commands related to VSAN cluster administration tasks. These commands gather information about ESXi hosts and the cluster itself. They also provide important information when you want to maintain your VSAN cluster:

- vsan.host_info

- vsan.cluster_info

- vsan.check_limits

- vsan.whatif_host_failures

- vsan.enter_maintenance_mode

- vsan.resync_dashboard

To make the commands easier to read, I created marks for a cluster, a virtual machine and an ESXi host. This allows me to use ~cluster, ~vm and ~esx in my examples:

/localhost/DC> mark cluster ~/computers/VSAN-Cluster/

/localhost/DC> mark vm ~/vms/vma.virten.lab

/localhost/DC> mark esx ~/computers/VSAN-Cluster/hosts/esx1.virten.lab/

Day-to-Day VSAN Cluster Administration

vsan.host_info

Prints VSAN related information about an ESXi host. This command returns information about:

- Cluster role (master, backup or agent)

- Cluster UUID

- Node UUID

- Member UUIDs

- Auto claim (yes or no)

- Disk Mappings: Disks that are claimed by VSAN

- NetworkInfo: VSAN traffic activated vmk adapters

Example 1 - Print VSAN Information:

/localhost/DC> vsan.host_info ~esx

VSAN enabled: yes

Cluster info:

Cluster role: agent

Cluster UUID: 525d9c62-1b87-5577-f76d-6f6d7bb4ba34

Node UUID: 52a61733-bcf1-4c92-6436-001b2193b9a4

Member UUIDs: ["52785959-6bee-21e0-e664-eca86bf99b3f", "52a61831-6060-22d7-c23d-001b2193b3b0", "52a61733-bcf1-4c92-6436-001b2193b9a4", "52aaffa8-d345-3a75-6809-005056bb3032"] (4)

Storage info:

Auto claim: no

Disk Mappings:

SSD: SanDisk_SDSSDP064G - 59 GB

MD: WDC_WD3000HLFS - 279 GB

MD: WDC_WD3000HLFS - 279 GB

MD: WDC_WD3000HLFS - 279 GB

NetworkInfo:

Adapter: vmk1 (192.168.222.21)

vsan.cluster_info

Prints VSAN related information about all ESXi hosts in a cluster. This command provides the same information as vsan.host_info:

- Cluster role (master, backup or agent)

- Cluster UUID

- Node UUID

- Member UUIDs

- Auto claim (yes or no)

- Disk Mappings: Disks that are claimed by VSAN

- NetworkInfo: VSAN traffic activated vmk adapters

Example 1 - Print VSAN Information:

/localhost/DC> vsan.cluster_info ~cluster

Host: esx1.virten.lab

VSAN enabled: yes

Cluster info:

Cluster role: agent

Cluster UUID: 525d9c62-1b87-5577-f76d-6f6d7bb4ba34

Node UUID: 52a61733-bcf1-4c92-6436-001b2193b9a4

Member UUIDs: ["52785959-6bee-21e0-e664-eca86bf99b3f", "52a61831-6060-22d7-c23d-001b2193b3b0", "52a61733-bcf1-4c92-6436-001b2193b9a4", "52aaffa8-d345-3a75-6809-005056bb3032"] (4)

Storage info:

Auto claim: no

Disk Mappings:

SSD: SanDisk_SDSSDP064G - 59 GB

MD: WDC_WD3000HLFS - 279 GB

MD: WDC_WD3000HLFS - 279 GB

MD: WDC_WD3000HLFS - 279 GB

NetworkInfo:

Adapter: vmk1 (192.168.222.21)

Host: esx2.virten.lab

VSAN enabled: yes

Cluster info:

Cluster role: backup

Cluster UUID: 525d9c62-1b87-5577-f76d-6f6d7bb4ba34

Node UUID: 52a61831-6060-22d7-c23d-001b2193b3b0

Member UUIDs: ["52785959-6bee-21e0-e664-eca86bf99b3f", "52a61831-6060-22d7-c23d-001b2193b3b0", "52a61733-bcf1-4c92-6436-001b2193b9a4", "52aaffa8-d345-3a75-6809-005056bb3032"] (4)

Storage info:

Auto claim: no

Disk Mappings:

SSD: SanDisk_SDSSDP064G - 59 GB

MD: WDC_WD3000HLFS - 279 GB

MD: WDC_WD3000HLFS - 279 GB

MD: WDC_WD3000HLFS - 279 GB

NetworkInfo:

Adapter: vmk1 (192.168.222.22)

[...]

vsan.check_limits

Gathers and checks various VSAN related counters like components or disk utilization against their limits. This command can be used against a single ESXi host or a Cluster.

Example 1 - Check VSAN limits:

/localhost/DC> vsan.check_limits ~cluster
2013-12-26 14:21:02 +0000: Gathering stats from all hosts ...
2013-12-26 14:21:05 +0000: Gathering disks info ...
+-----------------+-------------------+------------------------+
| Host            | RDT               | Disks                  |
+-----------------+-------------------+------------------------+
| esx1.virten.lab | Assocs: 51/9800   | Components: 45/750     |
|                 | Sockets: 26/10000 | WDC_WD3000HLFS: 44%    |
|                 | Clients: 4        | WDC_WD3000HLFS: 32%    |
|                 | Owners: 11        | WDC_WD3000HLFS: 28%    |
|                 |                   | SanDisk_SDSSDP064G: 0% |
| esx2.virten.lab | Assocs: 72/9800   | Components: 45/750     |
|                 | Sockets: 24/10000 | WDC_WD3000HLFS: 29%    |
|                 | Clients: 5        | WDC_WD3000HLFS: 31%    |
|                 | Owners: 12        | WDC_WD3000HLFS: 43%    |
|                 |                   | SanDisk_SDSSDP064G: 0% |
| esx3.virten.lab | Assocs: 88/9800   | Components: 45/750     |
|                 | Sockets: 31/10000 | WDC_WD3000HLFS: 42%    |
|                 | Clients: 6        | WDC_WD3000HLFS: 44%    |
|                 | Owners: 9         | WDC_WD3000HLFS: 38%    |
|                 |                   | SanDisk_SDSSDP064G: 0% |
| esx4.virten.lab | Assocs: 62/9800   | Components: 45/750     |
|                 | Sockets: 26/10000 | WDC_WD3000HLFS: 42%    |
|                 | Clients: 3        | WDC_WD3000HLFS: 38%    |
|                 | Owners: 9         | WDC_WD3000HLFS: 31%    |
|                 |                   | SanDisk_SDSSDP064G: 0% |
+-----------------+-------------------+------------------------+
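If you capture the vsan.check_limits output as text, the "used/limit" counters are easy to evaluate in a script. The following Python sketch is only an illustration: the function name find_high_usage, the 80% threshold and the 700/750 value in the sample are my own assumptions, not something RVC provides. It expects the table with one row per line, as RVC prints it.

```python
import re

# Matches "Name: used/limit" pairs such as "Components: 45/750".
PAIR = re.compile(r"(\w+): (\d+)/(\d+)")


def find_high_usage(output, threshold=0.8):
    """Return (host, counter, used, limit) tuples at or above the threshold.

    `output` is the captured text of vsan.check_limits. The helper name and
    the default threshold are hypothetical choices for this sketch.
    """
    hits = []
    host = None
    for line in output.splitlines():
        cells = [c.strip() for c in line.split("|")]
        if len(cells) < 4:
            continue  # border or log line, not a table row
        if cells[1] and cells[1] != "Host":
            host = cells[1]  # first row of a host block carries the name
        for name, used, limit in PAIR.findall(line):
            if int(used) / int(limit) >= threshold:
                hits.append((host, name, int(used), int(limit)))
    return hits


# Shortened sample; the 700/750 value is invented to trigger a hit.
sample = """\
| Host            | RDT               | Disks                  |
| esx1.virten.lab | Assocs: 51/9800   | Components: 45/750     |
|                 | Sockets: 26/10000 | WDC_WD3000HLFS: 44%    |
| esx2.virten.lab | Assocs: 72/9800   | Components: 700/750    |
"""

print(find_high_usage(sample))
```

Percent-only values such as the per-disk utilization are ignored here because they carry no explicit limit.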

vsan.whatif_host_failures

Simulates how host failures would impact VSAN resource usage. The command shows the current VSAN disk usage and the calculated disk usage after a host has failed. The simulation assumes that all objects would be brought back to full policy compliance by bringing up new mirrors of existing data.

The command has 2 options:

- --num-host-failures-to-simulate, -n: Number of host failures to simulate. This option is not yet implemented.

- --show-current-usage-per-host, -s: Displays a utilization overview of all hosts.

Example 1 - Simulate 1 host failure:

/localhost/DC> vsan.whatif_host_failures ~cluster
Simulating 1 host failures:
+-----------------+-----------------------------+-----------------------------------+
| Resource        | Usage right now             | Usage after failure/re-protection |
+-----------------+-----------------------------+-----------------------------------+
| HDD capacity    | 7% used (1128.55 GB free)   | 15% used (477.05 GB free)         |
| Components      | 2% used (2025 available)    | 3% used (1275 available)          |
| RC reservations | 0% used (90.47 GB free)     | 0% used (48.73 GB free)           |
+-----------------+-----------------------------+-----------------------------------+

Example 2 - Show utilization and simulate 1 host failure:

/localhost/DC> vsan.whatif_host_failures -s ~cluster
Current utilization of hosts:
+------------+---------+--------------+------+----------+----------------+--------------+
|            |         | HDD Capacity |      |          | Components     | SSD Capacity |
| Host       | NumHDDs | Total        | Used | Reserved | Used           | Reserved     |
+------------+---------+--------------+------+----------+----------------+--------------+
| 10.0.0.1   | 2       | 299.50 GB    | 6 %  | 5 %      | 4/562 (1 %)    | 0 %          |
| 10.0.0.2   | 2       | 299.50 GB    | 10 % | 9 %      | 11/562 (2 %)   | 0 %          |
| 10.0.0.3   | 2       | 299.50 GB    | 10 % | 9 %      | 6/562 (1 %)    | 0 %          |
| 10.0.0.4   | 2       | 299.50 GB    | 14 % | 13 %     | 7/562 (1 %)    | 0 %          |
+------------+---------+--------------+------+----------+----------------+--------------+
Simulating 1 host failures:
+-----------------+-----------------------------+-----------------------------------+
| Resource        | Usage right now             | Usage after failure/re-protection |
+-----------------+-----------------------------+-----------------------------------+
| HDD capacity    | 10% used (1079.73 GB free)  | 13% used (780.23 GB free)         |
| Components      | 1% used (2220 available)    | 2% used (1658 available)          |
| RC reservations | 0% used (55.99 GB free)     | 0% used (41.99 GB free)           |
+-----------------+-----------------------------+-----------------------------------+
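To get a feeling for where the "after failure" numbers come from, the HDD capacity row of Example 2 can be approximated by hand. The Python sketch below is a rough back-of-the-envelope model and not RVC's actual algorithm: it assumes the total amount of used data stays the same and simply has to fit on the remaining hosts. The function name usage_after_failure and the choice of 10.0.0.4 as the failed host are my own assumptions.

```python
def usage_after_failure(hosts, failed):
    """Estimate HDD usage once `failed` is gone and all data is re-protected.

    `hosts` maps a host name to (capacity_gb, used_gb). Simplifying
    assumption: the used data keeps its size and moves to the survivors.
    Returns (percent_used, free_gb).
    """
    total_used = sum(used for _, used in hosts.values())
    remaining = sum(cap for name, (cap, _) in hosts.items() if name != failed)
    return 100.0 * total_used / remaining, remaining - total_used


# Capacity and rounded "Used" percentages taken from the utilization table.
hosts = {
    "10.0.0.1": (299.50, 299.50 * 0.06),
    "10.0.0.2": (299.50, 299.50 * 0.10),
    "10.0.0.3": (299.50, 299.50 * 0.10),
    "10.0.0.4": (299.50, 299.50 * 0.14),
}

pct, free = usage_after_failure(hosts, "10.0.0.4")
print(f"{pct:.0f}% used ({free:.2f} GB free)")
```

This lands at roughly 13% used and about 779 GB free, which is close to the 13% and 780.23 GB that RVC reports. The small difference is expected because the input percentages in the table are already rounded.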

vsan.enter_maintenance_mode

Puts the host into maintenance mode. This command is VSAN aware and can migrate VSAN data to other hosts like the vSphere Web Client does. It also migrates running virtual machines when DRS is enabled.

The command has 4 options:

- --timeout, -t: Sets a timeout for the process to complete. When the host cannot enter maintenance mode within the given number of seconds, the process is canceled. (Default: 0)

- --evacuate-powered-off-vms, -e: Moves powered off virtual machines to other hosts in the cluster.

- --no-wait, -n: The command returns immediately without waiting for the task to complete.

- --vsan-mode, -v: Actions to take for VSAN components (Options: ensureObjectAccessibility (default), evacuateAllData, noAction)

Example 1 - Put the host into maintenance mode with the default VSAN mode (ensureObjectAccessibility). Only the data needed to keep objects accessible is moved (fast, but with reduced redundancy):

/localhost/DC> vsan.enter_maintenance_mode ~esx

EnterMaintenanceMode esx1.virten.lab: success

Example 2 - Put the host into maintenance mode. Copy all VSAN components to other hosts in the cluster:

/localhost/DC> vsan.enter_maintenance_mode ~esx -v evacuateAllData

EnterMaintenanceMode esx1.virten.lab: success

Example 3 - Put the host into maintenance mode. Copy all VSAN components to other hosts in the cluster. Cancel the process when it takes longer than 10 minutes:

/localhost/DC> vsan.enter_maintenance_mode ~esx -v evacuateAllData -t 600

EnterMaintenanceMode esx1.virten.lab: success

Example 4 - Put the host into maintenance mode. Do not track the process (batch mode):

/localhost/DC> vsan.enter_maintenance_mode ~esx -n

/localhost/DC>
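When maintenance is scripted for several hosts, it helps to build the command line in one place and to reject an invalid VSAN mode before anything is sent to RVC. The following Python sketch only assembles the command string from the four options listed above. The helper name maintenance_command is my own, and how the string is passed to RVC is deliberately left open.

```python
# The three values accepted by --vsan-mode, as listed in the options above.
VSAN_MODES = ("ensureObjectAccessibility", "evacuateAllData", "noAction")


def maintenance_command(host, vsan_mode=None, timeout=None,
                        evacuate_powered_off=False, no_wait=False):
    """Build a vsan.enter_maintenance_mode command line (hypothetical helper).

    `host` is an RVC path or mark such as "~esx". Options left at their
    defaults are omitted so that RVC applies its own defaults.
    """
    if vsan_mode is not None and vsan_mode not in VSAN_MODES:
        raise ValueError(f"unknown VSAN mode: {vsan_mode!r}")
    parts = ["vsan.enter_maintenance_mode", host]
    if vsan_mode is not None:
        parts += ["-v", vsan_mode]
    if timeout:
        parts += ["-t", str(int(timeout))]
    if evacuate_powered_off:
        parts.append("-e")
    if no_wait:
        parts.append("-n")
    return " ".join(parts)


# Reproduces the command from Example 3.
print(maintenance_command("~esx", vsan_mode="evacuateAllData", timeout=600))
```

Checking the mode up front avoids starting a long-running evacuation with a mistyped option.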

vsan.resync_dashboard

This command shows you what is happening when a mirror resync is in progress. If a host fails or is put into maintenance mode, you should watch the resync status here. The command can be run once or with a refresh interval (--refresh-rate, -r).

Example 1 - Resync Dashboard where nothing happens:

/localhost/DC> vsan.resync_dashboard ~cluster
2013-12-27 20:05:56 +0000: Querying all VMs on VSAN ...
2013-12-27 20:05:56 +0000: Querying all objects in the system from esx1.virten.lab ...
2013-12-27 20:05:56 +0000: Got all the info, computing table ...
+-----------+-----------------+---------------+
| VM/Object | Syncing objects | Bytes to sync |
+-----------+-----------------+---------------+
+-----------+-----------------+---------------+
| Total     | 0               | 0.00 GB       |
+-----------+-----------------+---------------+

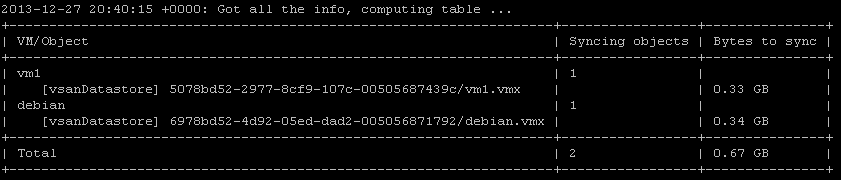

Example 2 - Resync Dashboard after a host has been put into maintenance mode. Refreshed every 10 seconds:

/localhost/DC> vsan.resync_dashboard ~cluster --refresh-rate 10
2013-12-27 20:39:46 +0000: Querying all VMs on VSAN ...
2013-12-27 20:39:46 +0000: Querying all objects in the system from esx1.virten.lab ...
2013-12-27 20:39:47 +0000: Got all the info, computing table ...
+-----------+-----------------+---------------+
| VM/Object | Syncing objects | Bytes to sync |
+-----------+-----------------+---------------+
+-----------+-----------------+---------------+
| Total     | 0               | 0.00 GB       |
+-----------+-----------------+---------------+
2013-12-27 20:39:57 +0000: Querying all objects in the system from esx1.virten.lab ...
2013-12-27 20:39:59 +0000: Got all the info, computing table ...
+-----------------------------------------------------------------+-----------------+---------------+
| VM/Object                                                       | Syncing objects | Bytes to sync |
+-----------------------------------------------------------------+-----------------+---------------+
| vm1                                                             | 1               |               |
|    [vsanDatastore] 5078bd52-2977-8cf9-107c-00505687439c/vm1.vmx |                 | 0.17 GB       |
+-----------------------------------------------------------------+-----------------+---------------+
| Total                                                           | 1               | 0.17 GB       |
+-----------------------------------------------------------------+-----------------+---------------+
2013-12-27 20:40:14 +0000: Querying all objects in the system from esx1.virten.lab ...
2013-12-27 20:40:17 +0000: Got all the info, computing table ...
+--------------------------------------------------------------------+-----------------+---------------+
| VM/Object                                                          | Syncing objects | Bytes to sync |
+--------------------------------------------------------------------+-----------------+---------------+
| vm1                                                                | 1               |               |
|    [vsanDatastore] 5078bd52-2977-8cf9-107c-00505687439c/vm1.vmx    |                 | 0.34 GB       |
| debian                                                             | 1               |               |
|    [vsanDatastore] 6978bd52-4d92-05ed-dad2-005056871792/debian.vmx |                 | 0.35 GB       |
+--------------------------------------------------------------------+-----------------+---------------+
| Total                                                              | 2               | 0.69 GB       |
+--------------------------------------------------------------------+-----------------+---------------+
[...]
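If you want to track the resync progress from a script, the "Total" row is the easiest part of the output to evaluate. The following Python sketch assumes you have already captured the dashboard output as text; how you capture it is left open. The function name resync_totals is hypothetical. With a refresh rate set, the output contains one table per refresh, so the sketch returns the values of the most recent one.

```python
import re

# Matches the summary row, e.g. "| Total   | 2   | 0.69 GB   |".
TOTAL_ROW = re.compile(r"\|\s*Total\s*\|\s*(\d+)\s*\|\s*([\d.]+) GB\s*\|")


def resync_totals(output):
    """Return (syncing_objects, gb_to_sync) from the last Total row.

    Returns None when the text contains no Total row at all.
    """
    matches = TOTAL_ROW.findall(output)
    if not matches:
        return None
    objects, gigabytes = matches[-1]
    return int(objects), float(gigabytes)


# Two refreshes, shortened from Example 2.
sample = """\
| Total     | 0               | 0.00 GB       |
2013-12-27 20:40:17 +0000: Got all the info, computing table ...
| vm1       | 1               |               |
| Total     | 2               | 0.69 GB       |
"""

print(resync_totals(sample))
```

A monitoring loop could poll until the first value drops back to 0 before it continues with the next host.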

Manage VSAN with RVC Series

- Manage VSAN with RVC Part 1 – Basic Configuration Tasks

- Manage VSAN with RVC Part 2 – VSAN Cluster Administration

- Manage VSAN with RVC Part 3 – Object Management

- Manage VSAN with RVC Part 4 – Troubleshooting

- Manage VSAN with RVC Part 5 – Observer


I love the time and effort you've put into VSAN. Great RVC and VSAN articles. Keep it up.

Rawlinson :razz:

Hi Rawlinson,

Can I get the "vsan.resync_dashboard" output from a Perl or Python script? Can you give me an example of how to do this?

Any help would be appreciated.

My email: trungtien@longvan.net