This is a comprehensive guide to managing your VMware Virtual SAN with the Ruby vSphere Console (RVC). RVC is an interactive command-line tool to control your platform. If you are new to RVC, make sure to read the Getting Started with Ruby vSphere Console Guide. All commands are from the latest vSphere 5.5 Update 2 release.

- Preparation

- Basic Configuration

- Cluster Administration

- Object Management

- Troubleshooting

- VSAN Observer

Preparation

To make the commands easier to read, I created marks for a cluster, a virtual machine and an ESXi host. This allows me to use ~cluster, ~vm and ~esx in my examples:

/localhost/DC> mark cluster ~/computers/VSAN-Cluster/

/localhost/DC> mark vm ~/vms/vma.virten.local

/localhost/DC> mark esx ~/computers/VSAN-Cluster/hosts/esx1.virten.local/

Basic Configuration

vsan.enable_vsan_on_cluster

Enables VSAN on a cluster. When VSAN is already enabled you can also use this command to enable/disable auto claim. When not specified, auto claim is enabled by default.

usage: enable_vsan_on_cluster [opts] cluster

Enable VSAN on a cluster

cluster: Path to a ClusterComputeResource

--disable-storage-auto-claim, -d: Disable auto disk-claim

--help, -h: Show this message

Example 1 - Enable VSAN on a Cluster:

/localhost/DC> vsan.enable_vsan_on_cluster ~cluster

ReconfigureComputeResource VSAN-Cluster: success

esx1.virten.local: success

esx2.virten.local: success

esx3.virten.local: success

Example 2 - Enable VSAN on a cluster with auto claim disabled, or disable auto claim on a VSAN-enabled cluster:

/localhost/DC> vsan.enable_vsan_on_cluster --disable-storage-auto-claim ~cluster

ReconfigureComputeResource VSAN-Cluster: success

esx1.virten.local: success

esx2.virten.local: success

esx3.virten.local: success

vsan.disable_vsan_on_cluster

Disables VSAN on a cluster. VMware HA must be disabled before Virtual SAN can be disabled. This command makes the vsanDatastore, including all virtual machines stored on it, unavailable. It does not delete any data.

/localhost/DC> vsan.disable_vsan_on_cluster --help

usage: disable_vsan_on_cluster cluster

Disable VSAN on a cluster

cluster: Path to a ClusterComputeResource

--help, -h: Show this message

Example 1 - Disable VSAN on an HA-enabled cluster:

/localhost/DC> cluster.configure_ha --disabled ~cluster

ReconfigureComputeResource VSAN-Cluster: success

esx1.virten.local: success

esx2.virten.local: success

esx3.virten.local: success

/localhost/DC> vsan.disable_vsan_on_cluster ~cluster

ReconfigureComputeResource VSAN-Cluster: success

esx1.virten.local: success

esx2.virten.local: success

esx3.virten.local: success

vsan.cluster_change_autoclaim

This command enables or disables auto-claim. With auto-claim enabled all unused disks are automatically consumed by Virtual SAN.

usage: cluster_change_autoclaim [opts] cluster

Enable VSAN on a cluster

cluster: Path to a ClusterComputeResource

--enable, -e: Enable auto-claim

--disable, -d: Disable auto-claim

--help, -h: Show this message

Example 1 - Enable auto-claim:

/localhost/DC> vsan.cluster_change_autoclaim --enable ~cluster

ReconfigureComputeResource VSAN-Cluster: success

esx2.virten.local: success

esx3.virten.local: success

esx1.virten.local: success

Example 2 - Disable auto-claim:

/localhost/DC> vsan.cluster_change_autoclaim --disable ~cluster

ReconfigureComputeResource VSAN-Cluster: success

esx2.virten.local: success

esx3.virten.local: success

esx1.virten.local: success

vsan.apply_license_to_cluster

Applies a Virtual SAN license key to a Cluster. This command also triggers a Null-Reconfigure on all hosts to ensure that all disks are claimed when auto-claim is enabled.

usage: apply_license_to_cluster [opts] cluster

Apply license to VSAN

cluster: Path to a ClusterComputeResource

--license-key, -k <s>: License key to be applied to the cluster

--null-reconfigure, -r: (default: true)

--help, -h: Show this message

Example 1 - Apply a VSAN license and force auto-claim:

/localhost/DC> vsan.apply_license_to_cluster -k 00000-00000-00000-00000-00000 ~cluster

VSAN-Cluster: Applying VSAN License on the cluster...

VSAN-Cluster: Null-Reconfigure to force auto-claim...

ReconfigureComputeResource VSAN-Cluster: success

esx1.virten.local: success

esx2.virten.local: success

esx3.virten.local: success

Example 2 - Replace a VSAN license key:

/localhost/DC> vsan.apply_license_to_cluster -r -k 00000-00000-00000-00000-00000 ~cluster

VSAN-Cluster: Applying VSAN License on the cluster...

vsan.host_consume_disks

Consumes all eligible disks on a host. When you have multiple SSDs you can specify the appropriate SSD by its model.

usage: host_consume_disks [opts] host_or_cluster...

Consumes all eligible disks on a host

host_or_cluster: Path to a ComputeResource or HostSystem

--filter-ssd-by-model, -f <s>: Regex to apply as ssd model filter

--help, -h: Show this message

Example 1 - Identify SSD Model description and create a disk group. This is required when you have more than one SSD:

/localhost/DC> esxcli.execute ~esx storage core device list

[...]

IsSSD: true

Model: "SanDisk SDSSDP06"

[...]

/localhost/DC> vsan.host_consume_disks ~esx --filter-ssd-by-model "SanDisk SDSSDP06"

AddDisks esx1.virten.local: success

Example 2 - Add all eligible disks to a new or existing disk group:

/localhost/DC> vsan.host_consume_disks ~esx

AddDisks esx1.virten.local: success

vsan.cluster_set_default_policy

Sets the default policy on a cluster. This policy is used when an object has no VM Storage Policy defined. A policy defines how objects are stored in Virtual SAN. Available values are:

- hostFailuresToTolerate (Number of failures to tolerate)

- forceProvisioning (If VSAN can't fulfill the policy requirements for an object, it will still deploy it)

- stripeWidth (Number of disk stripes per object)

- cacheReservation (Flash read cache reservation)

- proportionalCapacity (Object space reservation)

Default Policy: (("hostFailuresToTolerate" i1))
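Typing these policy expressions by hand is error-prone because of the nested parentheses and quotes. Here is a minimal Python sketch that assembles such a string from a dictionary. The function name build_policy is my own invention and not part of RVC, and the "i" prefix for integer values is inferred from the policy strings shown in this guide, so treat it as an assumption:

```python
def build_policy(rules):
    """Render a dict of integer rules as a VSAN policy expression string.

    Hypothetical helper: the '("name" iN)' syntax is inferred from the
    policy strings shown in this guide, not from a format specification.
    """
    parts = []
    for name, value in rules.items():
        # Only integer rule values appear in the guide, so reject anything else.
        if isinstance(value, bool) or not isinstance(value, int):
            raise TypeError(f"{name}: only integer values are handled in this sketch")
        parts.append(f'("{name}" i{value})')
    return "(" + " ".join(parts) + ")"


default_policy = build_policy({"hostFailuresToTolerate": 1})
striped_policy = build_policy({"hostFailuresToTolerate": 1, "stripeWidth": 2})
print(default_policy)
print(striped_policy)
```

The resulting string still has to be wrapped in single quotes when it is passed to vsan.cluster_set_default_policy on the RVC prompt.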

usage: cluster_set_default_policy cluster policy

Set default policy on a cluster

cluster: Path to a ClusterComputeResource

policy:

--help, -h: Show this message

Example 1 - Change the default policy to tolerate two host failures:

/localhost/DC> vsan.cluster_set_default_policy ~cluster '(("hostFailuresToTolerate" i2))'

Example 2 - Determine the current default policy from an existing host and add a rule:

/localhost/DC> esxcli.execute ~esx vsan policy getdefault

+--------------+----------------------------------------------------------+

| Policy Class | Policy Value |

+--------------+----------------------------------------------------------+

| cluster | (("hostFailuresToTolerate" i1)) |

| vdisk | (("hostFailuresToTolerate" i1)) |

| vmnamespace | (("hostFailuresToTolerate" i1)) |

| vmswap | (("hostFailuresToTolerate" i1) ("forceProvisioning" i1)) |

+--------------+----------------------------------------------------------+

/localhost/DC> vsan.cluster_set_default_policy ~cluster '(("hostFailuresToTolerate" i1) ("stripeWidth" i2))'

vsan.host_wipe_vsan_disks

Deletes the content of all VSAN-enabled disks on a host. When the command is used without the --force option, it only reports what it would wipe.

usage: host_wipe_vsan_disks [opts] host...

Wipes content of all VSAN disks on a host

host: Path to a HostSystem

--force, -f: Apply force

--help, -h: Show this message

Example 1 - Run the command in dry mode:

/localhost/DC> vsan.host_wipe_vsan_disks ~esx

Would wipe disk WDC_WD1500HLFS (ATA WDC WD1500HLFS-0, ssd = false)

Would wipe disk WDC_WD1500HLFS (ATA WDC WD1500HLFS-0, ssd = false)

Would wipe disk WDC_WD1500HLFS (ATA WDC WD1500HLFS-0, ssd = false)

Would wipe disk SanDisk_SDSSDP064G (ATA SanDisk SDSSDP06, ssd = true)

NO ACTION WAS TAKEN. Use --force to actually wipe.

CAUTION: Wiping disks means all user data will be destroyed!

Example 2 - Wipe the content of all VSAN disks:

/localhost/DC> vsan.host_wipe_vsan_disks ~esx --force

RemoveDiskMapping esx1.virten.local: success

Cluster Administration

vsan.host_info

Prints VSAN-related information about an ESXi host. This command returns information about:

- Cluster role (master, backup or agent)

- Cluster UUID

- Node UUID

- Member UUIDs

- Auto claim (yes or no)

- Disk Mappings: Disks that are claimed by VSAN

- NetworkInfo: VSAN traffic activated vmk adapters

usage: host_info host

Print VSAN info about a host

host: Path to a HostSystem

--help, -h: Show this message

Example 1 - Print VSAN host Information:

/localhost/DC> vsan.host_info ~esx

VSAN enabled: yes

Cluster info:

Cluster role: agent

Cluster UUID: 525d9c62-1b87-5577-f76d-6f6d7bb4ba34

Node UUID: 52a61733-bcf1-4c92-6436-001b2193b9a4

Member UUIDs: ["52785959-6bee-21e0-e664-eca86bf99b3f", "52a61831-6060-22d7-c23d-001b2193b3b0", "52a61733-bcf1-4c92-6436-001b2193b9a4", "52aaffa8-d345-3a75-6809-005056bb3032"] (4)

Storage info:

Auto claim: no

Disk Mappings:

SSD: SanDisk_SDSSDP064G - 59 GB

MD: WDC_WD3000HLFS - 279 GB

MD: WDC_WD3000HLFS - 279 GB

MD: WDC_WD3000HLFS - 279 GB

NetworkInfo:

Adapter: vmk1 (192.168.222.21)

vsan.cluster_info

Prints VSAN related information about all ESXi hosts. This command provides the same information as vsan.host_info:

- Cluster role (master, backup or agent)

- Cluster UUID

- Node UUID

- Member UUIDs

- Auto claim (yes or no)

- Disk Mappings: Disks that are claimed by VSAN

- NetworkInfo: VSAN traffic activated vmk adapters

usage: cluster_info cluster

Print VSAN info about a cluster

cluster: Path to a ClusterComputeResource

--help, -h: Show this message

Example 1 - Print VSAN-related information from all hosts in a VSAN-enabled cluster:

/localhost/DC> vsan.cluster_info ~cluster

Host: esx1.virten.local

VSAN enabled: yes

Cluster info:

Cluster role: agent

Cluster UUID: 525d9c62-1b87-5577-f76d-6f6d7bb4ba34

Node UUID: 52a61733-bcf1-4c92-6436-001b2193b9a4

Member UUIDs: ["52785959-6bee-21e0-e664-eca86bf99b3f", "52a61831-6060-22d7-c23d-001b2193b3b0", "52a61733-bcf1-4c92-6436-001b2193b9a4", "52aaffa8-d345-3a75-6809-005056bb3032"] (4)

Storage info:

Auto claim: no

Disk Mappings:

SSD: SanDisk_SDSSDP064G - 59 GB

MD: WDC_WD3000HLFS - 279 GB

MD: WDC_WD3000HLFS - 279 GB

MD: WDC_WD3000HLFS - 279 GB

NetworkInfo:

Adapter: vmk1 (192.168.222.21)

Host: esx2.virten.local

VSAN enabled: yes

Cluster info:

Cluster role: backup

Cluster UUID: 525d9c62-1b87-5577-f76d-6f6d7bb4ba34

Node UUID: 52a61831-6060-22d7-c23d-001b2193b3b0

Member UUIDs: ["52785959-6bee-21e0-e664-eca86bf99b3f", "52a61831-6060-22d7-c23d-001b2193b3b0", "52a61733-bcf1-4c92-6436-001b2193b9a4", "52aaffa8-d345-3a75-6809-005056bb3032"] (4)

Storage info:

Auto claim: no

Disk Mappings:

SSD: SanDisk_SDSSDP064G - 59 GB

MD: WDC_WD3000HLFS - 279 GB

MD: WDC_WD3000HLFS - 279 GB

MD: WDC_WD3000HLFS - 279 GB

NetworkInfo:

Adapter: vmk1 (192.168.222.22)

[...]

vsan.check_limits

Gathers and checks various VSAN related counters like components or disk utilization against their limits. This command can be used against a single ESXi host or a Cluster.

usage: check_limits hosts_and_clusters...

Gathers (and checks) counters against limits

hosts_and_clusters: Path to a HostSystem or ClusterComputeResource

--help, -h: Show this message

Example 1 - Check VSAN limits from all hosts in a VSAN-enabled cluster:

/localhost/DC> vsan.check_limits ~cluster

2013-12-26 14:21:02 +0000: Gathering stats from all hosts ...

2013-12-26 14:21:05 +0000: Gathering disks info ...

+-------------------+-------------------+------------------------+

| Host | RDT | Disks |

+-------------------+-------------------+------------------------+

| esx1.virten.local | Assocs: 51/20000 | Components: 45/750 |

| | Sockets: 26/10000 | WDC_WD3000HLFS: 44% |

| | Clients: 4 | WDC_WD3000HLFS: 32% |

| | Owners: 11 | WDC_WD3000HLFS: 28% |

| | | SanDisk_SDSSDP064G: 0% |

| esx2.virten.local | Assocs: 72/20000 | Components: 45/750 |

| | Sockets: 24/10000 | WDC_WD3000HLFS: 29% |

| | Clients: 5 | WDC_WD3000HLFS: 31% |

| | Owners: 12 | WDC_WD3000HLFS: 43% |

| | | SanDisk_SDSSDP064G: 0% |

| esx3.virten.local | Assocs: 88/20000 | Components: 45/750 |

| | Sockets: 31/10000 | WDC_WD3000HLFS: 42% |

| | Clients: 6 | WDC_WD3000HLFS: 44% |

| | Owners: 9 | WDC_WD3000HLFS: 38% |

| | | SanDisk_SDSSDP064G: 0% |

+-------------------+-------------------+------------------------+
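If you capture this output in a script, the "used/limit" pairs are easy to turn into percentages. The following Python sketch is only an illustration: the function name, the regular expression and the 80 % warning threshold are my own assumptions and not something RVC provides.

```python
import re

# Matches counters printed as "Name: used/limit", e.g. "Components: 45/750".
COUNTER = re.compile(r"(\w+): (\d+)/(\d+)")


def counter_usage(text, threshold_pct=80.0):
    """Return (name, used, limit, pct, over_threshold) for each counter in text.

    threshold_pct is a hypothetical warning level chosen for this sketch.
    """
    results = []
    for name, used, limit in COUNTER.findall(text):
        pct = 100 * int(used) / int(limit)
        results.append((name, int(used), int(limit), pct, pct >= threshold_pct))
    return results


row = "| esx1.virten.local | Assocs: 51/20000 | Components: 45/750 |"
usage = counter_usage(row)
for name, used, limit, pct, warn in usage:
    print(f"{name}: {used}/{limit} = {pct:.2f}%{' WARNING' if warn else ''}")
```

Counters without a limit, such as Clients and Owners, and the per-disk percentages are ignored by this pattern.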

vsan.whatif_host_failures

Simulates how host failures would impact VSAN resource usage. The command shows the current VSAN disk usage and the calculated disk usage after a host has failed. The simulation assumes that all objects would be brought back to full policy compliance by bringing up new mirrors of existing data.

usage: whatif_host_failures [opts] hosts_and_clusters...

Simulates how host failures impact VSAN resource usage

The command shows current VSAN disk usage, but also simulates how

disk usage would evolve under a host failure. Concretely the simulation

assumes that all objects would be brought back to full policy

compliance by bringing up new mirrors of existing data.

The command makes some simplifying assumptions about disk space

balance in the cluster. It is mostly intended to do a rough estimate

if a host failure would drive the cluster to being close to full.

hosts_and_clusters: Path to a HostSystem or ClusterComputeResource

--num-host-failures-to-simulate, -n <i>: Number of host failures to simulate (default: 1)

--show-current-usage-per-host, -s: Show current resources used per host

--help, -h: Show this message

Example 1 - Simulate 1 host failure:

/localhost/DC> vsan.whatif_host_failures ~cluster

Simulating 1 host failures:

+-----------------+-----------------------------+-----------------------------------+

| Resource | Usage right now | Usage after failure/re-protection |

+-----------------+-----------------------------+-----------------------------------+

| HDD capacity | 7% used (1128.55 GB free) | 15% used (477.05 GB free) |

| Components | 2% used (2025 available) | 3% used (1275 available) |

| RC reservations | 0% used (90.47 GB free) | 0% used (48.73 GB free) |

+-----------------+-----------------------------+-----------------------------------+

Example 2 - Show utilization and simulate 1 host failure:

/localhost/DC> vsan.whatif_host_failures -s ~cluster

Current utilization of hosts:

+------------+---------+--------------+------+----------+----------------+--------------+

| | | HDD Capacity | | | Components | SSD Capacity |

| Host | NumHDDs | Total | Used | Reserved | Used | Reserved |

+------------+---------+--------------+------+----------+----------------+--------------+

| 10.0.0.1 | 2 | 299.50 GB | 6 % | 5 % | 4/562 (1 %) | 0 % |

| 10.0.0.2 | 2 | 299.50 GB | 10 % | 9 % | 11/562 (2 %) | 0 % |

| 10.0.0.3 | 2 | 299.50 GB | 10 % | 9 % | 6/562 (1 %) | 0 % |

| 10.0.0.4 | 2 | 299.50 GB | 14 % | 13 % | 7/562 (1 %) | 0 % |

+------------+---------+--------------+------+----------+----------------+--------------+

Simulating 1 host failures:

+-----------------+-----------------------------+-----------------------------------+

| Resource | Usage right now | Usage after failure/re-protection |

+-----------------+-----------------------------+-----------------------------------+

| HDD capacity | 10% used (1079.73 GB free) | 13% used (780.23 GB free) |

| Components | 1% used (2220 available) | 2% used (1658 available) |

| RC reservations | 0% used (55.99 GB free) | 0% used (41.99 GB free) |

+-----------------+-----------------------------+-----------------------------------+
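The HDD capacity row of Example 2 can be reproduced with simple arithmetic: the data that is in use today has to fit on the capacity that remains once one host is gone. The Python sketch below shows that calculation. It is a simplified model that I derived from the numbers above, not RVC's actual implementation, and the function name is made up for illustration:

```python
def simulate_host_failure(host_capacities_gb, free_now_gb, failed_host=0):
    """Rough what-if estimate: all used data must fit on the surviving hosts.

    Simplifying assumption (mine, not RVC's code): the used capacity stays
    the same and the failed host's capacity is removed from the total.
    """
    total = sum(host_capacities_gb)
    used = total - free_now_gb
    remaining = total - host_capacities_gb[failed_host]
    return {
        "used_pct_now": 100 * used / total,
        "used_pct_after": 100 * used / remaining,
        "free_after_gb": remaining - used,
    }


# Four hosts with 299.50 GB each and 1079.73 GB free, as in Example 2.
result = simulate_host_failure([299.50] * 4, free_now_gb=1079.73)
print(f"{result['used_pct_now']:.0f}% used now")
print(f"{result['used_pct_after']:.0f}% used "
      f"({result['free_after_gb']:.2f} GB free) after failure")
```

With these inputs the sketch arrives at 10% used now and 13% used (780.23 GB free) after the failure, which matches the table. The Components row follows the same pattern: 4 x 562 minus 28 used components leaves 2220 available, and 3 x 562 minus 28 leaves 1658.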

vsan.enter_maintenance_mode

Puts a host into maintenance mode. This command is VSAN-aware and can migrate VSAN data to other hosts, just like the vSphere Web Client does. It also migrates running virtual machines when DRS is enabled.

usage: enter_maintenance_mode [opts] host...

Put hosts into maintenance mode

host: Path to a HostSystem

--timeout, -t <i>: Timeout (default: 0)

--evacuate-powered-off-vms, -e: Evacuate powered off vms

--no-wait, -n: Don't wait for Task to complete

--vsan-mode, -v <s>: Actions to take for VSAN backed

storage (default: ensureObjectAccessibility)

--help, -h: Show this message

Further explanation of the 4 options:

- timeout, -t: Sets a timeout for the process to complete. When the host cannot enter maintenance mode within the given number of seconds, the process is canceled.

- evacuate-powered-off-vms, -e: Moves powered off virtual machines to other hosts in the cluster.

- no-wait, -n: The command returns immediately without waiting for the task to complete.

- vsan-mode, -v: Actions to take for VSAN components. Options:

- ensureObjectAccessibility (default)

- evacuateAllData

- noAction

Example 1 - Put the host into maintenance mode. Do not copy any VSAN components (fast, but with reduced redundancy):

/localhost/DC> vsan.enter_maintenance_mode ~esx

EnterMaintenanceMode esx1.virten.local: success

Example 2 - Put the host into maintenance mode. Copy all VSAN components to other hosts in the cluster:

/localhost/DC> vsan.enter_maintenance_mode ~esx -v evacuateAllData

EnterMaintenanceMode esx1.virten.local: success

Example 3 - Put the host into maintenance mode. Copy all VSAN components to other hosts in the cluster. Cancel the process when it takes longer than 10 minutes:

/localhost/DC> vsan.enter_maintenance_mode ~esx -v evacuateAllData -t 600

EnterMaintenanceMode esx1.virten.local: success

Example 4 - Put the host into maintenance mode. Do not track the process (batch mode):

/localhost/DC> vsan.enter_maintenance_mode ~esx -n

/localhost/DC>

vsan.resync_dashboard

This command shows what happens when a mirror resync is in progress. If a host fails or is going into maintenance mode, you should watch the resync status here. The command can be run once or with a refresh interval.

usage: resync_dashboard [opts] cluster_or_host

Resyncing dashboard

cluster_or_host: Path to a ClusterComputeResource or HostSystem

--refresh-rate, -r <i>: Refresh interval (in sec). Default is no refresh

--help, -h: Show this message

Example 1 - Resync Dashboard where nothing happens:

/localhost/DC> vsan.resync_dashboard ~cluster

2013-12-27 20:05:56 +0000: Querying all VMs on VSAN ...

2013-12-27 20:05:56 +0000: Querying all objects in the system from esx1.virten.lab ...

2013-12-27 20:05:56 +0000: Got all the info, computing table ...

+-----------+-----------------+---------------+

| VM/Object | Syncing objects | Bytes to sync |

+-----------+-----------------+---------------+

+-----------+-----------------+---------------+

| Total | 0 | 0.00 GB |

+-----------+-----------------+---------------+

Example 2 - Resync Dashboard after a host has been put into maintenance mode. Refreshed every 10 seconds:

/localhost/DC> vsan.resync_dashboard ~cluster --refresh-rate 10

2013-12-27 20:39:46 +0000: Querying all VMs on VSAN ...

2013-12-27 20:39:46 +0000: Querying all objects in the system from esx1.virten.local ...

2013-12-27 20:39:47 +0000: Got all the info, computing table ...

+-----------+-----------------+---------------+

| VM/Object | Syncing objects | Bytes to sync |

+-----------+-----------------+---------------+

+-----------+-----------------+---------------+

| Total | 0 | 0.00 GB |

+-----------+-----------------+---------------+

2013-12-27 20:39:57 +0000: Querying all objects in the system from esx1.virten.local ...

2013-12-27 20:39:59 +0000: Got all the info, computing table ...

+-----------------------------------------------------------------+-----------------+---------------+

| VM/Object | Syncing objects | Bytes to sync |

+-----------------------------------------------------------------+-----------------+---------------+

| vm1 | 1 | |

| [vsanDatastore] 5078bd52-2977-8cf9-107c-00505687439c/vm1.vmx | | 0.17 GB |

+-----------------------------------------------------------------+-----------------+---------------+

| Total | 1 | 0.17 GB |

+-----------------------------------------------------------------+-----------------+---------------+

2013-12-27 20:40:14 +0000: Querying all objects in the system from esx1.virten.local ...

2013-12-27 20:40:17 +0000: Got all the info, computing table ...

+--------------------------------------------------------------------+-----------------+---------------+

| VM/Object | Syncing objects | Bytes to sync |

+--------------------------------------------------------------------+-----------------+---------------+

| vm1 | 1 | |

| [vsanDatastore] 5078bd52-2977-8cf9-107c-00505687439c/vm1.vmx | | 0.34 GB |

| debian | 1 | |

| [vsanDatastore] 6978bd52-4d92-05ed-dad2-005056871792/debian.vmx | | 0.35 GB |

+--------------------------------------------------------------------+-----------------+---------------+

| Total | 2 | 0.69 GB |

+--------------------------------------------------------------------+-----------------+---------------+

[...]

Object Management

vsan.disks_info

Prints information about all physical disks of a host. This command helps to identify backend disks and their state. It also provides hints on why physical disks are ineligible for VSAN. This command returns information about:

- Disk Display Name

- Disk type (SSD or MD)

- RAW Size

- State (inUse, eligible or ineligible)

- Host Adapter (only with -s option)

- Existing partitions

usage: disks_info [opts] host...

Print physical disk info about a host

host: Path to a HostSystem

--show-adapters, -s: Show adapter information

--help, -h: Show this message

Example 1 - Print host disk information:

/localhost/DC> vsan.disks_info ~esx

Disks on host esx1.virten.local:

+-------------------------------------+-------+--------+----------------------------------------+

| DisplayName | isSSD | Size | State |

+-------------------------------------+-------+--------+----------------------------------------+

| WDC_WD3000HLFS | MD | 279 GB | inUse |

| ATA WDC WD3000HLFS-0 | | | |

+-------------------------------------+-------+--------+----------------------------------------+

| WDC_WD3000HLFS | MD | 279 GB | inUse |

| ATA WDC WD3000HLFS-0 | | | |

+-------------------------------------+-------+--------+----------------------------------------+

| WDC_WD3000HLFS | MD | 279 GB | inUse |

| ATA WDC WD3000HLFS-0 | | | |

+-------------------------------------+-------+--------+----------------------------------------+

| SanDisk_SDSSDP064G | SSD | 59 GB | inUse |

| ATA SanDisk SDSSDP06 | | | |

+-------------------------------------+-------+--------+----------------------------------------+

| Local USB (mpx.vmhba32:C0:T0:L0) | MD | 7 GB | ineligible (Existing partitions found |

| USB DISK 2.0 | | | on disk 'mpx.vmhba32:C0:T0:L0'.) |

| | | | |

| | | | Partition table: |

| | | | 5: 0.24 GB, type = vfat |

| | | | 6: 0.24 GB, type = vfat |

| | | | 7: 0.11 GB, type = coredump |

| | | | 8: 0.28 GB, type = vfat |

+-------------------------------------+-------+--------+----------------------------------------+

vsan.disks_stats

Prints stats on all disks in VSAN. The command can be used against a host or the whole cluster. When used against a host, it only resolves names from the given host, so I suggest running it against clusters only. That way, names are displayed for all disks and hosts in the VSAN. It is very helpful when you are troubleshooting disk-full errors. The command returns information about:

- Disk Display Name

- Disk Size

- Disk type (SSD or MD)

- Number of Components

- Capacity

- Used/Reserved percentage

- Health Status

usage: disks_stats [opts] hosts_and_clusters...

Show stats on all disks in VSAN

hosts_and_clusters: Path to a HostSystem or ClusterComputeResource

--compute-number-of-components, -c: Deprecated

--show-iops, -s: Show deprecated fields

--help, -h: Show this message

The -c option is deprecated; the number of components is displayed by default. The value displayed with the -s option is an assumption about the drive's maximum possible IOPS.

Example 1 - Print VSAN Disk stats from all hosts in a VSAN enabled Cluster:

/localhost/DC> vsan.disks_stats ~cluster

+---------------------------------+-----------------+-------+------+-----------+------+----------+--------+

| | | | Num | Capacity | | | Status |

| DisplayName | Host | isSSD | Comp | Total | Used | Reserved | Health |

+---------------------------------+-----------------+-------+------+-----------+------+----------+--------+

| t10.ATA_____SanDisk_SDSSDP064G | esx1.virten.loc | SSD | 0 | 41.74 GB | 0 % | 0 % | OK |

| t10.ATA_____WDC_WD3000HLFS | esx1.virten.loc | MD | 30 | 279.25 GB | 43 % | 42 % | OK |

| t10.ATA_____WDC_WD3000HLFS | esx1.virten.loc | MD | 32 | 279.25 GB | 41 % | 40 % | OK |

| t10.ATA_____WDC_WD3000HLFS | esx1.virten.loc | MD | 24 | 279.25 GB | 33 % | 32 % | OK |

+---------------------------------+-----------------+-------+------+-----------+------+----------+--------+

| t10.ATA_____SanDisk_SDSSDP064G | esx2.virten.loc | SSD | 0 | 41.74 GB | 0 % | 0 % | OK |

| t10.ATA_____WDC_WD3000HLFS | esx2.virten.loc | MD | 32 | 279.25 GB | 44 % | 44 % | OK |

| t10.ATA_____WDC_WD3000HLFS | esx2.virten.loc | MD | 34 | 279.25 GB | 46 % | 44 % | OK |

| t10.ATA_____WDC_WD3000HLFS | esx2.virten.loc | MD | 28 | 279.25 GB | 33 % | 32 % | OK |

+---------------------------------+-----------------+-------+------+-----------+------+----------+--------+

| t10.ATA_____SanDisk_SDSSDP064G | esx3.virten.loc | SSD | 0 | 41.74 GB | 0 % | 0 % | OK |

| t10.ATA_____WDC_WD3000HLFS | esx3.virten.loc | MD | 35 | 279.25 GB | 42 % | 42 % | OK |

| t10.ATA_____WDC_WD3000HLFS | esx3.virten.loc | MD | 33 | 279.25 GB | 43 % | 42 % | OK |

| t10.ATA_____WDC_WD3000HLFS | esx3.virten.loc | MD | 22 | 279.25 GB | 36 % | 34 % | OK |

+---------------------------------+-----------------+-------+------+-----------+------+----------+--------+

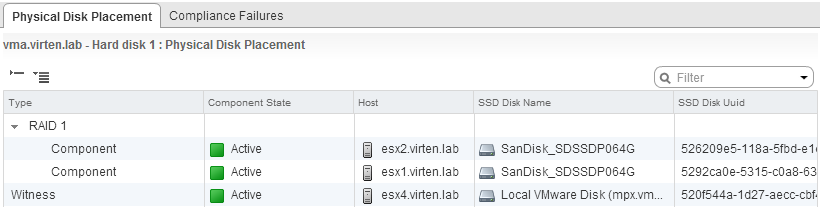

vsan.vm_object_info

Prints VSAN object information about a VM. This command is the equivalent of the Manage > VM Storage Policies tab in the vSphere Web Client and allows you to identify where the stripes, mirrors and witnesses of virtual disks are located. The command returns information about:

- Namespace directory (Virtual Machine home directory)

- Disk backing (Virtual Disks)

- Number of objects (DOM Objects)

- UUID of objects and components (useful for other commands)

- Location of object stripes and mirrors

- Location of object witness

- Storage Policy (hostFailuresToTolerate, forceProvisioning, stripeWidth, etc.)

- Resync Status

usage: vm_object_info [opts] vms...

Fetch VSAN object information about a VM

vms: Path to a VirtualMachine

--cluster, -c <s>: Cluster on which to fetch the object info

--perspective-from-host, -p <s>: Host to query object info from

--include-detailed-usage, -i: Include detailed usage info

--help, -h: Show this message

The -i parameter displays additional information about the physical usage (physUsage) of objects.

Example 1 - Print VM object information:

/localhost/DC> vsan.vm_object_info ~vm

VM vma.virten.lab:

Namespace directory

DOM Object: f3bfa952-175f-4d7e-1eae-001b2193b3b0 (owner: esx2.virten.lab, policy: hostFailuresToTolerate = 1, stripeWidth = 1, spbmProfileId = fbd74e7a-2bf9-481d-88b6-22c0abbc8898, proportionalCapacity = [0, 100], spbmProfileGenerationNumber = 1)

Witness: eb16af52-dd3f-b6c4-19f6-001b2193b3b0 (state: ACTIVE (5), host: esx1.virten.lab, md: t10.ATA_____WD3000HLFS, ssd: t10.ATA_____SanDisk_SDSSDP064G)

Witness: eb16af52-1865-b3c4-3abd-001b2193b3b0 (state: ACTIVE (5), host: esx2.virten.lab, md: t10.ATA_____WD3000HLFS, ssd: t10.ATA_____SanDisk_SDSSDP064G)

Witness: eb16af52-805a-b5c4-cf5d-001b2193b3b0 (state: ACTIVE (5), host: esx1.virten.lab, md: t10.ATA_____WD3000HLFS, ssd: t10.ATA_____SanDisk_SDSSDP064G)

RAID_1

RAID_0

Component: 2c16af52-89fa-db48-09f8-001b2193b3b0 (state: ACTIVE (5), host: esx4.virten.lab, md: t10.ATA_____WD3000HLFS, ssd: t10.ATA_____SanDisk_SDSSDP064G)

Component: 2c16af52-6f1f-da48-5c92-001b2193b3b0 (state: ACTIVE (5), host: esx4.virten.lab, md: t10.ATA_____WD3000HLFS, ssd: t10.ATA_____SanDisk_SDSSDP064G)

RAID_0

Component: f3bfa952-5d7b-f4ba-190f-001b2193b3b0 (state: ACTIVE (5), host: esx2.virten.lab, md: t10.ATA_____WD3000HLFS, ssd: t10.ATA_____SanDisk_SDSSDP064G)

Component: f3bfa952-958b-f3ba-5648-001b2193b3b0 (state: ACTIVE (5), host: esx1.virten.lab, md: t10.ATA_____WD3000HLFS, ssd: t10.ATA_____SanDisk_SDSSDP064G)

Disk backing: [vsanDatastore] f3bfa952-175f-4d7e-1eae-001b2193b3b0/vma.virten.lab.vmdk

DOM Object: fabfa952-721d-fc1f-82d3-001b2193b3b0 (owner: esx2.virten.lab, policy: spbmProfileGenerationNumber = 1, hostFailuresToTolerate = 1, spbmProfileId = fbd74e7a-2bf9-481d-88b6-22c0abbc8898)

Witness: e916af52-075e-9740-f1c7-001b2193b3b0 (state: ACTIVE (5), host: esx4.virten.lab, md: t10.ATA_____WD3000HLFS, ssd: t10.ATA_____SanDisk_SDSSDP064G)

RAID_1

Component: f015af52-7c95-0d57-d7b3-001b2193b3b0 (state: ACTIVE (5), host: esx1.virten.local, md: t10.ATA_____WD3000HLFS, ssd: t10.ATA_____SanDisk_SDSSDP064G, dataToSync: 0.00 GB)

Component: fabfa952-aa57-d02c-9204-001b2193b3b0 (state: ACTIVE (5), host: esx2.virten.lab, md: t10.ATA_____WD3000HLFS, ssd: t10.ATA_____SanDisk_SDSSDP064G)

What we see here is a virtual machine with one virtual disk. That makes 2 DOM objects: the namespace directory and the virtual disk.

vsan.cmmds_find

A powerful command to find objects and detailed object information. It can be used against hosts or clusters. Running it against a cluster is recommended, because UUIDs are then resolved into readable names.
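DISK entries returned by this command carry a "capacity" value in their Content column. Judging from the sample values in this guide, the value appears to be in bytes, and the "GB" figures printed by the other RVC commands appear to be binary gigabytes (2^30 bytes). Both points are my reading of the numbers, not documented behavior. A small Python sketch to convert the values (the helper name is my own):

```python
GIB = 1024 ** 3  # binary gigabyte, assumed to be what RVC prints as "GB"


def bytes_to_gib(capacity_bytes):
    """Convert a CMMDS 'capacity' value (assumed to be bytes) to GiB."""
    return capacity_bytes / GIB


# Capacity values taken from the DISK entries shown in this guide.
for capacity in (299842404352, 149786984448, 53418655744):
    print(f"{capacity} bytes = {bytes_to_gib(capacity):.2f} GB")
```

The first value works out to exactly 279.25, the same size that vsan.disks_stats reports for the WDC_WD3000HLFS disks.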

usage: cmmds_find [opts] cluster_or_host

CMMDS Find

cluster_or_host: Path to a ClusterComputeResource or HostSystem

--type, -t <s>: CMMDS type, e.g. DOM_OBJECT, LSOM_OBJECT, POLICY, DISK etc.

--uuid, -u <s>: UUID of the entry.

--owner, -o <s>: UUID of the owning node.

--help, -h: Show this message

Example 1 - List all Disks in VSAN:

/localhost/DC> vsan.cmmds_find ~cluster -t DISK

+----+------+--------------------------------------+-----------------+---------+-------------------------------------------------------+

| # | Type | UUID | Owner | Health | Content |

+----+------+--------------------------------------+-----------------+---------+-------------------------------------------------------+

| 1 | DISK | 527547f8-247e-9901-c532-04b8d5b952b0 | esx2.virten.lab | Healthy | {"capacity"=>299842404352, |

| | | | | | "iops"=>100, |

| | | | | | "iopsWritePenalty"=>10000000, |

| | | | | | "throughput"=>200000000, |

| | | | | | "throughputWritePenalty"=>0, |

| | | | | | "latency"=>3400000, |

| | | | | | "latencyDeviation"=>0, |

| | | | | | "reliabilityBase"=>10, |

| | | | | | "reliabilityExponent"=>15, |

| | | | | | "mtbf"=>1600000, |

| | | | | | "l2CacheCapacity"=>0, |

| | | | | | "l1CacheCapacity"=>16777216, |

| | | | | | "isSsd"=>0, |

| | | | | | "ssdUuid"=>"526209e5-118a-5fbd-e1d0-0bdd816b32eb", |

| | | | | | "volumeName"=>"52a9bf13-6b13325f-e4da-001b2193b3b0"} |

| 2 | DISK | 526e0510-6461-3410-5be0-0d9ec1b1495f | esx4.virten.lab | Healthy | {"capacity"=>53418655744, |

| | | | | | "iops"=>100, |

| | | | | | "iopsWritePenalty"=>10000000, |

| | | | | | "throughput"=>200000000, |

| | | | | | "throughputWritePenalty"=>0, |

| | | | | | "latency"=>3400000, |

| | | | | | "latencyDeviation"=>0, |

| | | | | | "reliabilityBase"=>10, |

| | | | | | "reliabilityExponent"=>15, |

| | | | | | "mtbf"=>1600000, |

| | | | | | "l2CacheCapacity"=>0, |

| | | | | | "l1CacheCapacity"=>16777216, |

| | | | | | "isSsd"=>0, |

| | | | | | "ssdUuid"=>"520f544a-1d27-aecc-cbf4-2f170e4bf0f8", |

| | | | | | "volumeName"=>"52af1537-3d763738-2c93-005056bb3032"} |

[...]

Example 2 - List all disks from a specific ESXi host. Identify the host's UUID (Node UUID) with vsan.host_info:

/localhost/DC> vsan.host_info ~esx

VSAN enabled: yes

Cluster info:

Cluster role: agent

Cluster UUID: 525d9c62-1b87-5577-f76d-6f6d7bb4ba34

Node UUID: 52a61733-bcf1-4c92-6436-001b2193b9a4

Member UUIDs: ["52785959-6bee-21e0-e664-eca86bf99b3f", "52a61831-6060-22d7-c23d-001b2193b3b0", "52a61733-bcf1-4c92-6436-001b2193b9a4", "52aaffa8-d345-3a75-6809-005056bb3032"] (4)

Storage info:

Auto claim: no

Disk Mappings:

SSD: SanDisk_SDSSDP064G - 59 GB

MD: WDC_WD1500HLFS2D01G6U3 - 139 GB

MD: WDC_WD1500ADFD2D00NLR1 - 139 GB

MD: VB0250EAVER - 232 GB

NetworkInfo:

Adapter: vmk1 (192.168.222.21)

/localhost/DC> vsan.cmmds_find ~cluster -t DISK -o 52a61733-bcf1-4c92-6436-001b2193b9a4

+---+------+--------------------------------------+-----------------+---------+-------------------------------------------------------+

| # | Type | UUID | Owner | Health | Content |

+---+------+--------------------------------------+-----------------+---------+-------------------------------------------------------+

| 1 | DISK | 52f853e4-7eca-2e9c-b157-843aefcde6dd | esx1.virten.lab | Healthy | {"capacity"=>149786984448, |

| | | | | | "iops"=>100, |

| | | | | | "iopsWritePenalty"=>10000000, |

| | | | | | "throughput"=>200000000, |

| | | | | | "throughputWritePenalty"=>0, |

| | | | | | "latency"=>3400000, |

| | | | | | "latencyDeviation"=>0, |

| | | | | | "reliabilityBase"=>10, |

| | | | | | "reliabilityExponent"=>15, |

| | | | | | "mtbf"=>1600000, |

| | | | | | "l2CacheCapacity"=>0, |

| | | | | | "l1CacheCapacity"=>16777216, |

| | | | | | "isSsd"=>0, |

| | | | | | "ssdUuid"=>"5292ca0e-5315-c0a8-6396-bd7d93a92d92", |

| | | | | | "volumeName"=>"52a9befe-6a2ccbfe-9d16-001b2193b9a4"} |

| 2 | DISK | 5292ca0e-5315-c0a8-6396-bd7d93a92d92 | esx1.virten.lab | Healthy | {"capacity"=>44812992512, |

| | | | | | "iops"=>20000, |

| | | | | | "iopsWritePenalty"=>10000000, |

| | | | | | "throughput"=>200000000, |

| | | | | | "throughputWritePenalty"=>0, |

| | | | | | "latency"=>3400000, |

| | | | | | "latencyDeviation"=>0, |

| | | | | | "reliabilityBase"=>10, |

| | | | | | "reliabilityExponent"=>15, |

| | | | | | "mtbf"=>2000000, |

| | | | | | "l2CacheCapacity"=>0, |

| | | | | | "l1CacheCapacity"=>16777216, |

| | | | | | "isSsd"=>1, |

| | | | | | "ssdUuid"=>"5292ca0e-5315-c0a8-6396-bd7d93a92d92", |

| | | | | | "volumeName"=>"NA"} |

| 3 | DISK | 5287fb1c-4cfc-04e0-6a22-281b4c259a1c | esx1.virten.lab | Healthy | {"capacity"=>249913409536, |

| | | | | | "iops"=>100, |

| | | | | | "iopsWritePenalty"=>10000000, |

[...]

Example 3 - List DOM Objects from a specific ESXi Host:

/localhost/DC> vsan.cmmds_find ~cluster -t DOM_OBJECT -o 52a61733-bcf1-4c92-6436-001b2193b9a4

[...]

| 16 | DOM_OBJECT | 7e62c152-7dfb-c6e5-07b8-001b2193b9a4 | esx1.virten.lab | Healthy | {"type"=>"Configuration", |

| | | | | | "attributes"=> |

| | | | | | {"CSN"=>3, |

| | | | | | "addressSpace"=>268435456, |

| | | | | | "compositeUuid"=>"7e62c152-7dfb-c6e5-07b8-001b2193b9a4"}, |

| | | | | | "child-1"=> |

| | | | | | {"type"=>"RAID_1", |

| | | | | | "attributes"=>{}, |

| | | | | | "child-1"=> |

| | | | | | {"type"=>"Component", |

| | | | | | "attributes"=> |

| | | | | | {"capacity"=>268435456, |

| | | | | | "addressSpace"=>268435456, |

| | | | | | "componentState"=>5, |

| | | | | | "componentStateTS"=>1388405374, |

| | | | | | "faultDomainId"=>"52a61831-6060-22d7-c23d-001b2193b3b0"}, |

| | | | | | "componentUuid"=>"7e62c152-763d-1400-2b06-001b2193b9a4", |

| | | | | | "diskUuid"=>"527547f8-247e-9901-c532-04b8d5b952b0"}, |

| | | | | | "child-2"=> |

| | | | | | {"type"=>"Component", |

| | | | | | "attributes"=> |

| | | | | | {"capacity"=>268435456, |

| | | | | | "addressSpace"=>268435456, |

| | | | | | "componentState"=>5, |

| | | | | | "componentStateTS"=>1388590529, |

| | | | | | "staleLsn"=>0, |

| | | | | | "faultDomainId"=>"52a61733-bcf1-4c92-6436-001b2193b9a4"}, |

| | | | | | "componentUuid"=>"c135c452-2f04-0533-dbbc-001b2193b9a4", |

| | | | | | "diskUuid"=>"52f853e4-7eca-2e9c-b157-843aefcde6dd"}}, |

| | | | | | "child-2"=> |

| | | | | | {"type"=>"Witness", |

| | | | | | "attributes"=> |

| | | | | | {"componentState"=>5, |

| | | | | | "isWitness"=>1, |

| | | | | | "faultDomainId"=>"52aaffa8-d345-3a75-6809-005056bb3032"}, |

| | | | | | "componentUuid"=>"c135c452-cd77-0733-1708-001b2193b9a4", |

| | | | | | "diskUuid"=>"526e0510-6461-3410-5be0-0d9ec1b1495f"}} |

[...]

Example 4 - List LSOM Objects (Components) from a specific ESXi Host:

/localhost/DC> vsan.cmmds_find ~cluster -t LSOM_OBJECT -o 52a61733-bcf1-4c92-6436-001b2193b9a4

[...]

| 95 | LSOM_OBJECT | 2739c452-a2cf-e9ed-9d07-eca86bf99b3f | esx1.virten.lab | Healthy | {"diskUuid"=>"52bc69ac-35ad-c2f7-bab8-2ecf298cd4e5", |

| | | | | | "compositeUuid"=>"1d61c152-d60c-6a95-5470-eca86bf99b3f", |

| | | | | | "capacityUsed"=>17181966336, |

| | | | | | "physCapacityUsed"=>0} |

| 96 | LSOM_OBJECT | 895fc152-391f-c4f2-a697-eca86bf99b3f | esx1.virten.lab | Healthy | {"diskUuid"=>"52bc69ac-35ad-c2f7-bab8-2ecf298cd4e5", |

| | | | | | "compositeUuid"=>"895fc152-3056-1cde-3a1b-eca86bf99b3f", |

| | | | | | "capacityUsed"=>53477376, |

| | | | | | "physCapacityUsed"=>53477376} |

| 97 | LSOM_OBJECT | 6537c452-a9b1-f4f5-badb-001b2193b3b0 | esx1.virten.lab | Healthy | {"diskUuid"=>"52f853e4-7eca-2e9c-b157-843aefcde6dd", |

| | | | | | "compositeUuid"=>"ff5fc152-65b6-bc34-ff54-001b2193b9a4", |

| | | | | | "capacityUsed"=>2097152, |

| | | | | | "physCapacityUsed"=>0} |

[...]

vsan.disk_object_info

Prints all objects that are located on a physical disk. This command helps during troubleshooting when you want to identify all objects on a physical disk. You have to know the disk UUID which can be identified with the vsan.cmmds_find command.

usage: disk_object_info cluster_or_host disk_uuid...

Fetch information about all VSAN objects on a given physical disk

cluster_or_host: Cluster or host on which to fetch the object info ClusterComputeResource or HostSystem

disk_uuid:

--help, -h: Show this message
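If you need the disk UUIDs in a script rather than on screen, the vsan.cmmds_find DISK table can be parsed with a few lines of Python. This is only a sketch: it assumes you have saved the table as text with one row per line, in the whitespace-collapsed form shown in this post, and the names ROW, PAIR and parse_disks are made up for this illustration. They are not part of RVC.

```python
import re

# First line of a DISK entry in saved vsan.cmmds_find output (assumed layout).
ROW = re.compile(r'^\|\s*\d+\s*\|\s*DISK\s*\|\s*([0-9a-f-]{36})\s*\|\s*(\S+)\s*\|\s*(\w+)\s*\|')
# One "key"=>value pair of the Ruby hash printed in the Content column.
PAIR = re.compile(r'"(\w+)"=>("?)([^",}]+)\2')


def parse_disks(output):
    """Return one dict per DISK row with uuid, owner, health and the content fields."""
    disks = []
    for line in output.splitlines():
        row = ROW.match(line)
        if row:
            disks.append({"uuid": row.group(1), "owner": row.group(2),
                          "health": row.group(3)})
        if disks:
            # Content pairs on this line belong to the most recent DISK row.
            for key, _quote, value in PAIR.findall(line):
                disks[-1][key] = int(value) if value.isdigit() else value
    return disks


# Shortened sample taken from the output in this post.
SAMPLE = '''\
| # | Type | UUID | Owner | Health | Content |
| 1 | DISK | 52f853e4-7eca-2e9c-b157-843aefcde6dd | esx1.virten.lab | Healthy | {"capacity"=>149786984448, |
| | | | | | "isSsd"=>0, |
| | | | | | "ssdUuid"=>"5292ca0e-5315-c0a8-6396-bd7d93a92d92", |
| | | | | | "volumeName"=>"52a9befe-6a2ccbfe-9d16-001b2193b9a4"} |
| 2 | DISK | 5292ca0e-5315-c0a8-6396-bd7d93a92d92 | esx1.virten.lab | Healthy | {"capacity"=>44812992512, |
| | | | | | "isSsd"=>1, |
| | | | | | "volumeName"=>"NA"} |
'''

DISKS = parse_disks(SAMPLE)
for disk in DISKS:
    kind = "SSD" if disk.get("isSsd") == 1 else "MD"
    print("%s %s %s %.1f GiB" % (disk["uuid"], disk["owner"], kind, disk["capacity"] / 2**30))
```

The capacity field is in bytes. Divided by 2**30, the first disk comes out at 139.5, which lines up with the 139 GB that vsan.host_info reports for the same magnetic disk.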

Example 1 - Get Disk UUID with vsan.cmmds_find and display all objects located on this disk:

/localhost/DC> vsan.cmmds_find ~cluster -t DISK

+---+------+--------------------------------------+-----------------+---------+-------------------------------------------------------+

| # | Type | UUID | Owner | Health | Content |

+---+------+--------------------------------------+-----------------+---------+-------------------------------------------------------+

| 1 | DISK | 52f853e4-7eca-2e9c-b157-843aefcde6dd | esx1.virten.lab | Healthy | {"capacity"=>149786984448, |

| | | | | | "iops"=>100, |

| | | | | | "iopsWritePenalty"=>10000000, |

| | | | | | "throughput"=>200000000, |

| | | | | | "throughputWritePenalty"=>0, |

| | | | | | "latency"=>3400000, |

| | | | | | "latencyDeviation"=>0, |

| | | | | | "reliabilityBase"=>10, |

| | | | | | "reliabilityExponent"=>15, |

| | | | | | "mtbf"=>1600000, |

| | | | | | "l2CacheCapacity"=>0, |

| | | | | | "l1CacheCapacity"=>16777216, |

| | | | | | "isSsd"=>0, |

| | | | | | "ssdUuid"=>"5292ca0e-5315-c0a8-6396-bd7d93a92d92", |

| | | | | | "volumeName"=>"52a9befe-6a2ccbfe-9d16-001b2193b9a4"} |

[...]

/localhost/DC> vsan.disk_object_info ~cluster 52f853e4-7eca-2e9c-b157-843aefcde6dd

Fetching VSAN disk info from [...] (this may take a moment) ...

Physical disk mpx.vmhba1:C0:T4:L0 (52f853e4-7eca-2e9c-b157-843aefcde6dd):

DOM Object: 27e4bd52-49f6-050e-178b-00505687439c (owner: esx1.virten.lab, policy: hostFailuresToTolerate = 1, stripeWidth = 2, forceProvisioning = 1, proportionalCapacity = 100)

Context: Part of VM vm1: Disk: [vsanDatastore] 5078bd52-2977-8cf9-107c-00505687439c/vm1_1.vmdk

Witness: bae5bd52-54f7-05ab-f4b3-00505687439c (state: ACTIVE (5), host: esx1.virten.lab, md: mpx.vmhba1:C0:T2:L0, ssd: mpx.vmhba1:C0:T1:L0)

Witness: bae5bd52-ebf9-ffaa-cbb4-00505687439c (state: ACTIVE (5), host: esx1.virten.lab, md: mpx.vmhba1:C0:T4:L0, ssd: mpx.vmhba1:C0:T1:L0)

Witness: bae5bd52-e59e-03ab-b080-00505687439c (state: ACTIVE (5), host: esx1.virten.lab, md: **mpx.vmhba1:C0:T4:L0**, ssd: mpx.vmhba1:C0:T1:L0)

RAID_1

RAID_0

Component: abe5bd52-4128-cf28-ef6f-00505687439c (state: ACTIVE (5), host: esx2.virtenlab, md: mpx.vmhba1:C0:T4:L0, ssd: mpx.vmhba1:C0:T1:L0)

Component: abe5bd52-1516-cb28-6e87-00505687439c (state: ACTIVE (5), host: esx2.virtenlab, md: mpx.vmhba1:C0:T2:L0, ssd: mpx.vmhba1:C0:T1:L0)

RAID_0

Component: 27e4bd52-4048-1465-ac76-00505687439c (state: ACTIVE (5), host: esx4.virtenlab, md: **mpx.vmhba1:C0:T4:L0**, ssd: mpx.vmhba1:C0:T1:L0)

Component: 27e4bd52-1d88-1265-22ab-00505687439c (state: ACTIVE (5), host: esx4.virtenlab, md: mpx.vmhba1:C0:T2:L0, ssd: mpx.vmhba1:C0:T1:L0)

DOM Object: 7e78bd52-7595-1716-85a2-005056871792 (owner: esx1.virtenlab, policy: hostFailuresToTolerate = 1, stripeWidth = 2, forceProvisioning = 1, proportionalCapacity = 100)

Context: Part of VM debian: Disk: [vsanDatastore] 6978bd52-4d92-05ed-dad2-005056871792/debian.vmdk

Witness: aee5bd52-2ae2-197b-e67a-005056871792 (state: ACTIVE (5), host: esx4.virtenlab, md: mpx.vmhba1:C0:T4:L0, ssd: mpx.vmhba1:C0:T1:L0)

Witness: aee5bd52-8557-0d7b-3022-005056871792 (state: ACTIVE (5), host: esx2.virtenlab, md: mpx.vmhba1:C0:T4:L0, ssd: mpx.vmhba1:C0:T1:L0)

Witness: aee5bd52-7443-177b-74a8-005056871792 (state: ACTIVE (5), host: esx2.virtenlab, md: mpx.vmhba1:C0:T4:L0, ssd: mpx.vmhba1:C0:T1:L0)

RAID_1

RAID_0

Component: 36debd52-7390-a05d-9225-005056871792 (state: ACTIVE (5), host: esx4.virtenlab, md: mpx.vmhba1:C0:T2:L0, ssd: mpx.vmhba1:C0:T1:L0)

Component: 36debd52-a9b8-965d-03a6-005056871792 (state: ACTIVE (5), host: esx4.virtenlab, md: **mpx.vmhba1:C0:T4:L0**, ssd: mpx.vmhba1:C0:T1:L0)

RAID_0

Component: 7f78bd52-2d59-c558-09f9-005056871792 (state: ACTIVE (5), host: esx3.virtenlab, md: mpx.vmhba1:C0:T2:L0, ssd: mpx.vmhba1:C0:T1:L0)

Component: 7f78bd52-d827-c458-9d94-005056871792 (state: ACTIVE (5), host: esx3.virtenlab, md: mpx.vmhba1:C0:T4:L0, ssd: mpx.vmhba1:C0:T1:L0)

DOM Object: 2ee4bd52-d1af-dd19-626d-00505687439c (owner: esx3.virtenlab, policy: hostFailuresToTolerate = 1, stripeWidth = 2, forceProvisioning = 1, proportionalCapacity = 100)

Context: Part of VM vm1: Disk: [vsanDatastore] 5078bd52-2977-8cf9-107c-00505687439c/vm1_2.vmdk

Witness: bde5bd52-959d-5d8b-6058-00505687439c (state: ACTIVE (5), host: esx3.virtenlab, md: **mpx.vmhba1:C0:T4:L0**, ssd: mpx.vmhba1:C0:T1:L0)

Witness: bde5bd52-4a48-588b-e464-00505687439c (state: ACTIVE (5), host: esx1.virtenlab, md: mpx.vmhba1:C0:T4:L0, ssd: mpx.vmhba1:C0:T1:L0)

[...]

vsan.object_info

Prints information about the physical location and configuration of objects. The output is very similar to vsan.vm_object_info, but the command is run against a single object.

usage: object_info [opts] cluster obj_uuid...

Fetch information about a VSAN object

cluster: Cluster on which to fetch the object info HostSystem or ClusterComputeResource

obj_uuid:

--skip-ext-attr, -s: Don't fetch extended attributes

--include-detailed-usage, -i: Include detailed usage info

--help, -h: Show this message

Example 1 - Print physical location of a DOM Object:

/localhost/DC> vsan.object_info ~cluster 7e62c152-7dfb-c6e5-07b8-001b2193b9a4

2014-01-01 18:35:16 +0000: Fetching VSAN disk info from esx1.virten.lab (may take a moment) ...

2014-01-01 18:35:16 +0000: Fetching VSAN disk info from esx4.virten.lab (may take a moment) ...

2014-01-01 18:35:16 +0000: Fetching VSAN disk info from esx2.virten.lab (may take a moment) ...

2014-01-01 18:35:16 +0000: Fetching VSAN disk info from esx3.virten.lab (may take a moment) ...

2014-01-01 18:35:18 +0000: Done fetching VSAN disk infos

DOM Object: 7e62c152-7dfb-c6e5-07b8-001b2193b9a4 (owner: esx1.virten.lab, policy: hostFailuresToTolerate = 1, forceProvisioning = 1, proportionalCapacity = 100)

Witness: c135c452-cd77-0733-1708-001b2193b9a4 (state: ACTIVE (5), host: esx4.virten.lab, md: t10.ATA_____WDC_WD300HLFSD2D03DFC1, ssd: t10.ATA_____SanDisk_SDSSDP064G)

RAID_1

Component: c135c452-2f04-0533-dbbc-001b2193b9a4 (state: ACTIVE (5), host: esx1.virten.lab, md: t10.ATA_____WDC_WD300HLFSD2D00NLR1, ssd: t10.ATA_____SanDisk_SDSSDP064G)

Component: 7e62c152-763d-1400-2b06-001b2193b9a4 (state: ACTIVE (5), host: esx2.virten.lab, md: t10.ATA_____WDC_WD3000HLFS2D01G6U1, ssd: t10.ATA_____SanDisk_SDSSDP064G)

vsan.object_reconfigure

Changes the policy of DOM objects. To use this command, you need to know the object UUID, which can be identified with vsan.cmmds_find or vsan.vm_object_info. Available policy options are:

- hostFailuresToTolerate (Number of failures to tolerate)

- forceProvisioning (If VSAN can't fulfill the policy requirements for an object, it will still deploy it)

- stripeWidth (Number of disk stripes per object)

- cacheReservation (Flash read cache reservation)

- proportionalCapacity (Object space reservation)

Be careful to keep existing policies. Always specify all options. The policy has to be defined in the following format:

'(("hostFailuresToTolerate" i1) ("forceProvisioning" i1))'
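Because every option has to be specified again, it can help to build the policy string from the policy that vsan.vm_object_info or vsan.object_info currently shows. The following Python sketch does that. It is not part of RVC: the function names are hypothetical, it only handles integer values, and it assumes that the "i" prefix in the policy expression marks an integer.

```python
import re


def parse_policy(dom_object_line):
    """Extract 'name = value' pairs from the policy part of a 'DOM Object:' line."""
    match = re.search(r'policy:\s*([^)]*)\)', dom_object_line)
    if not match:
        return {}
    return {name: int(value)
            for name, value in re.findall(r'(\w+)\s*=\s*(\d+)', match.group(1))}


def format_policy(policy):
    """Render a dict in the format shown above (assumption: 'i' prefixes an integer)."""
    pairs = ['("%s" i%d)' % (name, value) for name, value in policy.items()]
    return "(" + " ".join(pairs) + ")"


# A 'DOM Object:' line as printed by vsan.vm_object_info in this post.
LINE = ("DOM Object: 7e78bd52-7595-1716-85a2-005056871792 (owner: esx1.virtenlab, "
        "policy: hostFailuresToTolerate = 1, stripeWidth = 2, forceProvisioning = 1)")

POLICY = parse_policy(LINE)
POLICY["stripeWidth"] = 1   # change one option, keep all others
NEW_POLICY = format_policy(POLICY)
print("-p '%s'" % NEW_POLICY)
```

Starting from the existing policy means that options you did not intend to touch, such as forceProvisioning, are carried over instead of being dropped.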

usage: object_reconfigure [opts] cluster obj_uuid...

Reconfigure a VSAN object

cluster: Cluster on which to execute the reconfig HostSystem or ClusterComputeResource

obj_uuid: Object UUID

--policy, -p <s>: New policy

--help, -h: Show this message

Example 1 - Change the disk policy to tolerate 2 host failures.

Current policy is hostFailuresToTolerate = 1, stripeWidth = 1

/localhost/DC> vsan.object_reconfigure ~cluster 5078bd52-2977-8cf9-107c-00505687439c -p '(("hostFailuresToTolerate" i2) ("stripeWidth" i1))'

Example 2 - Disable force provisioning. Current policy is hostFailuresToTolerate = 1, stripeWidth = 1

/localhost/DC> vsan.object_reconfigure ~cluster 5078bd52-2977-8cf9-107c-00505687439c -p '(("hostFailuresToTolerate" i1) ("stripeWidth" i1) ("forceProvisioning" i0))'

Example 3 - Locate and change VM disk policies

/localhost/DC> vsan.vm_object_info ~vm

VM perf1:

Namespace directory

[...]

Disk backing: [vsanDatastore] 6978bd52-4d92-05ed-dad2-005056871792/vma.virten.lab.vmdk

DOM Object: 7e78bd52-7595-1716-85a2-005056871792 (owner: esx1.virtenlab, policy: hostFailuresToTolerate = 1, stripeWidth = 2, forceProvisioning = 1)

Witness: aee5bd52-7443-177b-74a8-005056871792 (state: ACTIVE (5), host: esx2.virten.lab, md: mpx.vmhba1:C0:T4:L0, ssd: mpx.vmhba1:C0:T1:L0)

RAID_1

RAID_0

Component: 36debd52-7390-a05d-9225-005056871792 (state: ACTIVE (5), esx3.virten.lab, md: mpx.vmhba1:C0:T2:L0, ssd: mpx.vmhba1:C0:T1:L0)

Component: 36debd52-a9b8-965d-03a6-005056871792 (state: ACTIVE (5), esx3.virten.lab, md: mpx.vmhba1:C0:T4:L0, ssd: mpx.vmhba1:C0:T1:L0)

RAID_0

Component: 7f78bd52-2d59-c558-09f9-005056871792 (state: ACTIVE (5), esx1.virten.lab, md: mpx.vmhba1:C0:T2:L0, ssd: mpx.vmhba1:C0:T1:L0)

Component: 7f78bd52-d827-c458-9d94-005056871792 (state: ACTIVE (5), esx1.virten.lab, md: mpx.vmhba1:C0:T4:L0, ssd: mpx.vmhba1:C0:T1:L0)

/localhost/DC> vsan.object_reconfigure ~cluster 7e78bd52-7595-1716-85a2-005056871792 -p '(("hostFailuresToTolerate" i1) ("stripeWidth" i1) ("forceProvisioning" i1))'

Reconfiguring '7e78bd52-7595-1716-85a2-005056871792' to (("hostFailuresToTolerate" i1) ("stripeWidth" i1) ("forceProvisioning" i1))

All reconfigs initiated. Synching operation may be happening in the background

vsan.vmdk_stats

Displays read cache and capacity stats for vmdks.

usage: vmdk_stats cluster_or_host vms...

Print read cache and capacity stats for vmdks.

Disk Capacity (GB):

Disk Size: Size of the vmdk

Used Capacity: MD capacity used by this vmdk

Data Size: Size of data on this vmdk

Read Cache (GB):

Used: RC used by this vmdk

Reserved: RC reserved by this vmdk

cluster_or_host: Path to a ClusterComputeResource or HostSystem

vms: Path to a VirtualMachine

--help, -h: Show this message

Troubleshooting

vsan.obj_status_report

Provides information about objects and their health status. With this command, you can verify that all object components are healthy, which means that the witness and all mirrors are available and in sync. It also identifies possibly orphaned objects.

usage: obj_status_report [opts] cluster_or_host

Print component status for objects in the cluster.

cluster_or_host: Path to a ClusterComputeResource or HostSystem

--print-table, -t: Print a table of object and their

status, default all objects

--filter-table, -f <s>: Filter the obj table based on status

displayed in histogram, e.g. 2/3

--print-uuids, -u: In the table, print object UUIDs

instead of vmdk and vm paths

--ignore-node-uuid, -i <s>: Estimate the status of objects if

all comps on a given host were healthy.

--help, -h: Show this message

Example 1 - Simple component status histogram.

We can see 45 objects with 3/3 healthy components and 23 objects with 7/7 healthy components. With default policies, 3/3 are disks (2 mirrors + witness) and 7/7 are namespace directories. We can also see an orphaned object in this example.

/localhost/DC> vsan.obj_status_report ~cluster

2014-01-03 19:10:13 +0000: Querying all VMs on VSAN ...

2014-01-03 19:10:13 +0000: Querying all objects in the system from esx1.virten.lab ...

2014-01-03 19:10:14 +0000: Querying all disks in the system ...

2014-01-03 19:10:15 +0000: Querying all components in the system ...

2014-01-03 19:10:15 +0000: Got all the info, computing table ...

Histogram of component health for non-orphaned objects

+-------------------------------------+------------------------------+

| Num Healthy Comps / Total Num Comps | Num objects with such status |

+-------------------------------------+------------------------------+

| 3/3 | 45 |

| 7/7 | 23 |

+-------------------------------------+------------------------------+

Total non-orphans: 68

Histogram of component health for possibly orphaned objects

+-------------------------------------+------------------------------+

| Num Healthy Comps / Total Num Comps | Num objects with such status |

+-------------------------------------+------------------------------+

| 1/3 | 1 |

+-------------------------------------+------------------------------+

Total orphans: 1
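If you want to check the histogram from a script, for example in a monitoring job, the rows can be picked out with a regular expression. This is a minimal Python sketch that assumes you have captured the command output as text. The names ROW and unhealthy are hypothetical and not part of RVC.

```python
import re

# A histogram row: "| healthy/total | number of objects |"
ROW = re.compile(r'\|\s*(\d+)/(\d+)\s*\|\s*(\d+)\s*\|')


def unhealthy(report):
    """Return (healthy, total, objects) for every row where healthy < total."""
    rows = [(int(h), int(t), int(n)) for h, t, n in ROW.findall(report)]
    return [row for row in rows if row[0] < row[1]]


# Rows taken from the histograms shown in this example.
SAMPLE = '''\
| Num Healthy Comps / Total Num Comps | Num objects with such status |
| 3/3 | 45 |
| 7/7 | 23 |
| Num Healthy Comps / Total Num Comps | Num objects with such status |
| 1/3 | 1 |
'''

PROBLEMS = unhealthy(SAMPLE)
for healthy, total, objects in PROBLEMS:
    print("%d object(s) with only %d of %d components healthy" % (objects, healthy, total))
```

The sketch does not distinguish between the non-orphaned and the possibly orphaned histogram. It only reports rows in which not all components are healthy.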

Example 2 - Add a table with all objects and their status to the command output. This output reveals which object is actually orphaned.

/localhost/DC> vsan.obj_status_report ~cluster -t

2014-01-03 19:42:13 +0000: Querying all VMs on VSAN ...

2014-01-03 19:42:13 +0000: Querying all objects in the system from esx1.virten.lab ...

2014-01-03 19:42:14 +0000: Querying all disks in the system ...

2014-01-03 19:42:15 +0000: Querying all components in the system ...

2014-01-03 19:42:16 +0000: Got all the info, computing table ...

Histogram of component health for non-orphaned objects

+-------------------------------------+------------------------------+

| Num Healthy Comps / Total Num Comps | Num objects with such status |

+-------------------------------------+------------------------------+

| 3/3 | 45 |

| 7/7 | 23 |

+-------------------------------------+------------------------------+

Total non-orphans: 68

Histogram of component health for possibly orphaned objects

+-------------------------------------+------------------------------+

| Num Healthy Comps / Total Num Comps | Num objects with such status |

+-------------------------------------+------------------------------+

| 1/3 | 1 |

+-------------------------------------+------------------------------+

Total orphans: 1

+-----------------------------------------------------------------------------+---------+---------------------------+

| VM/Object | objects | num healthy / total comps |

+-----------------------------------------------------------------------------+---------+---------------------------+

| perf9 | 1 | |

| [vsanDatastore] 735ec152-da7c-64b1-ebfe-eca86bf99b3f/perf9.vmx | | 7/7 |

| perf8 | 1 | |

| [vsanDatastore] 195ec152-92a3-491b-801d-eca86bf99b3f/perf8.vmx | | 7/7 |

[...]

+-----------------------------------------------------------------------------+---------+---------------------------+

| Unassociated objects | | |

[...]

| 795dc152-6faa-9ae7-efe5-001b2193b9a4 | | 3/3 |

| d068ab52-b882-c6e8-32ca-eca86bf99b3f | | 1/3* |

| ce5dc152-c5f6-3efb-9a44-001b2193b3b0 | | 3/3 |

+-----------------------------------------------------------------------------+---------+---------------------------+

+------------------------------------------------------------------+

| Legend: * = all unhealthy comps were deleted (disks present) |

| - = some unhealthy comps deleted, some not or can't tell |

| no symbol = We cannot conclude any comps were deleted |

+------------------------------------------------------------------+

Example 3 - Add a table filtered to unhealthy objects. The filter 1/3 shows only objects with 1 of 3 components healthy.

/localhost/DC> vsan.obj_status_report ~cluster -t -f 1/3

[...]

+-----------------------------------------+---------+---------------------------+

| VM/Object | objects | num healthy / total comps |

+-----------------------------------------+---------+---------------------------+

| Unassociated objects | | |

| d068ab52-b882-c6e8-32ca-eca86bf99b3f | | 1/3* |

+-----------------------------------------+---------+---------------------------+

[...]

/localhost/DC> vsan.obj_status_report ~cluster -t -u

[...]

+-----------------------------------------+---------+---------------------------+

| VM/Object | objects | num healthy / total comps |

+-----------------------------------------+---------+---------------------------+

| perf9 | 1 | |

| 735ec152-da7c-64b1-ebfe-eca86bf99b3f | | 7/7 |

| perf8 | 1 | |

| 195ec152-92a3-491b-801d-eca86bf99b3f | | 7/7 |

| perf11 | 1 | |

| d65ec152-7d27-f441-39bb-eca86bf99b3f | | 7/7 |

[...]

vsan.check_state

Checks the state of VMs and VSAN objects. This command can also re-register VMs where objects are out of sync. I have not been able to reproduce VMs getting out of sync, so I could not test that yet. I will update this post when I have further information.

Example 1 - Check state

/localhost/DC> vsan.check_state ~cluster

2014-01-03 19:53:36 +0000: Step 1: Check for inaccessible VSAN objects

Detected 1 objects to not be inaccessible

Detected d068ab52-b882-c6e8-32ca-eca86bf99b3f on esx2.virten.lab to be inaccessible

2014-01-03 19:53:37 +0000: Step 2: Check for invalid/inaccessible VMs

2014-01-03 19:53:37 +0000: Step 3: Check for VMs for which VC/hostd/vmx are out of sync

Did not find VMs for which VC/hostd/vmx are out of sync

vsan.fix_renamed_vms

This command fixes virtual machines that vCenter renamed to their vmx file path after their storage became inaccessible.

It is a best effort command, as the real VM name is unknown.

usage: fix_renamed_vms vms...

This command can be used to rename some VMs which get renamed by the VC in case of storage inaccessibility. It is possible for some VMs to get renamed to vmx file path. eg. "/vmfs/volumes/vsanDatastore/foo/foo.vmx". This command will rename this VM to "foo". This is the best we can do. This VM may have been named something else but we have no way to know. In this best effort command, we simply rename it to the name of its config file (without the full path and .vmx extension ofcourse!).

vms: Path to a VirtualMachine

--help, -h: Show this message
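The renaming rule from the help text (take the config file name, drop the path and the .vmx extension) can be illustrated in a few lines of Python. This is only an illustration of the rule, not the code RVC runs. The function name is hypothetical, and decoding the %2f escapes is an assumption based on how the VM name is displayed in the RVC inventory.

```python
import posixpath
from urllib.parse import unquote


def name_from_vmx_path(vm_name):
    """Decode %2f escapes and return the config file name without path and .vmx."""
    path = unquote(vm_name).rstrip("/")
    base = posixpath.basename(path)
    root, ext = posixpath.splitext(base)
    # Only strip the extension if it really is a .vmx file name.
    return root if ext == ".vmx" else base


RENAMED = "%2fvmfs%2fvolumes%2fvsanDatastore%2fvma.virten.lab%2fvma.virten.lab.vmx"
print(name_from_vmx_path(RENAMED))
print(name_from_vmx_path("/vmfs/volumes/vsanDatastore/foo/foo.vmx"))
```

As the help text says, the result is a best guess: a VM that had a display name different from its config file name cannot be recovered this way.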

Example 1 - Fix a renamed VM

/localhost/DC> vsan.fix_renamed_vms ~/vms/%2fvmfs%2fvolumes%2fvsanDatastore%2fvma.virten.lab%2fvma.virten.lab.vmx/

Continuing this command will rename the following VMs:

%2fvmfs%2fvolumes%2fvsanDatastore%2fvma.virten.lab%2fvma.virten.lab.vmx -> vma.virten.lab

Do you want to continue [y/N]? y

Renaming...

Rename %2fvmfs%2fvolumes%2fvsanDatastore%2fvma.virten.lab%2fvma.virten.lab.vmx: success

vsan.reapply_vsan_vmknic_config

Re-enables VSAN on vmk ports. This can be useful when you have network configuration problems in your VSAN Cluster.

usage: reapply_vsan_vmknic_config [opts] host...

Unbinds and rebinds VSAN to its vmknics

host: Path to a HostSystem

--vmknic, -v <s>: Refresh a specific vmknic. default is all vmknics

--dry-run, -d: Do a dry run: Show what changes would be made

--help, -h: Show this message

Example 1 - Run the command in dry-run mode

/localhost/DC> vsan.reapply_vsan_vmknic_config -d ~esx

Host: esx1.virten.lab

Would reapply config of vmknic vmk1:

AgentGroupMulticastAddress: 224.2.3.4

AgentGroupMulticastPort: 23451

IPProtocol: IPv4

InterfaceUUID: 776ca852-6660-c6d8-c9f4-001b2193b9a4

MasterGroupMulticastAddress: 224.1.2.3

MasterGroupMulticastPort: 12345

MulticastTTL: 5

Example 2 - Unbinds and rebinds VSAN on a host

/localhost/DC> vsan.reapply_vsan_vmknic_config ~esx

Host: esx1.virten.lab

Reapplying config of vmk1:

AgentGroupMulticastAddress: 224.2.3.4

AgentGroupMulticastPort: 23451

IPProtocol: IPv4

InterfaceUUID: 776ca852-6660-c6d8-c9f4-001b2193b9a4

MasterGroupMulticastAddress: 224.1.2.3

MasterGroupMulticastPort: 12345

MulticastTTL: 5

Unbinding VSAN from vmknic vmk1 ...

Rebinding VSAN to vmknic vmk1 ...

vsan.vm_perf_stats

Displays performance statistics from a virtual machine. The following metrics are supported:

- IOPS (read/write)

- Throughput in KB/s (read/write)

- Latency in ms (read/write)

usage: vm_perf_stats [opts] vms...

VM perf stats

vms: Path to a VirtualMachine

--interval, -i <i>: Time interval to compute average over (default: 20)

--show-objects, -s: Show objects that are part of VM

--help, -h: Show this message

Example 1 - Display performance stats (Default: 20sec interval)

/localhost/DC> vsan.vm_perf_stats ~vm

2014-01-03 20:23:44 +0000: Querying info about VMs ...

2014-01-03 20:23:44 +0000: Querying VSAN objects used by the VMs ...

2014-01-03 20:23:45 +0000: Fetching stats counters once ...

2014-01-03 20:23:46 +0000: Sleeping for 20 seconds ...

2014-01-03 20:24:06 +0000: Fetching stats counters again to compute averages ...

2014-01-03 20:24:07 +0000: Got all data, computing table

+-----------+--------------+------------------+--------------+

| VM/Object | IOPS | Tput (KB/s) | Latency (ms) |

+-----------+--------------+------------------+--------------+

| win7 | 325.1r/80.6w | 20744.8r/5016.8w | 2.4r/12.2w |

+-----------+--------------+------------------+--------------+

Example 2 - Display performance stats for all VM objects with an interval of 5 seconds.

/localhost/DC> vsan.vm_perf_stats ~vm --show-objects --interval=5

2014-01-03 20:29:08 +0000: Querying info about VMs ...

2014-01-03 20:29:08 +0000: Querying VSAN objects used by the VMs ...

2014-01-03 20:29:09 +0000: Fetching stats counters once ...

2014-01-03 20:29:09 +0000: Sleeping for 5 seconds ...

2014-01-03 20:29:14 +0000: Fetching stats counters again to compute averages ...

2014-01-03 20:29:15 +0000: Got all data, computing table

+-----------------------------------------------------+-------------+----------------+--------------+

| VM/Object | IOPS | Tput (KB/s) | Latency (ms) |

+-----------------------------------------------------+-------------+----------------+--------------+

| win7 | | | |

| ddc4a952-c91c-a777-771f-001b2193b9a4/win7.vmx | 0.0r/0.3w | 0.0r/0.2w | 0.0r/26.1w |

| ddc4a952-c91c-a777-771f-001b2193b9a4/win7.vmdk | 166.6r/3.9w | 10597.2r/13.2w | 5.2r/23.5w |

| ddc4a952-c91c-a777-771f-001b2193b9a4/win7_1.vmdk | 0.0r/72.6w | 0.0r/4583.7w | 0.0r/13.1w |

+-----------------------------------------------------+-------------+----------------+--------------+
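Each cell in the table combines the read and the write value, such as 325.1r/80.6w. If you want to process the numbers further, a row can be split into separate values as in the following Python sketch. The names CELL and parse_row are hypothetical, and the sketch assumes one table row per line of captured output.

```python
import re

# A cell such as "325.1r/80.6w": read value, then write value.
CELL = re.compile(r'([\d.]+)r/([\d.]+)w')


def parse_row(line):
    """Return name plus (read, write) tuples for a data row, or None for other lines."""
    cells = [c.strip() for c in line.strip().strip("|").split("|")]
    matches = [CELL.fullmatch(c) for c in cells[1:]]
    if len(matches) != 3 or not all(matches):
        return None  # border, header, or a VM row without values
    iops, tput, latency = (tuple(float(x) for x in m.groups()) for m in matches)
    return {"name": cells[0], "iops": iops, "tput_kbs": tput, "latency_ms": latency}


ROW = parse_row("| win7 | 325.1r/80.6w | 20744.8r/5016.8w | 2.4r/12.2w |")
print(ROW["name"], "read IOPS:", ROW["iops"][0], "write latency (ms):", ROW["latency_ms"][1])
```

Border lines, the header, and VM rows without values (as in the --show-objects output) return None, so they can be filtered out easily.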

vsan.clear_disks_cache

Clears cached disk information. $disksCache is an internal variable. This command is usually not required, but it might help if you encounter problems.

usage: clear_disks_cache

Clear cached disks information

--help, -h: Show this message

Example 1 - Clear the disks cache (no output)

/localhost/DC> vsan.clear_disks_cache

vsan.lldpnetmap

Displays the mapping between physical network interfaces and physical switches by using the Link Layer Discovery Protocol (LLDP).

usage: lldpnetmap hosts_and_clusters...

Gather LLDP mapping information from a set of hosts

hosts_and_clusters: Path to a HostSystem or ClusterComputeResource

--help, -h: Show this message

Example 1 - Display LLDP information from all hosts

/localhost/DC> vsan.lldpnetmap ~cluster

2014-12-30 18:55:40 +0000: This operation will take 30-60 seconds ...

+-------------------+--------------+

| Host | LLDP info |

+-------------------+--------------+

| esx2.virten.local | s320: vmnic1 |

| esx3.virten.local | s320: vmnic1 |

| esx1.virten.local | s320: vmnic1 |

+-------------------+--------------+

vsan.recover_spbm

This command recovers Storage Policy Based Management associations.

usage: recover_spbm [opts] cluster_or_host

SPBM Recovery

cluster_or_host: Path to a ClusterComputeResource or HostSystem

--dry-run, -d: Don't take any automated actions

--force, -f: Answer all question with 'yes'

--help, -h: Show this message

Example 1 - Recover SPBM profile association

/localhost/DC> vsan.recover_spbm -f ~cluster

2014-12-30 19:02:53 +0000: Fetching Host info

2014-12-30 19:02:53 +0000: Fetching Datastore info

2014-12-30 19:02:53 +0000: Fetching VM properties

2014-12-30 19:02:53 +0000: Fetching policies used on VSAN from CMMDS

2014-12-30 19:02:53 +0000: Fetching SPBM profiles

2014-12-30 19:02:54 +0000: Fetching VM <-> SPBM profile association

2014-12-30 19:02:54 +0000: Computing which VMs do not have a SPBM Profile ...

2014-12-30 19:02:54 +0000: Fetching additional info about some VMs

2014-12-30 19:02:54 +0000: Got all info, computing after 0.66 sec

2014-12-30 19:02:54 +0000: Done computing

SPBM Profiles used by VSAN:

+---------------------+---------------------------+

| SPBM ID | policy |

+---------------------+---------------------------+

| Not managed by SPBM | proportionalCapacity: 100 |

| | hostFailuresToTolerate: 1 |

+---------------------+---------------------------+

| Not managed by SPBM | hostFailuresToTolerate: 1 |

+---------------------+---------------------------+

vsan.support_information

Use this command to gather information for support cases at VMware. This command runs several other commands:

- vsan.cluster_info VSAN-Cluster

- vsan.host_info

- vsan.vm_object_info

- vsan.vm_object_info

- vsan.disks_info

- vsan.disks_stats

- vsan.check_limits VSAN-Cluster

- vsan.check_state VSAN-Cluster

- vsan.check_state VSAN-Cluster

- vsan.lldpnetmap VSAN-Cluster

- vsan.obj_status_report VSAN-Cluster

- vsan.resync_dashboard VSAN-Cluster

- vsan.disk_object_info VSAN-Cluster, disk_uuids

usage: support_information dc_or_clust_conn

Command to collect vsan support information

dc_or_clust_conn: Path to a RbVmomi::VIM or Datacenter or ClusterComputeResource

--help, -h: Show this message

Example 1 - Gather Support Information

/localhost/DC> vsan.support_information ~

VMware virtual center DAE11A48-50D6-4D6F-91F5-1E1D349A6917

*** command> vsan.support_information DC

************* BEGIN Support info for datacenter DC *************

************* BEGIN Support info for cluster VSAN-Cluster *************

*** command>vsan.cluster_info VSAN-Cluster

*** command>vsan.host_info

*** command>vsan.vm_object_info

*** command>vsan.vm_object_info

*** command>vsan.disks_info

*** command>vsan.disks_stats

*** command>vsan.check_limits VSAN-Cluster

*** command>vsan.check_state VSAN-Cluster

*** command>vsan.check_state VSAN-Cluster

*** command>vsan.lldpnetmap VSAN-Cluster

*** command>vsan.obj_status_report VSAN-Cluster

*** command>vsan.resync_dashboard VSAN-Cluster

*** command>vsan.disk_object_info VSAN-Cluster, disk_uuids

************* END Support info for cluster VSAN-Cluster *************

************* END Support info for datacenter DC *************

VSAN Observer

Basics

All commands can be configured with an interval at which Observer collects stats and with a maximum runtime. The default is to collect stats every 60 seconds and to run for 2 hours. Keep in mind that VSAN Observer runs from memory when adjusting runtime settings. The options to change the defaults for interval and runtime are -i and -m.

usage: observer [opts] cluster_or_host

Run observer

cluster_or_host: Path to a ClusterComputeResource or HostSystem

--filename, -f <s>: Output file path

--port, -p <i>: Port on which to run webserver (default: 8010)

--run-webserver, -r: Run a webserver to view live stats

--force, -o: Apply force

--keep-observation-in-memory, -k: Keep observed stats in memory even when commands ends. Allows to resume later

--generate-html-bundle, -g <s>: Generates an HTML bundle after completion. Pass a location

--interval, -i <i>: Interval (in sec) in which to collect stats (default: 60)

--max-runtime, -m <i>: Maximum number of hours to collect stats. Caps memory usage. (Default: 2)

--forever, -e <s>: Runs until stopped. Every --max-runtime intervals retires snapshot to disk. Pass a location

--no-https, -n: Don't use HTTPS and don't require login. Warning: Insecure

--max-diskspace-gb, -a <i>: Maximum disk space (in GB) to use in forever mode. Deletes old data periodically (default: 5)

--help, -h: Show this message
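
To get a feeling for what the interval and runtime settings mean for the amount of collected data, here is a small back-of-the-envelope calculation in Python. It is only a sketch: the function names are made up for illustration, the per-snapshot size is simply the sum of the JSON sizes reported by the sample collection further down in this guide (33 + 461 + 409 + 472 + 349 KB), and the real memory footprint of Observer will differ from the raw JSON volume.

```python
def snapshot_count(interval_sec=60, max_runtime_hours=2):
    """Number of collection cycles that fit into the configured runtime.

    The defaults mirror the help text: --interval 60, --max-runtime 2.
    """
    return int(max_runtime_hours * 3600 // interval_sec)


def raw_json_mb(snapshots, kb_per_snapshot):
    """Raw JSON volume in MB for a number of snapshots (not actual RAM usage)."""
    return snapshots * kb_per_snapshot / 1024


# Assumption: per-snapshot size taken from the 4-node sample run in this guide.
SAMPLE_KB_PER_SNAPSHOT = 33 + 461 + 409 + 472 + 349

for interval, hours in [(60, 2), (30, 2), (60, 8)]:
    runs = snapshot_count(interval, hours)
    volume = raw_json_mb(runs, SAMPLE_KB_PER_SNAPSHOT)
    print(f"-i {interval} -m {hours}: {runs} snapshots, about {volume:.0f} MB of raw JSON")
```

With the defaults this works out to 120 snapshots. Halving the interval or doubling the runtime doubles that number, which is why both settings should be adjusted with memory in mind.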

Dump Data in JSON

VSAN Observer queries stats from each ESXi host in JSON format. That output can be written to a file for use by other tools. In each collection interval, stats are gathered from all hosts and written to a new line of the file.
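
Because every collection ends up on its own line, the file can be processed one snapshot at a time instead of loading everything at once. The following Python sketch shows the idea. It assumes that each line holds one complete JSON object; the function names are my own and not part of RVC.

```python
import json
from typing import Iterator, List


def iter_snapshots(path: str) -> Iterator[dict]:
    """Yield one parsed snapshot per non-empty line of the dump file."""
    with open(path, encoding="utf-8") as handle:
        for line in handle:
            line = line.strip()
            if line:
                yield json.loads(line)


def summarize(path: str) -> List[List[str]]:
    """Return the sorted top-level keys of every snapshot in the file."""
    return [sorted(snapshot) for snapshot in iter_snapshots(path)]
```

Calling summarize("/tmp/observer.json") on a dump written with --filename would return one list of top-level keys per collection, which is a quick way to see how many snapshots a file contains before digging deeper.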

Example: I run a single collection and stop it with CTRL+C after the first pass:

/localhost/DC> vsan.observer ~cluster --filename /tmp/observer.json
Press <Ctrl>+<C> to stop observing at any point ...
2014-01-09 20:15:56 +0000: Collect one inventory snapshot
Query VM properties: 0.16 sec
Query Stats on esx3.virten.lab: 0.65 sec (on ESX: 0.16, json size: 33KB)
Query Stats on esx2.virten.lab: 2.05 sec (on ESX: 0.51, json size: 461KB)
Query Stats on esx4.virten.lab: 2.19 sec (on ESX: 1.25, json size: 409KB)
Query Stats on esx1.virten.lab: 2.13 sec (on ESX: 0.50, json size: 472KB)
Query CMMDS from esx1.virten.lab: 1.84 sec (json size: 349KB)
2014-01-09 20:16:18 +0000: Collection took 21.98s, sleeping for 38.02s
2014-01-09 20:16:18 +0000: Press <Ctrl>+<C> to stop observing
^C2014-01-09 20:16:44 +0000: Execution interrupted, wrapping up ...

To format the JSON output, I use python -mjson.tool:

vc:~ # cat /tmp/observer.json | python -mjson.tool |less

The output looks like this, but be warned, there is a lot of data. One collection of my small 4-node cluster generates 110,000 lines:

[...]
"vsi": {
    "esx1.virten.lab": {
        "cbrc": {
            "dcacheStats": null
        },
        "cbrc-taken": 1389298607.2460959,
        "disks-taken": 1389298607.2457359,
        "disks.stats": {
            "SanDisk_SDSSDP064G": {
                "atsOps": 0,
                "blocksCloneRead": 0,
                "blocksCloneWrite": 0,
                "blocksDeleted": 0,
                "blocksRead": 36723527,
                "blocksWritten": 50546140,
                "blocksZeroed": 0,
                "cloneReadOps": 0,
                "cloneWriteOps": 0,
                "commands": 3558146,
                "dAvgLatency": 3748,
                "dAvgMoving": 1288,
                "deleteOps": 0,
                "failedAtsOps": 0,
[...]

Generate HTML bundle

To visualize this large amount of data, VSAN Observer can generate an HTML bundle after completion:

/localhost/DC> vsan.observer ~cluster --generate-html-bundle /tmp/
Press <Ctrl>+<C> to stop observing at any point ...
2014-01-09 20:37:44 +0000: Collect one inventory snapshot
Query VM properties: 0.17 sec
Query Stats on esx3.virten.lab: 0.50 sec (on ESX: 0.16, json size: 33KB)
Query Stats on esx2.virten.lab: 2.02 sec (on ESX: 0.51, json size: 461KB)
Query Stats on esx1.virten.lab: 2.15 sec (on ESX: 0.54, json size: 472KB)
Query Stats on esx4.virten.lab: 2.99 sec (on ESX: 2.28, json size: 409KB)
Query CMMDS from esx1.virten.lab: 1.84 sec (json size: 349KB)
2014-01-09 20:38:06 +0000: Live-Processing inventory snapshot
2014-01-09 20:38:06 +0000: Collection took 21.93s, sleeping for 38.07s
2014-01-09 20:38:06 +0000: Press <Ctrl>+<C> to stop observing
^C2014-01-09 20:38:12 +0000: Execution interrupted, wrapping up ...
2014-01-09 20:38:12 +0000: Writing out an HTML bundle to /tmp/vsan-observer-2014-01-09.20-38-12.tar.gz ...
2014-01-09 20:38:12 +0000: Writing statsdump for system mem ...
2014-01-09 20:38:12 +0000: Writing statsdump for pnics ...
2014-01-09 20:38:12 +0000: Writing statsdump for slabs ...
2014-01-09 20:38:12 +0000: Writing statsdump for heaps ...
2014-01-09 20:38:12 +0000: Writing statsdump for pcpus ...
2014-01-09 20:38:12 +0000: Writing statsdump for ssds ...
2014-01-09 20:38:12 +0000: Writing statsdump for worldlets ...
2014-01-09 20:38:12 +0000: Writing statsdump for helper worlds ...
2014-01-09 20:38:12 +0000: Writing statsdump for DOM ...
2014-01-09 20:38:12 +0000: Writing statsdump for LSOM components ...
2014-01-09 20:38:12 +0000: Writing statsdump for LSOM hosts ...
2014-01-09 20:38:12 +0000: Writing statsdump for PLOG disks ...
2014-01-09 20:38:12 +0000: Writing statsdump for LSOM disks ...
2014-01-09 20:38:12 +0000: Writing statsdump for CBRC ...
2014-01-09 20:38:12 +0000: Writing statsdump for VMs ...
2014-01-09 20:38:12 +0000: Writing statsdump for Physical disks ...
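
The bundle is written as a .tar.gz archive, so it has to be unpacked before it can be opened in a browser. A plain tar command does the job; if you prefer to script it, the following Python sketch does the same. The function name and the target directory are my own choices, and I make no assumption about which files the archive contains, so the sketch simply returns the member names.

```python
import tarfile
from pathlib import Path
from typing import List


def unpack_bundle(bundle: str, target_dir: str) -> List[str]:
    """Extract a .tar.gz bundle into target_dir and return the member names."""
    target = Path(target_dir).resolve()
    target.mkdir(parents=True, exist_ok=True)
    with tarfile.open(bundle, "r:gz") as archive:
        names = archive.getnames()
        for name in names:
            # Refuse members that would be written outside the target directory.
            destination = (target / name).resolve()
            if destination != target and target not in destination.parents:
                raise ValueError(f"unsafe path in archive: {name}")
        archive.extractall(target)
    return names
```

For the run above, the call would be unpack_bundle("/tmp/vsan-observer-2014-01-09.20-38-12.tar.gz", "/tmp/observer-bundle"), where the second path is just an example location.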

VSAN Observer Live View

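VSAN Observer also includes a built-in web server that lets you view the same output as the HTML bundle, but live. The --no-https option in the help above suggests that this release serves HTTPS unless told otherwise, so try https:// if http:// does not respond. Just start it and open http://<vcenter>:8010 in your browser: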

/localhost/DC> vsan.observer ~cluster --run-webserver --force
[2014-01-09 20:44:08] INFO WEBrick 1.3.1
[2014-01-09 20:44:08] INFO ruby 1.9.2 (2011-07-09) [x86_64-linux]
[2014-01-09 20:44:08] WARN TCPServer Error: Address already in use - bind(2)
Press <Ctrl>+<C> to stop observing at any point ...
2014-01-09 20:44:08 +0000: Collect one inventory snapshot
[2014-01-09 20:44:08] INFO WEBrick::HTTPServer#start: pid=26029 port=8010
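
If the browser cannot reach the page, it helps to check first whether anything is listening on the Observer port at all. The sketch below is a generic TCP check in Python, not something RVC provides; the function name is made up, and 8010 is the default port from the help text.

```python
import socket


def port_is_open(host: str, port: int = 8010, timeout: float = 2.0) -> bool:
    """Return True if a TCP connection to host:port can be established."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts and unresolvable host names.
        return False
```

Run it from the machine where the browser is, for example port_is_open("vcenter.example.local"), with the placeholder host name replaced by your own. A result of False points to a firewall or to an Observer that is not running, rather than to a browser problem.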

The web interface comes up with 8 views. I am going to cover the most useful stats for now:

- VSAN Client

- VSAN Disks

- VSAN Disks (deep-dive)

- PCPU

- Memory

- Distribution

- DOM Owner

- VMs

VSAN Client

This view shows VSAN statistics from the viewpoint of the host. It represents the performance as seen by the VMs running on the hosts for which statistics are shown. You should see balanced statistics in this view. To balance the workload, you can try to move VMs to other ESXi hosts in the cluster with vMotion. This might help, but it does not always solve the problem, because a performance issue can be caused by any disk on any host in the VSAN cluster.

VSAN Disks

This view shows aggregated statistics from all disks in a host. This can help to identify the ESXi host that causes contention.

VSAN Disks (deep-dive)

This view shows detailed information about the physical disks on each host. This helps to narrow down whether a single disk is causing contention. You can view latency and IOPS for all disks (SSD and MD) as well as special metrics like RC hit rate and write buffer load.
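
Per-disk counters are also part of the JSON dump described earlier, so they can be pulled out with a script. The Python sketch below assumes the nesting shown in the JSON excerpt above ("vsi", then host name, then "disks.stats", then disk name). Since the excerpt does not show where "vsi" sits in the full document, the sketch searches for that key recursively. The function names are my own, and the excerpt does not state the unit of dAvgLatency, so the value is returned as-is.

```python
from typing import Any, Dict, Optional, Tuple


def find_key(node: Any, key: str) -> Optional[Any]:
    """Depth-first search for the first dictionary entry named key."""
    if isinstance(node, dict):
        if key in node:
            return node[key]
        children = list(node.values())
    elif isinstance(node, list):
        children = node
    else:
        return None
    for child in children:
        found = find_key(child, key)
        if found is not None:
            return found
    return None


def disk_latencies(snapshot: dict) -> Dict[Tuple[str, str], Any]:
    """Map (host, disk) to the raw dAvgLatency counter of one snapshot."""
    result = {}
    vsi = find_key(snapshot, "vsi") or {}
    for host, host_stats in vsi.items():
        if not isinstance(host_stats, dict):
            continue
        disks = host_stats.get("disks.stats") or {}
        for disk, counters in disks.items():
            if isinstance(counters, dict) and "dAvgLatency" in counters:
                result[(host, disk)] = counters["dAvgLatency"]
    return result
```

Fed with a parsed snapshot from the dump file, this returns one entry per host and disk, which makes it easy to spot a single disk whose counter stands out from the rest.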

VMs

This view lets you analyse individual VMs. You can drill down into each virtual disk and see where it is physically located. You can also see performance metrics for its objects and components.


I noticed that after removing a disk group from a host and re-adding it, vsan.disks_stats now shows N/A in the DisplayName and Host columns. VMware support instructed me to do this because of invalid components. It did clean up the error, but I now have N/A for DisplayName and Host. VSAN seems to be in good standing. Is there a way to add this information back for the vsan.disks_stats command? Thanks.