Intel's Coffee Lake-based 8th Gen Bean Canyon NUC is an ideal candidate for running VMware ESXi. NUCs are not officially supported by VMware, but they are widespread in homelabs and test environments. They are small, silent, transportable and have very low power consumption, which makes them great homelab servers. The Bean Canyon is available with i3, i5, and i7 CPUs. It's the first series in which the i5 is also equipped with a quad-core CPU, so both the i5 and the i7 are ideal candidates for ESXi.

- NUC8i7BEH/NUC8i7BEK (Intel Core i7-8559U - 4 Core, up to 4.5 GHz)

- NUC8i5BEH/NUC8i5BEK (Intel Core i5-8259U - 4 Core, up to 3.8 GHz)

- NUC8i3BEH/NUC8i3BEK (Intel Core i3-8109U - 2 Core, up to 3.6 GHz)

Features

- 8th Gen Intel Coffee Lake CPU

- Up to 64GB of DDR4 SO-DIMM memory (32GB is officially supported, but 64GB works without problems)

- Available with and without 2.5″ HDD slot

- M.2 slot for SATA3 or PCIe X4 Gen3 NVMe

- External Micro SDXC Slot

- Intel I219-V Gigabit Network Adapter

- Thunderbolt 3 (USB-C)

- USB 3.1 Gen2

- Intel Optane Memory Ready

Comparison with predecessor (Baby Canyon)

- Quad-Core CPU in i5 and i7 models

- Uniform TDP (28W)

- i3 models with Thunderbolt 3 support

To get an ESXi Host installed you additionally need:

- Memory (1.2V DDR4-2400 SODIMM)

- M.2 SSD (22×42 or 22×80), 2.5″ HDD or USB-Stick

Model comparison

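| Model (2.5" slot) | NUC8i7BEH | NUC8i5BEH | NUC8i3BEH |
|---|---|---|---|
| Model (no 2.5" slot) | NUC8i7BEK | NUC8i5BEK | NUC8i3BEK |
| Architecture | Coffee Lake (14 nm) | Coffee Lake (14 nm) | Coffee Lake (14 nm) |
| CPU | Intel Core i7-8559U | Intel Core i5-8259U | Intel Core i3-8109U |
| Base Frequency | 2.7 GHz | 2.3 GHz | 3.0 GHz |
| Max Frequency | 4.5 GHz | 3.8 GHz | 3.6 GHz |
| Cores | 4 (8 Threads) | 4 (8 Threads) | 2 (4 Threads) |
| TDP | 28 W | 28 W | 28 W |
| TDP-down | 20 W | 20 W | 20 W |
| Memory Type | 2x 260-pin 1.2 V DDR4 2400 MHz SO-DIMM | 2x 260-pin 1.2 V DDR4 2400 MHz SO-DIMM | 2x 260-pin 1.2 V DDR4 2400 MHz SO-DIMM |
| Max Memory | 64 GB (32 GB officially supported) | 64 GB (32 GB officially supported) | 64 GB (32 GB officially supported) |
| USB Ports | 2x USB 3.1 Gen2 (front panel), 3x USB 3.1 Gen2 (back panel), 2x USB 2.0 (internal header) | 2x USB 3.1 Gen2 (front panel), 3x USB 3.1 Gen2 (back panel), 2x USB 2.0 (internal header) | 2x USB 3.1 Gen2 (front panel), 3x USB 3.1 Gen2 (back panel), 2x USB 2.0 (internal header) |
| Thunderbolt 3 Port (40Gbps) | Yes | Yes | Yes |
| USB 3.1 Gen 2 Port (10Gbps) | Yes | Yes | Yes |
| Storage | M.2 22x42/80 slot for SATA3 or PCIe X4 Gen3 NVMe or AHCI SSD; SATA3 2.5" HDD/SSD; SDXC slot | M.2 22x42/80 slot for SATA3 or PCIe X4 Gen3 NVMe or AHCI SSD; SATA3 2.5" HDD/SSD; SDXC slot | M.2 22x42/80 slot for SATA3 or PCIe X4 Gen3 NVMe or AHCI SSD; SATA3 2.5" HDD/SSD; SDXC slot |
| LAN | Intel I219-V Gigabit LAN | Intel I219-V Gigabit LAN | Intel I219-V Gigabit LAN |
| Intel VT-x | Yes | Yes | Yes |
| Intel vPro | No | No | No |
| Available | Q3 2018 | Q3 2018 | Q3 2018 |
| Price | $600 | $450 | $320 |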

HCL and VMware ESXi Support

Intel NUCs are not supported by VMware and are not listed in the HCL. Not supported means that you can't open Service Requests with VMware when you have a problem. It does not mean that it won't work. Some components used in the NUC are listed in the IO Devices HCL.

ESXi runs out of the box starting with the following releases:

- ESXi 6.5 Update 2 (Build 8294253) released in May 2018

- ESXi 6.7 Update 1 (Build 10302608) released in October 2018

To clarify, the system is not supported by VMware, so do not use it in a production environment. I cannot guarantee that it will run stably. As a home lab or a small home server, it should be fine.

Network (Intel I219-V) - "No Network Adapters" Error

The network adapter does not work out of the box with ESXi versions older than 6.5 U2 or 6.7 U1. If you need an older ESXi version, you have to inject the ne1000 driver manually into the installer image (ESXi 6.5: 0.8.3-7vmw.650.2.50.8294253 / ESXi 6.7: 0.8.4-1vmw.670.1.28.10302608).
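As a rough illustration of that rule, the following Python sketch decides whether an installer image needs the ne1000 driver injected. The build thresholds are the ones listed in this article; the function name and the sample build number are made up for the example, and releases other than 6.5 and 6.7 are deliberately not covered.

```python
# First builds that detect the I219-V out of the box, as listed in this article.
MIN_BUILD = {"6.5": 8294253, "6.7": 10302608}


def needs_ne1000_injection(release: str, build: int) -> bool:
    """Return True if the image is older than the first working build.

    `release` is "6.5" or "6.7"; other releases are not covered here.
    """
    if release not in MIN_BUILD:
        raise ValueError(f"no threshold known for ESXi {release}")
    return build < MIN_BUILD[release]


if __name__ == "__main__":
    # 8169922 is used here as an example of a 6.7 build older than Update 1.
    print(needs_ne1000_injection("6.7", 8169922))
    print(needs_ne1000_injection("6.5", 8294253))
```

The check only compares build numbers; it does not inspect the image itself.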

SD Card

8th Gen NUCs are equipped with a Micro SDXC Slot. Unfortunately, there is no driver available for ESXi at the moment so it's not possible to use the SD Card slot.

Tested ESXi Versions

- VMware ESXi 6.5 U2

- VMware ESXi 6.5 U3

- VMware ESXi 6.7 U1

- VMware ESXi 6.7 U2

- VMware ESXi 6.7 U3

Delivery and assembly

The box contains a short description of how to open the NUC and assemble the components. The system is a little heavier than it looks and has a high build quality. The top surface is very scratch-sensitive, so be careful with it.

The installation is very simple. Remove the 4 screws on the bottom, then remove the lid and the 2.5" drive holder. Opening the NUC and installing memory, an NVMe SSD module and a 2.5" drive takes about 5 minutes.

Installation

No customization is required to install ESXi 6.5U2 / ESXi 6.7U1 or later on the 8th Gen Bean Canyon NUC. I recommend using the latest image provided by VMware to install ESXi:

- VMware vSphere Hypervisor (ESXi) 6.7U3 [Release Notes] [Download]

- VMware vSphere Hypervisor (ESXi) 6.5U3 [Release Notes] [Download]

The simplest way to install ESXi is by using the ISO and Rufus to create a bootable ESXi Installer USB Flash Drive. If you don't have access to ESXi Binaries you can sign up for a free version.

There is a problem with the ESXi installer when UEFI boot is enabled. To work around the issue, configure the NUC to boot in legacy mode. This step is required for the installation only and can be changed back after.

- Power on the NUC

- Press F2 to enter BIOS

- Disable Secure Boot (Advanced > Boot > Secure Boot)

- Enable Legacy Boot (Boot Order > Legacy > Legacy Boot)

- Press F10 to save and exit

Now you should be able to proceed with the ESXi installation. The installer might seem to be stuck for about 2 minutes at "nfs4client failed to load"; just be patient. When the installation is finished, you can disable Legacy Boot.

Warning: If you've installed ESXi to an NVMe drive, you have to disable Legacy boot as NVMe can only boot with UEFI.

Performance

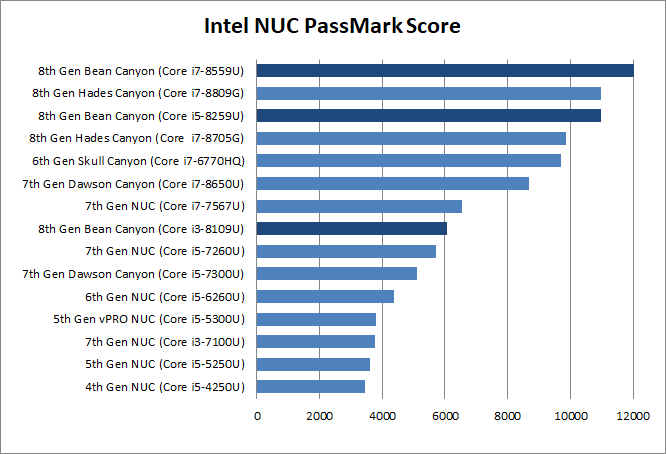

The performance of a single NUC is sufficient to run a small home lab including a vCenter Server and 3 ESXi hosts. It's a great system to take along for demonstration purposes. The i7 is even faster than the Hades Canyon and the i5 is, thanks to its quad-core CPU, on a par.

The following chart is a comparison based on the NUCs PassMark score:

Power consumption

NUCs have very low power consumption. The NUC8i7BEH with an M.2 NVMe draws about 25W. With that, the average operating costs are at about 5-6 Euros per month:

25 W * 24 h * 30 days = 18 kWh; 18 kWh * 0.30 EUR/kWh = 5.40 EUR

Consumption measured with Homematic HM-ES-PMSw1
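The same arithmetic as a small Python sketch, so you can plug in your own wattage and electricity price. The function names are made up for this example; the 30-day month and the 0.30 EUR/kWh price are the assumptions used in the calculation above.

```python
def monthly_energy_kwh(watts: float, days: int = 30) -> float:
    """Energy used by a device running 24 hours a day, in kWh."""
    return watts * 24 * days / 1000


def monthly_cost_eur(watts: float, eur_per_kwh: float = 0.30, days: int = 30) -> float:
    """Monthly electricity cost in EUR for a constant power draw."""
    return monthly_energy_kwh(watts, days) * eur_per_kwh


if __name__ == "__main__":
    # 25 W is the draw measured for the NUC8i7BEH with an M.2 NVMe.
    print(f"{monthly_energy_kwh(25):.1f} kWh")
    print(f"{monthly_cost_eur(25):.2f} EUR")
```

Real consumption varies with load, so treat the result as an average rather than a fixed bill.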

Hi, I also use this as a homelab and it's great.

Have you encountered this problem?

smartd: [warn] t10.NVMe____Samsung_SSD_970_EVO_Plus_250GB__________505FB19157382500: REALLOCATED SECTOR CT below threshold (0 < 90)

esxcli storage core device smart get -d t10.NVMe____Samsung_SSD_970_EVO_Plus_250GB__________505FB19157382500

| Parameter | Value | Threshold | Worst |
|---|---|---|---|
| Health Status | OK | N/A | N/A |
| Media Wearout Indicator | N/A | N/A | N/A |
| Write Error Count | N/A | N/A | N/A |
| Read Error Count | N/A | N/A | N/A |
| Power-on Hours | 4158 | N/A | N/A |
| Power Cycle Count | 31 | N/A | N/A |
| Reallocated Sector Count | 0 | 90 | N/A |
| Raw Read Error Rate | N/A | N/A | N/A |
| Drive Temperature | 35 | 85 | N/A |
| Driver Rated Max Temperature | N/A | N/A | N/A |
| Write Sectors TOT Count | N/A | N/A | N/A |
| Read Sectors TOT Count | N/A | N/A | N/A |
| Initial Bad Block Count | N/A | N/A | N/A |

Your SSD has a problem. Can you check the SMART stats with this command? (It will tell you the TBW):

# esxcli nvme device log smart get -A vmhba0

Hi, thank you for your reply.

It shows only 3 TBW / 3640 GBW. Does that mean the SSD will stop working once I have written 3 TB?

The rated TBW of the Samsung SSD 970 EVO Plus 250GB is 150 TB. I bought it less than a year ago, use it only for ESXi, and don't use it heavily. I am confused about this.

[root@localhost:~] i=$(esxcli nvme device log smart get -A vmhba1 |grep Written | cut -d ":" -f 2); echo $(($(printf "%d\n"$i)*512000/1099511627776)) TBW

3 TBW

[root@localhost:~] i=$(esxcli nvme device log smart get -A vmhba1 |grep Written | cut -d ":" -f 2); echo $(($(printf "%d\n"$i)*512000/1073741824)) GBW

3640 GBW

I read your blog post about TBW and I understand that this 3 TBW/3640 GBW is the amount of data that has been written. Thank you
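For reference, the conversion done by the shell one-liners above can be sketched in Python. It uses the same factor of 512000 bytes per data unit and the same binary divisors. The field name "Data Units Written" and the sample value are assumptions for illustration, not captured output.

```python
BYTES_PER_DATA_UNIT = 512 * 1000  # same 512000 factor as the shell one-liner


def parse_data_units(line: str) -> int:
    """Parse a 'name: value' line; the value may be hex (0x...) or decimal."""
    value = line.split(":", 1)[1].strip()
    return int(value, 0)


def written_gib_tib(data_units: int):
    """Return (GiB, TiB) written, truncated like the shell integer division."""
    total_bytes = data_units * BYTES_PER_DATA_UNIT
    return total_bytes // 2**30, total_bytes // 2**40


if __name__ == "__main__":
    # Hypothetical sample line, chosen to land near the figures in this thread.
    sample = "Data Units Written: 0x747c50"
    gib, tib = written_gib_tib(parse_data_units(sample))
    print(f"{gib} GBW, {tib} TBW")
```

The result is the amount of data written so far, not the endurance rating of the drive.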

I also checked the explanation of S.M.A.R.T.: the Reallocated Sector Count should be 0 if the disk is in good condition. The current value is 0, but ESXi reports a fault because the value is below the threshold (0 < 90).

While the micro SDXC reader may not be detected by ESXi, it is possible to pass the reader through to a guest VM.

I recently got hold of a Hades Canyon, installed BIOS 58 and installed ESXi 6.7.

Only one network adapter (vmnic1) has a status of connected; the second adapter (vmnic0) shows as 'notconnected'. I have swapped the cables to rule out a cabling issue. The NIC lights are flashing green at both ends. I have attached a screenshot of the ESXi 'Configure Management Network' menu and the view from the ESXi client, which permanently shows link down.

Can anyone advise on the possible issue and next troubleshooting steps, please?

Yes. Here: https://www.virten.net/2019/02/esxi-on-8th-gen-intel-nuc-kaby-lake-g-hades-canyon/

Disable auto-negotiation: Host Client > Networking > Physical NICs > vmnic0 > Edit settings and set the speed to 1000Mbps, full duplex.

After upgrading to ESXi 7.0U1-16850804, the network card is no longer detected.

You have to keep the old ne1000 driver. Instructions: https://www.virten.net/2020/10/will-esxi-7-0-update-1-run-on-intel-nuc/

Can this be used as a nested lab? I would love to have a test environment with 1 vCenter and 2-3 ESXi nodes, like Nakivo suggests. Nakivo reckons 16-32GB should be fine. The downside is the performance compared to bare metal; on the upside, compatibility issues can be avoided.

https://www.nakivo.com/blog/how-to-build-a-vsphere-7-0-home-lab/

Sure. You can install up to 64GB in the NUC and run a vCenter and 3 ESXi hosts without any problems.

I spent today messing with my NUC8i7BEH.

I finally managed to pass the NUC's GPU through to a Windows 10 VM by editing the following advanced configuration settings of the VM:

hypervisor.cpuid.v0 = FALSE

pciPassthru0.msiEnabled = FALSE

svga.present = false

I personally use a NUC8i5 together with a Synology (Qunhui) NAS, with Linux installed on the NUC as the server. The NUC also serves as the NAS's file cache and holds a separate backup of important data (after all, the SSD reads fast).

Files are written to the NUC first and then sorted onto the NAS.

ESXi 7.0u3f now works straight out of the box with the built-in NIC, see https://williamlam.com/2022/07/quick-tip-esxi-7-0-update-3f-now-includes-all-intel-i219-devices-from-community-networking-driver-fling.html