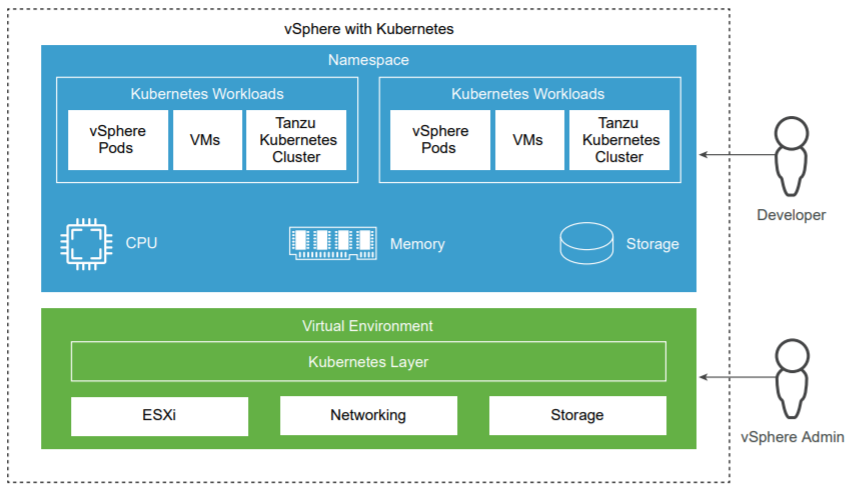

With the release of vSphere 7.0, the integration of Kubernetes, formerly known as Project Pacific, has been introduced. vSphere with Kubernetes enables you to directly run containers on your ESXi cluster. This article explains how to get your cluster enabled for the so-called "Workload Management".

- Required Components

- License Considerations

- NSX-T Installation and Configuration

- Prepare the vCenter for Kubernetes

- Cluster Compatibility Troubleshooting

- Enable Workload Management / Kubernetes

The article covers evaluation options, licensing options, troubleshooting, and the initial configuration.

Required Components

To get started with vSphere Integrated Kubernetes, you need the following components:

- 3x ESXi 7.0

- 1x vCenter Server 7.0

- 1x NSX-T 3.0 Manager

- 1x NSX-T Edge Appliance (Large Deployment!)

If you do not have 3 ESXi hosts, you can also deploy everything in a virtual environment.

Be aware that even the minimum setup is very resource-intensive. For physical setups, you should have 3 hosts with at least 6 cores and 64GB memory. If you want to run everything virtual you should have a host with 128GB memory.

License Considerations

The only supported option to enable vSphere with Kubernetes is a VMware Cloud Foundation (VCF) 4.0 license. If you do not have a VCF license, you can get 365-day evaluation licenses by purchasing the VMUG Advantage package, which costs $200.00 USD/year.

The third option is VMware's 60-day evaluation licenses. You can install ESXi and vCenter without a license to activate a fully-featured 60-day evaluation. If you have vExpert or any other licenses, do not activate ESXi with Enterprise Plus, as that removes the "Workload Management" feature. Feel free to activate the vCenter license. NSX-T is a special case: instead of giving you 60 days after every installation, it requires you to sign up for an evaluation license that runs for 60 days from the day you request it. See here for more details on getting an NSX-T Eval. Without an NSX-T evaluation license (NSX-T is delivered with only an Endpoint license), you can't create a T0 Gateway, which is required to enable Workload Management.

License Options Summary:

- VMware Cloud Foundation 4.0 License

- VMUG Advantage (365-day Evaluation)

- 60-day Evaluation (Just Install ESXi/vCenter + Subscribe for NSX-T Evaluation)

NSX-T Installation and Configuration

The initial NSX-T configuration is quite complex. I'm not going deep into the configuration as there are various options to get the overlay up and running. The goal is to have a T-0 Gateway with external connectivity that Kubernetes can utilize. You should be able to configure the overlay, create a T-0 with an external interface, connect a T-1 to the T-0 using auto-plumbing, connect a segment to the T-1, create a virtual machine in that segment, and ping the Internet from that VM.

If you are completely new to NSX-T you can try the official vSphere with Kubernetes guide which also covers NSX-T configuration. Here is some basic information about my setup:

- 3 ESXi Hosts (Intel NUC with USB Network adapters)

- VDS7 Setup (No N-VDS)

- 2 VLANs: Management and Geneve Transport

- Management VLAN is for everything: vCenter, ESXi, NSX-Manager, External T-0 Interface, Kubernetes Components (Ingress, Egress, Supervisor Control Plane,...)

- Second VLAN is for Geneve Transport (ESXi+Edge VM)

- Same Transport VLAN for Edge and Compute Nodes

- Dedicated Network Port (Second VDS) for Edge VMs (Required as you can't have Edge and Compute nodes on the same network adapter, in the same VLAN. Either use separate transport VLANs (requires external routing!) or separate network adapters).

- No dedicated ESXi host for Edge Nodes

- A Large Edge VM (8vCPU / 32GB Memory)

- T-0 Gateway with external interface in management VLAN (With internet connectivity)

Prepare the vCenter for Kubernetes and Gather Information

Before you start with Kubernetes, make sure that vCenter and ESXi are properly configured. Use the following checklist to verify the configuration:

- ESXi Hosts are in a Cluster with HA and DRS (fully automated) enabled.

- NSX-T configured with one operational T-0 Gateway and overlay network.

- ESXi Hosts connected to a vSphere Distributed Switch Version 7.

- Storage Policies configured (vSAN, LUNs, or NFS are fine but you have to use Storage Policies for all components within Kubernetes).

- Internet access for all components (NAT is fine)

During configuration, you have to enter several parameters. It's good practice to have them ready before you start. All IP addresses, except Pod and Service Networks, can be in the same subnet. For testing, it's fine to use the management network for everything. You need the following information:

- Management Network Portgroup

- 5 consecutive IP addresses for Kubernetes control plane VMs

- Subnet Mask (Management Network)

- Gateway (Management Network)

- DNS & NTP Server

- 32 Egress IP addresses (Configured in CIDR (/27) notation. This can be in your management network to keep the setup simple.)

- 32 Ingress IP addresses (Configured in CIDR (/27) notation. This can be in your management network to keep the setup simple.)

- Pod CIDR range (Preconfigured - Stick with the default)

- Services CIDR range (Preconfigured - Stick with the default)

- Storage Policy

Enable Kubernetes / Troubleshooting Cluster Compatibility

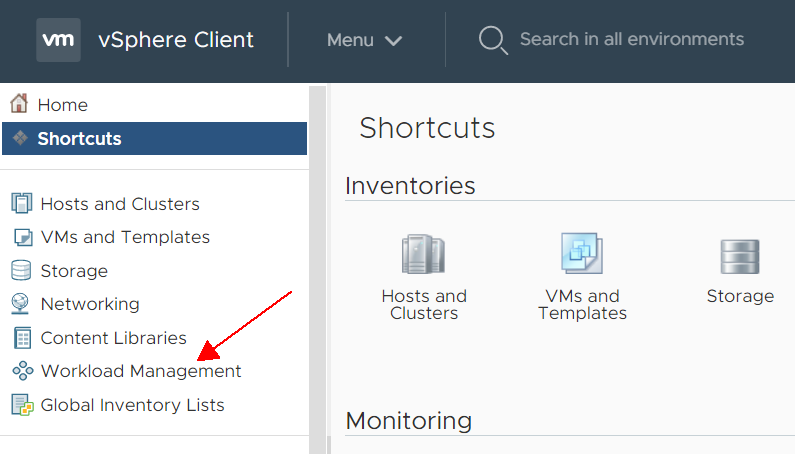

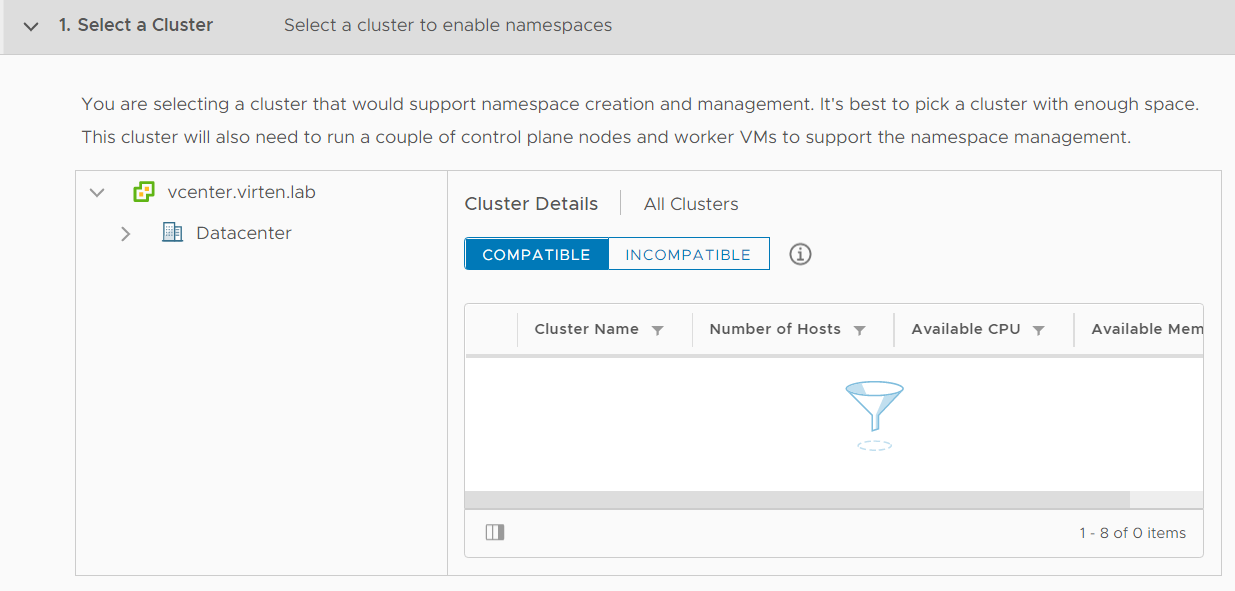

Once NSX-T is configured, the next step is to enable Kubernetes. You will not find anything called "Kubernetes" in the vSphere Client. Instead, you find the "Workload Management" or "Namespace Management" options. Open the vSphere Client, navigate to Workload Management, and click ENABLE.

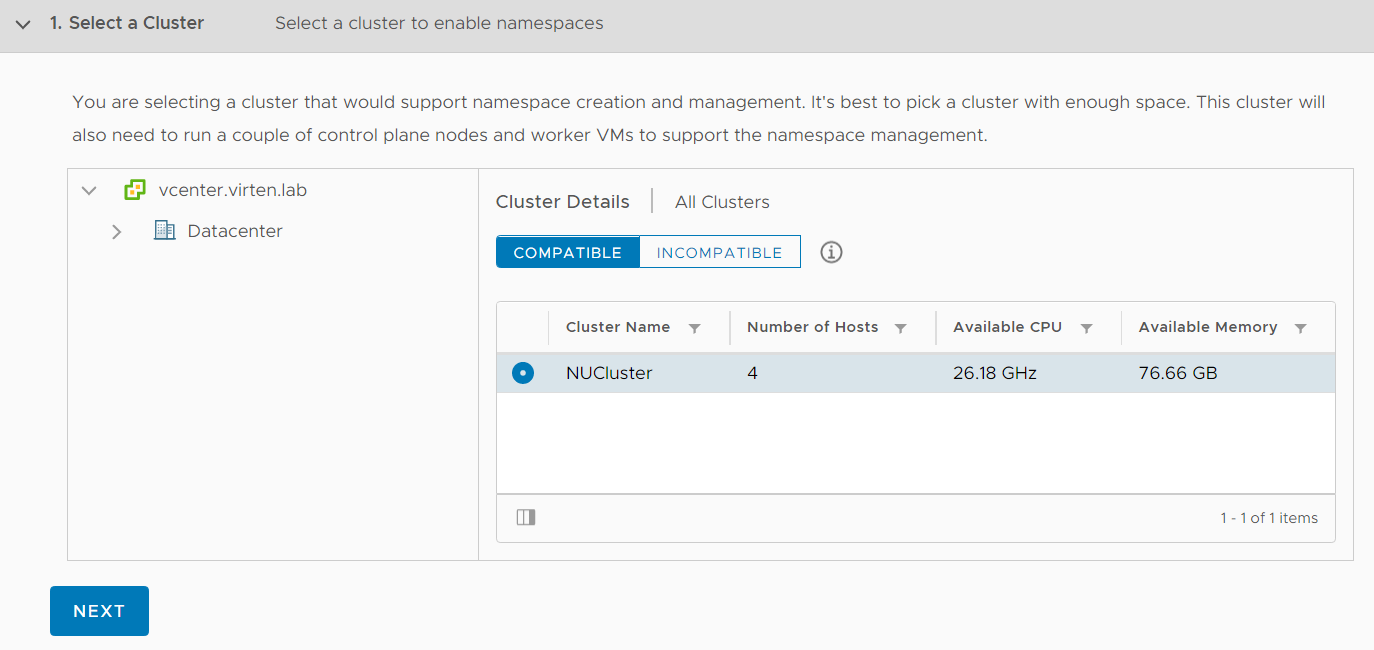

If you see compatible clusters, skip the troubleshooting part. If not, you just see the following screen showing you that something is wrong, but not why.

Troubleshooting

The following troubleshooting options are available:

- DCLI (Datacenter CLI), installed on the vCenter Server (Command Line)

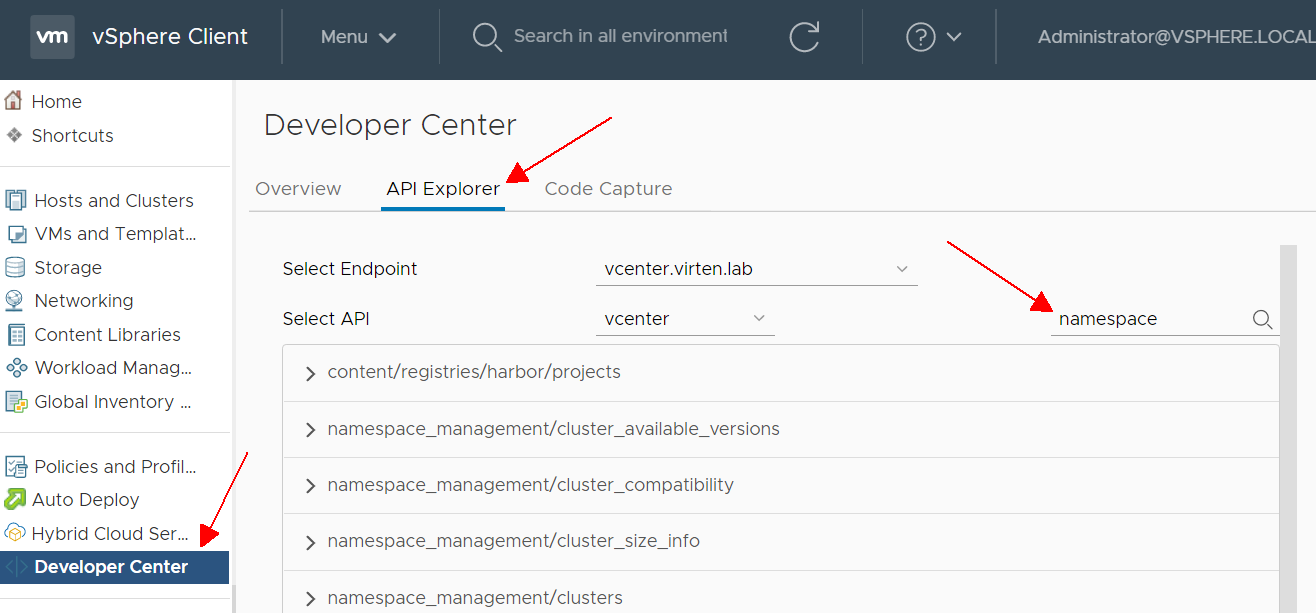

- API Explorer (vSphere Client > Developer Center > API Explorer > Filter > "namespace")

- Workload Management Log on the vCenter Server: /var/log/vmware/wcp/wcpsvc.log

- NSX-T Log on the vCenter Server: /var/log/vmware/wcp/nsxd.log

You can get the same information from DCLI and API Explorer. It's up to you if you want to work with a command line or the browser-based API Explorer.

The first step is to figure out why the cluster is incompatible.

DCLI

Connect to the vCenter Server using SSH and run the following command. It does not matter if you are in bash or the default appliancesh.

dcli com vmware vcenter namespacemanagement clustercompatibility list

The command lists all compatibility reasons for your cluster(s):

    Command> dcli com vmware vcenter namespacemanagement clustercompatibility list
    |------------|----------|---------------------------------------------------------------------------------------------------|
    |cluster     |compatible|incompatibility_reasons                                                                            |
    |------------|----------|---------------------------------------------------------------------------------------------------|
    |domain-c3143|False     |Cluster domain-c3143 does not have HA enabled.                                                     |
    |            |          |Cluster domain-c3143 must have DRS enabled and set to fully automated to enable vSphere namespaces.|
    |            |          |Cluster domain-c3143 is missing compatible NSX-T VDS.                                              |
    |domain-c46  |False     |Cluster domain-c46 has hosts that are not licensed for vSphere Namespaces.                         |
    |------------|----------|---------------------------------------------------------------------------------------------------|

You don't see cluster names in the output. If you have multiple clusters, use the following command to get the id to name mapping:

dcli com vmware vcenter cluster list
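When several clusters are listed, the compatibility table gets hard to scan. The following is a minimal sketch that flattens it into one `cluster<TAB>reason` line per problem. It assumes the three-column layout shown in the compatibility output above; the function name `incompat_reasons` is my own and not part of DCLI.

```shell
# Flatten a DCLI cluster-compatibility table (read from stdin) into
# "cluster<TAB>reason" lines.
# Assumption: columns are |cluster|compatible|incompatibility_reasons|
# and continuation rows leave the first two columns blank.
incompat_reasons() {
  awk -F'|' '
    /^\|-/ { next }                          # skip separator rows
    NF >= 4 {
      id = $2; reason = $4
      gsub(/^[ \t]+|[ \t]+$/, "", id)        # trim column padding
      gsub(/^[ \t]+|[ \t]+$/, "", reason)
      if (id == "cluster") next              # skip the header row
      if (id != "") current = id             # continuation rows reuse the last id
      if (reason != "") printf "%s\t%s\n", current, reason
    }'
}

# Usage on the vCenter Server (not executed here):
#   dcli com vmware vcenter namespacemanagement clustercompatibility list | incompat_reasons
```

The result can be piped through `grep domain-c3143` or similar to focus on a single cluster.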

API Explorer

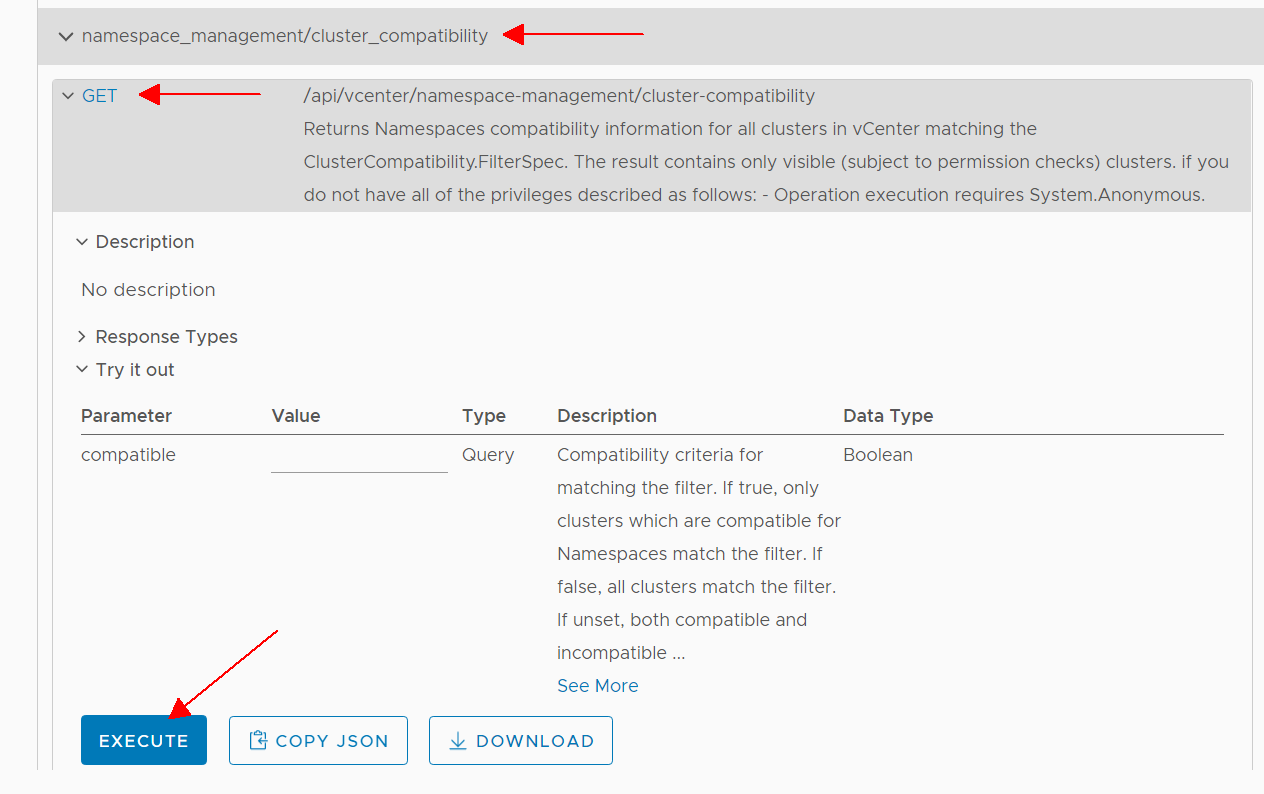

In the vSphere Client, navigate to Developer Center > API Explorer and search for namespace

Click on namespace_management/cluster_compatibility > GET > EXECUTE

Scroll down to see the response:


Fix Individual Cluster Incompatibility Reasons

Cluster domain-c1 does not have HA enabled.

Easy fix. Navigate to vSphere Client > Hosts and Clusters > [Clustername] > Configure > Services > vSphere Availability > Edit... and enable vSphere HA

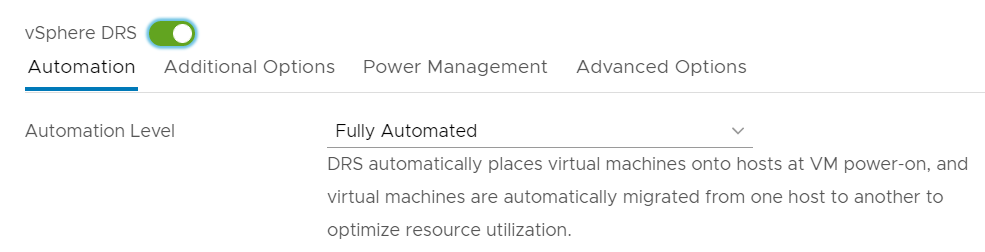

Cluster domain-c1 must have DRS enabled and set to fully automated to enable vSphere namespaces.

Easy fix. Navigate to vSphere Client > Hosts and Clusters > [Clustername] > Configure > Services > vSphere DRS > Edit... and enable vSphere DRS. Make sure to set the Automation Level to "Fully Automated".

Cluster domain-c1 is missing compatible NSX-T VDS.

This message can have various reasons.

- ESXi Hosts have no NSX-T configuration

- Missing VDS

- Missing T0 Gateway

- Multiple T0 deployed

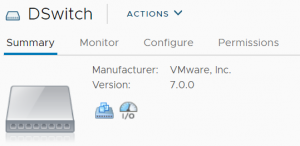

It is important to understand that the problem is usually not that the host is disconnected from a VDS. The message indicates a problem with the NSX-T configuration. First, verify that the VDS is version 7.0.

You can also try to get useful information by checking Distributed Switch Compatibility with DCLI:

Command> dcli com vmware vcenter namespacemanagement distributedswitchcompatibility list --cluster domain-c3143

Server error: com.vmware.vapi.std.errors.InternalServerError

Error message:

Invalid hosts: 031cafbb-b571-4d79-867f-6cfa442e398c.

Check /var/log/vmware/wcp/nsxd.log on the vCenter Server for errors:

    debug wcp [opID=5ef66a94] Transport node ID for host host-3141: 031cafbb-b571-4d79-867f-6cfa442e398c
    debug wcp [opID=5ef66a94] Finding VDS for hosts 031cafbb-b571-4d79-867f-6cfa442e398c
    debug wcp [opID=5ef66a94] Failed to find VDS for hosts 031cafbb-b571-4d79-867f-6cfa442e398c due to Invalid hosts: 031cafbb-b571-4d79-867f-6cfa442e398c
    error wcp [opID=5ef66a94] Failed to get list of VDS for cluster: domain-c3143. Err: Invalid hosts: 031cafbb-b571-4d79-867f-6cfa442e398c
    debug wcp [opID=5ef66a94] Transport node ID for host host-27: 2269c8be-ea0f-4931-9886-e68a1ab91799
    debug wcp [opID=5ef66a94] Transport node ID for host host-1094: ff3348b9-ddf9-4e7f-af4e-26732796f99c
    debug wcp [opID=5ef66a94] Transport node ID for host host-1010: c4239575-acd0-4312-9ca3-edce2585722e
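The log only reports NSX-T transport node IDs as invalid, while the vSphere Client shows host IDs. As a rough sketch, the two can be matched up from the "Transport node ID for host" debug lines. It assumes the message wording from the nsxd.log excerpt above and only picks up the first ID after "Invalid hosts:"; `invalid_hosts` is a made-up helper name.

```shell
# Print "host-id transport-node-id" for every transport node that
# nsxd.log (read from stdin) reports under "Invalid hosts:".
# Assumption: message wording matches the nsxd.log excerpt above.
invalid_hosts() {
  awk '
    /Transport node ID for host/ {
      for (i = 2; i < NF; i++)
        if ($(i-1) == "for" && $i == "host") {
          h = $(i+1); sub(/:$/, "", h)       # "host-3141:" -> "host-3141"
          host[$(i+2)] = h                   # key: transport node ID
        }
    }
    /Invalid hosts:/ {
      for (i = 2; i < NF; i++)
        if ($(i-1) == "Invalid" && $i == "hosts:") {
          id = $(i+1); sub(/[^0-9a-f-]+$/, "", id)   # drop trailing punctuation
          bad[id] = 1
        }
    }
    END {
      for (id in bad)
        printf "%s %s\n", ((id in host) ? host[id] : "unknown"), id
    }' | sort
}

# Usage on the vCenter Server (not executed here):
#   invalid_hosts < /var/log/vmware/wcp/nsxd.log
```

For the excerpt above this points to host-3141, which is the host to look for in NSX-T Manager.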

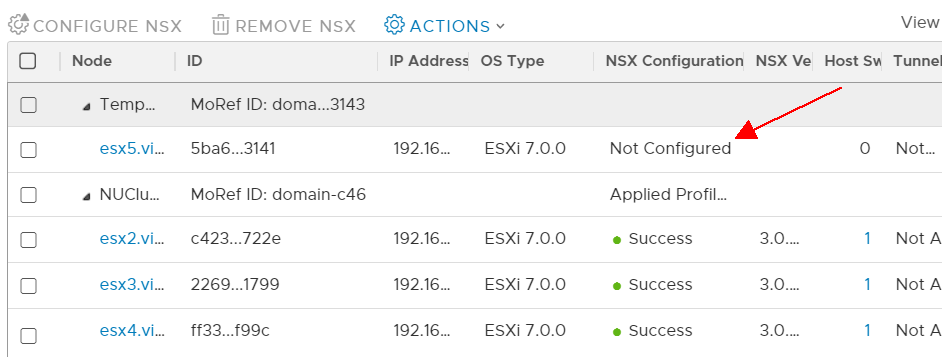

The "Invalid hosts" error points to a problem in NSX-T. Open NSX-T Manager and navigate to System > Configuration > Fabric > Nodes > Host Transport Nodes to verify that all hosts are configured without errors. In this example, one host is not configured with NSX-T.

When I once tried to enable Workload Management on a platform that I had migrated from N-VDS to VDS7 in a very harsh way, one host was also in an invalid state. If that happens, rebooting, removing, or reconfiguring the host might help.

If the problem is still active, check /var/log/vmware/wcp/wcpsvc.log for errors. If you have no T0 gateway for example, the following message is displayed:

debug wcp [opID=5ef66d44] Found VDS [{ID:50 17 46 79 02 f5 f9 cf-ff 1a f9 db b5 50 82 84 Name:DSwitch EdgeClusters:[{ID:adac224c-0e73-40d5-b1ac-bb70540f94d3 Name:edge-cluster1 TransportZoneID:1b3a2f36-bfd1-443e-a0f6-4de01abc963e Tier0s:[] Validated:false Error:Edge cluster adac224c-0e73-40d5-b1ac-bb70540f94d3 has no tier0 gateway}] Validated:false Error:No valid edge cluster for VDS 50 17 46 79 02 f5 f9 cf-ff 1a f9 db b5 50 82 84}] for hosts 2269c8be-ea0f-4931-9886-e68a1ab91799, fb1575d6-0c5c-4721-b5be-15b89fbe5606, ff3348b9-ddf9-4e7f-af4e-26732796f99c, c4239575-acd0-4312-9ca3-edce2585722e

It will also fail when you have more than one T0:

debug wcp [opID=5ef66d71] Found VDS [{ID:50 17 46 79 02 f5 f9 cf-ff 1a f9 db b5 50 82 84 Name:DSwitch EdgeClusters:[{ID:adac224c-0e73-40d5-b1ac-bb70540f94d3 Name:edge-cluster1 TransportZoneID:1b3a2f36-bfd1-443e-a0f6-4de01abc963e Tier0s:[tier0-k8s tier0-2] Validated:false Error:Edge cluster adac224c-0e73-40d5-b1ac-bb70540f94d3 has more than one tier0 gateway: tier0-k8s, tier0-prod}] Validated:false Error:No valid edge cluster for VDS 50 17 46 79 02 f5 f9 cf-ff 1a f9 db b5 50 82 84}] for hosts 2269c8be-ea0f-4931-9886-e68a1ab91799, fb1575d6-0c5c-4721-b5be-15b89fbe5606, ff3348b9-ddf9-4e7f-af4e-26732796f99c, c4239575-acd0-4312-9ca3-edce2585722e
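These "Found VDS" lines are long, and the actual reason is buried in the middle. A small sketch to pull out just the edge cluster errors, assuming the `Error:Edge cluster ...}` format from the two excerpts above (`edge_cluster_errors` is a hypothetical helper name):

```shell
# Print the distinct edge cluster validation errors found in
# wcpsvc.log content read from stdin.
# Assumption: errors look like "Error:Edge cluster <id> ...}" as in
# the excerpts above; the text ends at the closing brace.
edge_cluster_errors() {
  grep -o 'Error:Edge cluster [^}]*' | sed 's/^Error://' | sort -u
}

# Usage on the vCenter Server (not executed here):
#   edge_cluster_errors < /var/log/vmware/wcp/wcpsvc.log
```

An empty result does not prove that the edge cluster is fine. It only means that no line in this format was logged.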

Cluster domain-c1 has hosts that are not licensed for vSphere Namespaces.

This message states that you do not have an ESXi license with the "Namespace Management" feature. When you don't have VCF or the Add-on license you have to set your ESXi hosts back to "evaluation mode", which is possible up to 60 days after installation. That also applies when you have the Enterprise Plus license, which is widely known to be a fully-featured license.

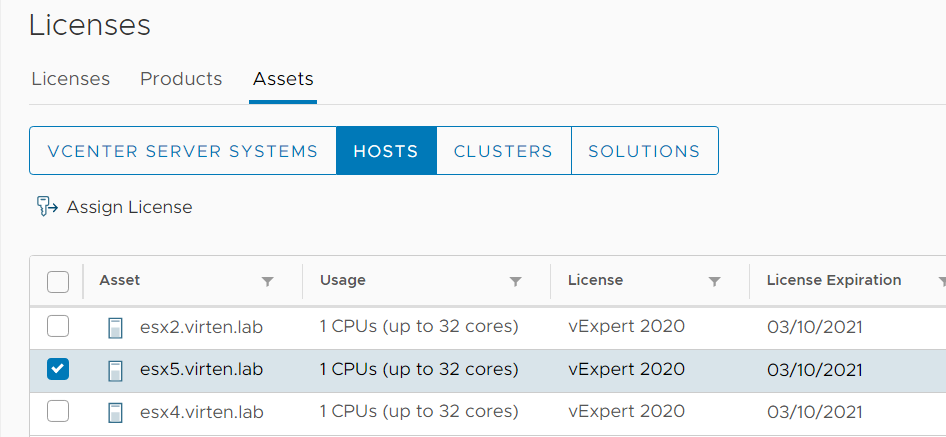

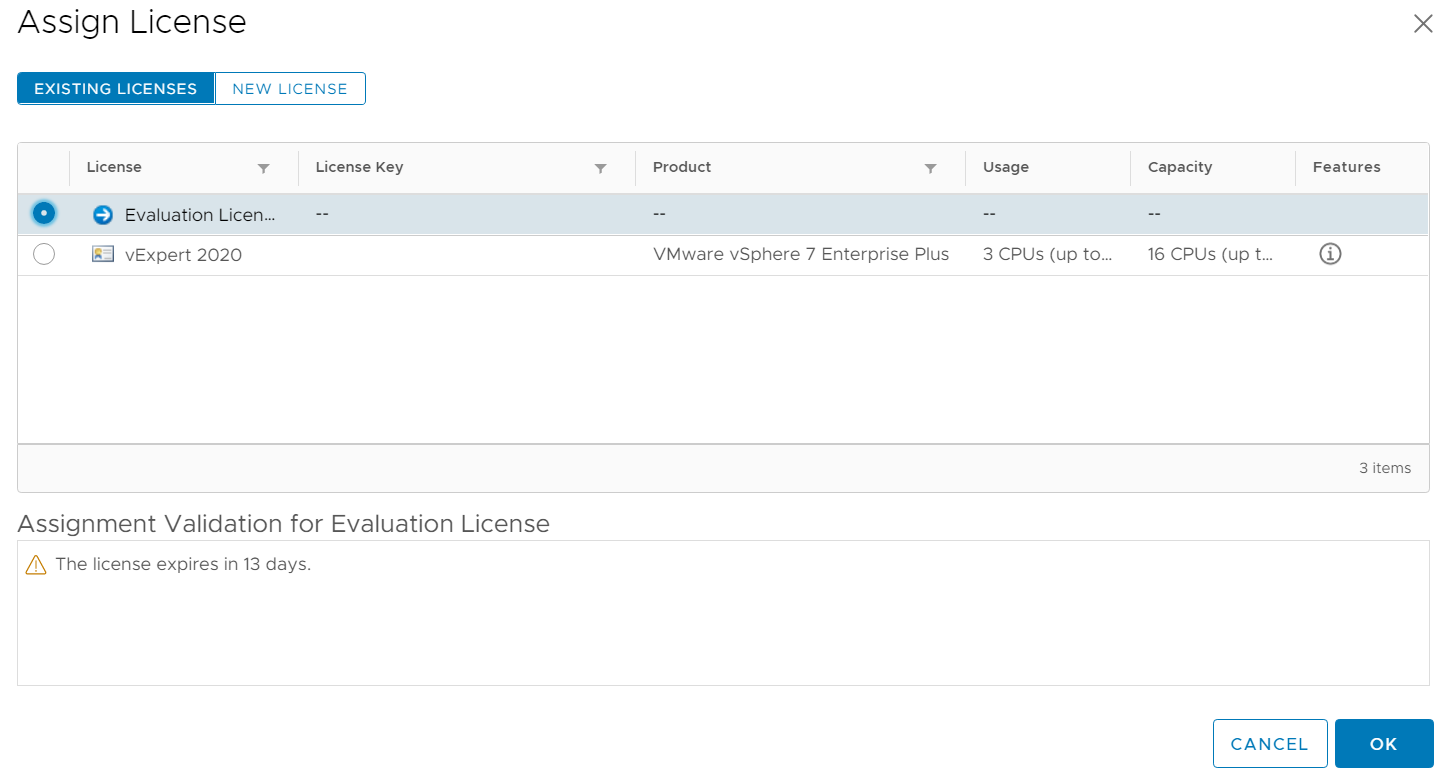

Open vSphere Client and navigate to Administration > Licensing > Licenses > Assets > Hosts, select your ESXi Hosts, click "Assign License" and set it back to Evaluation Mode.

When you are within the 60 days, you see the remaining days:

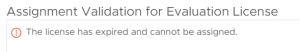

When the installation is older than 60 days, you can't assign the license and the following message is displayed: "The license has expired and cannot be assigned."

If the license is expired, you have to reinstall, reset the ESXi host, or reset the evaluation license.

Additional note: You can reactivate the license when Workload Management has been enabled. This behavior might change in the future, but as of now, the license is only required for activation.

Enable Workload Management / Kubernetes

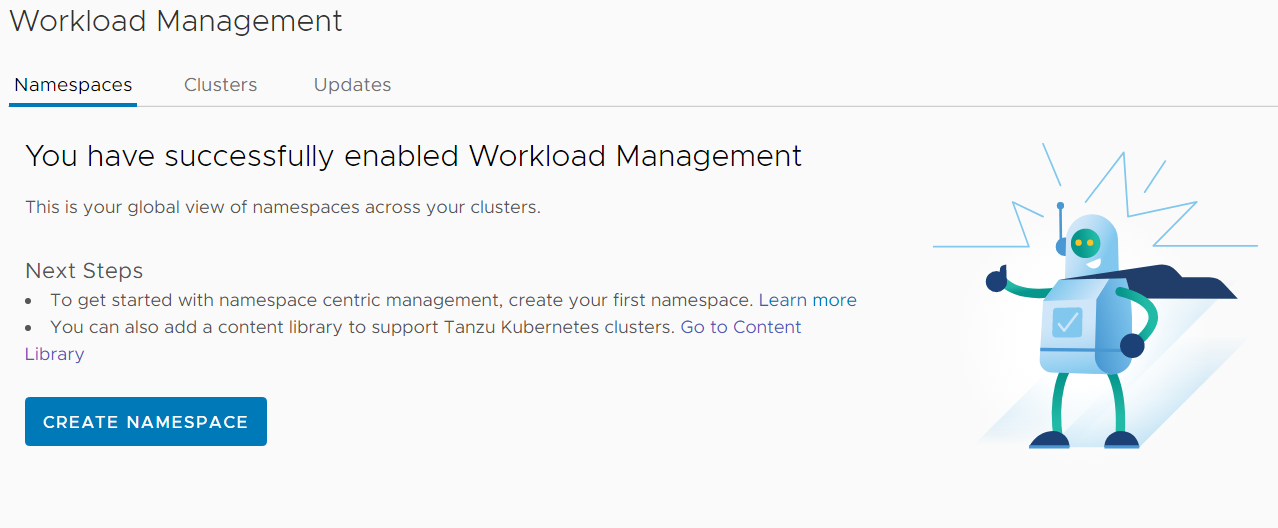

In the vSphere Client, navigate to Workload Management and click ENABLE. Select your compatible cluster and click NEXT.

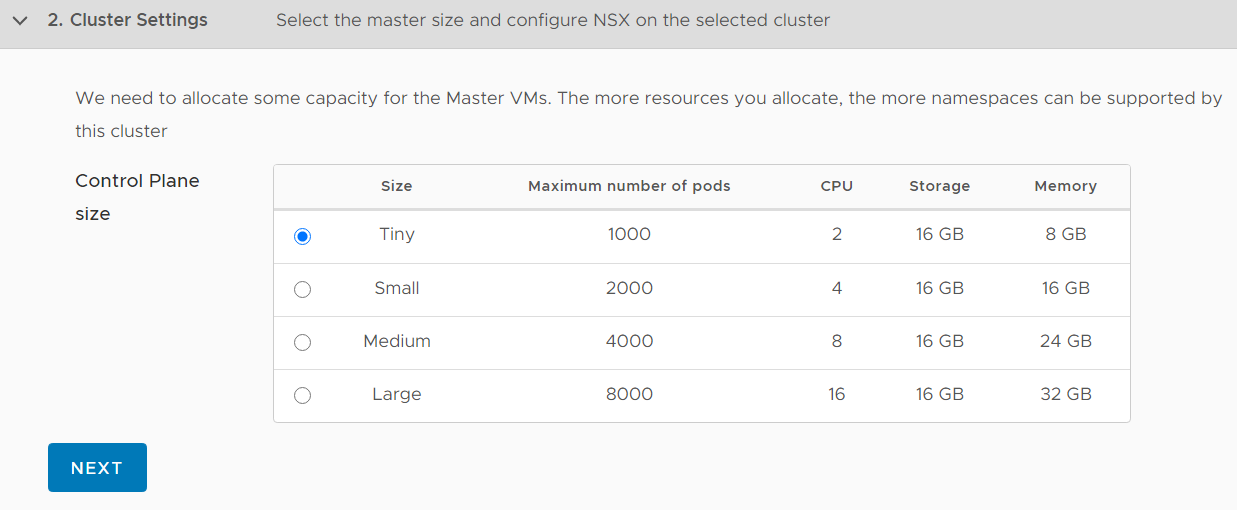

I'm deploying a Tiny Control Plane Cluster which is sufficient for 1000 pods.

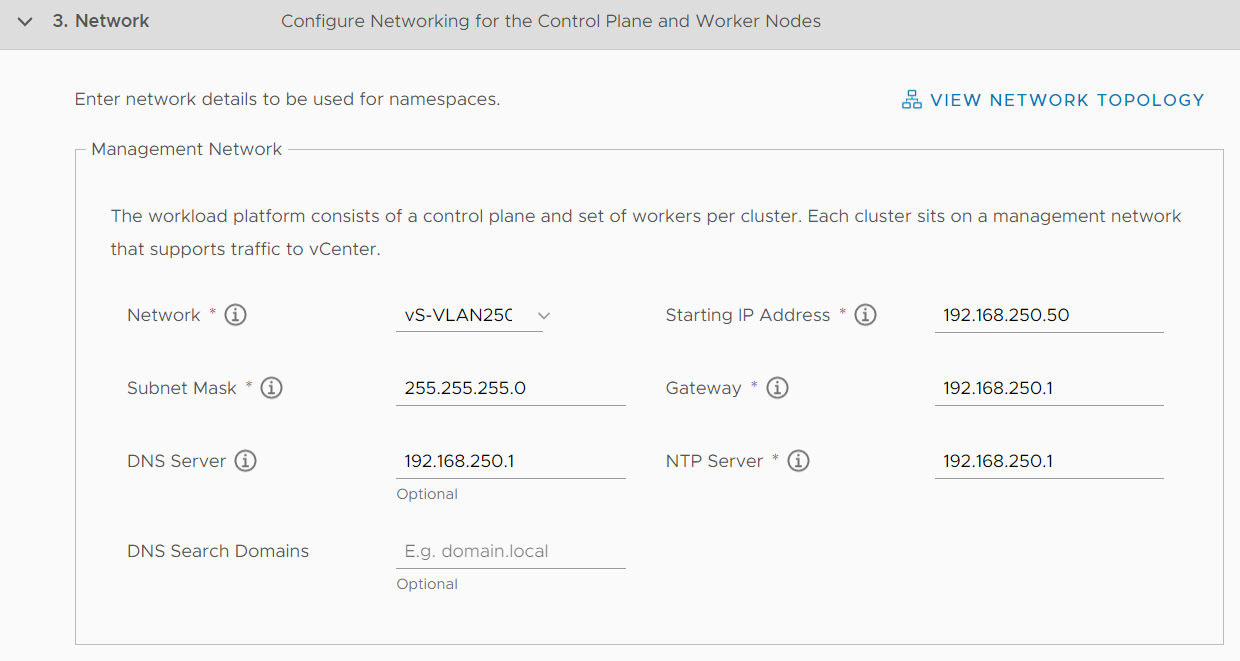

Configure the Management Network. I'm using my default Management VLAN.

Network: Choose a Portgroup or Distributed Portgroup

Starting IP Address: 192.168.250.50 (It will use 5 consecutive addresses, in this case 192.168.250.50-192.168.250.54. These addresses are used for the Control Plane: 3x control plane VM, 1x VIP, and an additional IP for rolling updates)

Subnet Mask: 255.255.255.0

Gateway: 192.168.250.1

DNS Server: 192.168.250.1

NTP Server: 192.168.250.1
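Since the wizard only asks for the starting address, it is easy to overlook which other addresses get reserved. A quick sketch to list the five consecutive addresses before you start, so you can check them against DHCP ranges or existing hosts. `control_plane_ips` is a made-up helper, and it deliberately refuses ranges that would run past the last octet.

```shell
# Print the five consecutive control plane addresses for a starting IP.
# Sketch only: refuses ranges that would run past .254 in the last octet.
control_plane_ips() {
  prefix=${1%.*}      # first three octets, e.g. 192.168.250
  last=${1##*.}       # last octet, e.g. 50
  if [ $((last + 4)) -gt 254 ]; then
    echo "range does not fit into the last octet" >&2
    return 1
  fi
  i=0
  while [ "$i" -lt 5 ]; do
    echo "$prefix.$((last + i))"
    i=$((i + 1))
  done
}

# Example: control_plane_ips 192.168.250.50
```

With the starting address from this setup, the list runs from 192.168.250.50 to 192.168.250.54.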

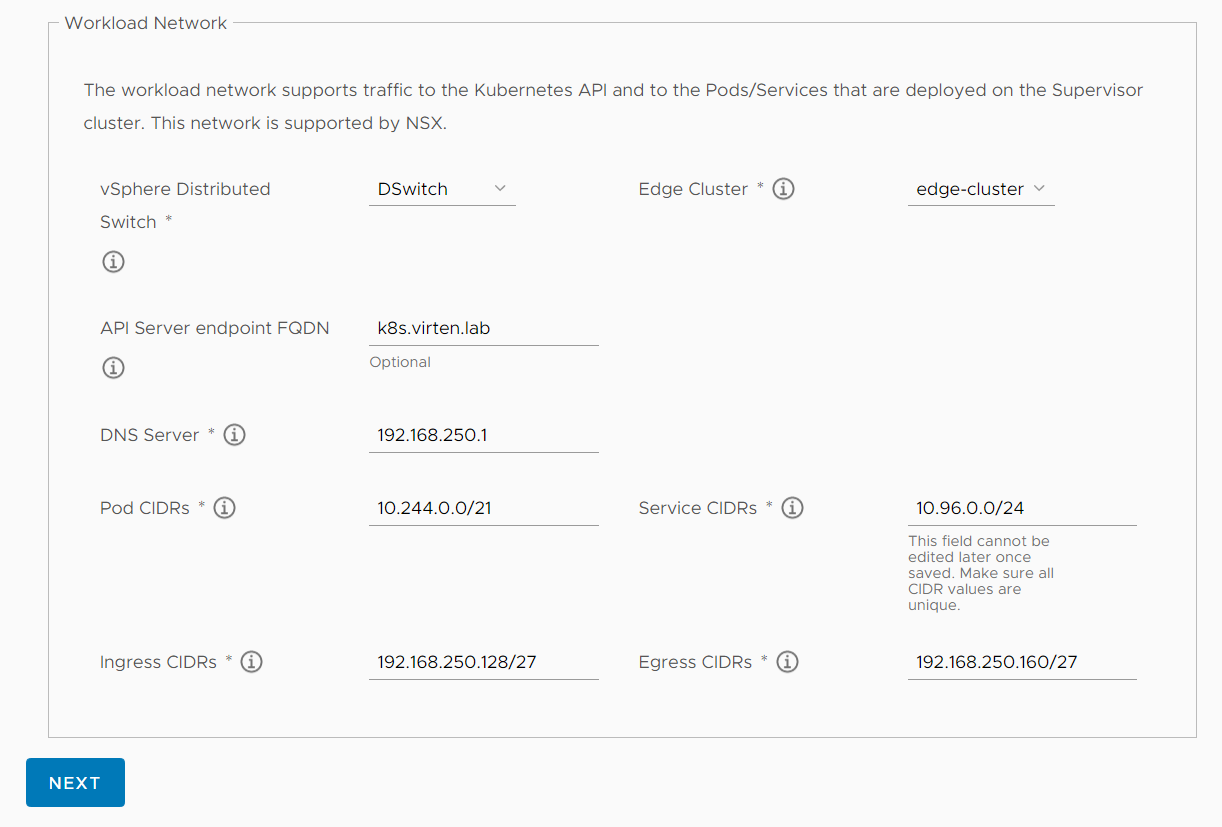

Configure the Workload Network

vSphere Distributed Switch: VDS used for NSX-T

Edge Cluster: NSX-T Edge Cluster

API Server endpoint FQDN: If you set a name, it will be added to the certificate used to access the Supervisor Cluster.

DNS Server: 192.168.250.1 (Very important for Pods to access the Internet)

Pod CIDRs: 10.244.0.0/21 (Default Value)

Service CIDRs: 10.96.0.0/24 (Default Value)

Ingress CIDRs: 192.168.250.128/27 (Used to make services available outside Kubernetes)

Egress CIDRs: 192.168.250.160/27 (NAT Pool for outgoing Pod communication)
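When Ingress and Egress share the management subnet, it helps to see exactly which addresses each CIDR block covers. The helpers below are a sketch with hypothetical names (`ip_to_int`, `int_to_ip`, `cidr_range`) and use only shell arithmetic, so they run anywhere without extra tools.

```shell
# Convert a dotted IPv4 address to an integer.
ip_to_int() {
  old_ifs=$IFS; IFS=.
  set -- $1                      # split the address into four octets
  IFS=$old_ifs
  echo $(( ($1 << 24) + ($2 << 16) + ($3 << 8) + $4 ))
}

# Convert an integer back to dotted notation.
int_to_ip() {
  echo "$(( ($1 >> 24) & 255 )).$(( ($1 >> 16) & 255 )).$(( ($1 >> 8) & 255 )).$(( $1 & 255 ))"
}

# Print "first last count" for a CIDR block such as 192.168.250.128/27.
cidr_range() {
  size=$(( 1 << (32 - ${1#*/}) ))
  base=$(ip_to_int "${1%/*}")
  first=$(( base - base % size ))          # align to the network boundary
  echo "$(int_to_ip "$first") $(int_to_ip $(( first + size - 1 ))) $size"
}

# Example: cidr_range 192.168.250.128/27
```

For the values used here, Ingress covers 192.168.250.128-159 and Egress covers 192.168.250.160-191, so neither block collides with the control plane addresses 192.168.250.50-54.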

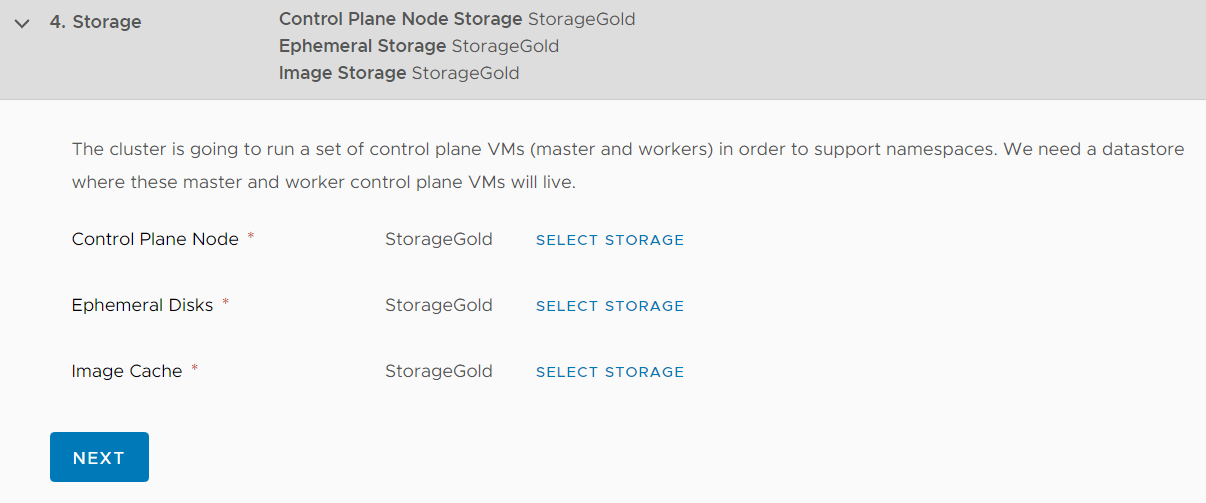

Select Storage Policies for Control Plane Nodes, Ephemeral Disks, and Image Cache.

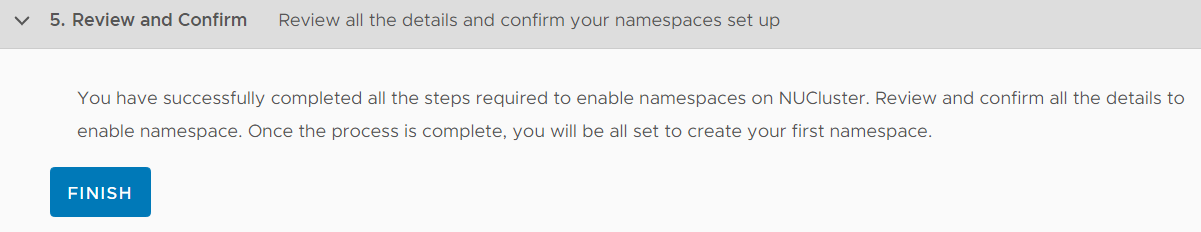

Review your configuration and click FINISH to start the deployment

From here, you don't see anything for 30-60 minutes. Just be patient while the deployment happens in the background. If you want to see what happens, log in to the vCenter Server using SSH and follow the wcpsvc.log:

# tail -f /var/log/vmware/wcp/wcpsvc.log
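The log is very chatty at debug level. If you only care about failures, a filter like the following sketch can help. It assumes that error lines contain the level as "error wcp", as in the log excerpts in the troubleshooting section; `wcp_errors` is a made-up name.

```shell
# Pass through only error-level lines from wcpsvc.log content on stdin.
# Assumption: the level appears as "error wcp", as in the excerpts in
# the troubleshooting section. fflush() keeps output live behind tail -f.
wcp_errors() {
  awk '/error wcp / { print; fflush() }'
}

# Usage on the vCenter Server (not executed here):
#   tail -f /var/log/vmware/wcp/wcpsvc.log | wcp_errors
```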

A problem I ran into during my first deployments was that my Edge VM was too small. Log in to the NSX-T Manager and check if there are any errors, especially in Networking > Tier-1 Gateway and Networking > Load Balancers. When the Edge VM is too small, Load Balancer Services will fail to deploy. If you run into that issue, just gracefully shut down the Edge VM, resize the Virtual Machine (vCenter > Edit Virtual Machine) and reboot it. The installer should catch up and finish.


Don't you also need the vSphere Kubernetes add-on license?

You don't need the add-on license when running in evaluation mode.

For production, VCF is the only (supported) option at the moment.

thanks

Hi, and happy new year!

I have successfully deployed the supervisor cluster on my home lab. The only issue I see is that the 3 Control Plane VMs have ~25% (1 core) at full load all the time.

It's not a big issue, but power consumption is very high for my lab (770W, normally it is at 550W). I don't know if that's normal or if a process is misbehaving.

I'll switch to the tiny deployment, maybe it consumes less CPU.

Hello Florian,

I have a question. I saw that you used the Ingress CIDRs 192.168.250.128/27 for the workload network.

I saw that this segment is on the Management Network too. Do you know if that is the only way to route the Control Plane with VCD? I tried to use a private segment but I can't reach Tanzu from VCD.

Regards