A major problem when deploying "vSphere with Tanzu" Clusters in VMware Cloud Director 10.2 is that the defaults for TKG Clusters are overlapping with the defaults for the Supervisor Cluster configured in vCenter Server during the Workload Management enablement.

When you deploy a Kubernetes Cluster using the new Container Extension in VCD 10.2, the cluster is deployed in a namespace on top of the Supervisor Cluster in vCenter Server. The Supervisor Cluster's IP address ranges for the Ingress CIDRs and the Services CIDR must not overlap with 10.96.0.0/12 and 192.168.0.0/16, which are the defaults for TKG Clusters. Unfortunately, 10.96.0.0 is also the default when enabling Workload Management, so the deployment fails if you stick to the defaults. The following error messages are displayed when the networks overlap:

spec.settings.network.pods.cidrBlocks intersects with the network range of the external ip pools in network provider's configuration

spec.settings.network.pods.cidrBlocks intersects with the network range of the external ip pools LB in network provider's configuration
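If you want to find out whether an existing Supervisor Cluster will run into this error, you can compare its ranges with the TKG defaults. The following is a minimal Python sketch using the standard `ipaddress` module. The function name and the Supervisor ranges in the example are hypothetical placeholders; replace them with the Ingress and Services CIDRs configured in your Workload Management.

```python
import ipaddress

# TKG default Pod and Service ranges, as stated in the text above.
TKG_DEFAULTS = ["10.96.0.0/12", "192.168.0.0/16"]


def overlapping_defaults(supervisor_cidrs):
    """Return (supervisor_cidr, tkg_default) pairs whose address ranges overlap."""
    hits = []
    for sup in supervisor_cidrs:
        sup_net = ipaddress.ip_network(sup)
        for default in TKG_DEFAULTS:
            if sup_net.overlaps(ipaddress.ip_network(default)):
                hits.append((sup, default))
    return hits


if __name__ == "__main__":
    # Hypothetical Supervisor Services and Ingress CIDRs.
    supervisor = ["10.96.0.0/24", "172.16.50.0/24"]
    for sup, default in overlapping_defaults(supervisor):
        # prints: 10.96.0.0/24 overlaps TKG default 10.96.0.0/12
        print(f"{sup} overlaps TKG default {default}")
```

An empty result means the TKG defaults can be used as-is; any hit means you need custom Pod and Service CIDRs as described in this article.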

This article explains a workaround that you can apply when deleting and reconfiguring the Namespace Management with non-overlapping addresses is not an option.

A few words before we start. I highly recommend designing the Supervisor Cluster's network so that it does not collide with the TKG defaults. The method described here uses the VCD Cloud API to deploy a TKG Cluster on top of VCD. The API endpoint is not publicly documented, so use it at your own risk. I can't guarantee that the API and TKG Clusters will remain compatible in future versions.

At this point, you should have VCD 10.2 configured with a Kubernetes-enabled Provider VDC and the policy published to a tenant's Organization VDC. If you have problems with the configuration, refer to my Configuration Guide and Troubleshooting articles. You can configure everything up to this point even with overlapping networks, as there is no pre-check that prevents it. The error message mentioned above only appears when the tenant tries to deploy a TKG Cluster.

TKG Clusters can be configured not only in the GUI but also through an endpoint in the Cloud API. Compared to the GUI, the API endpoint offers additional options, including settings for the Pod and Service CIDRs. This allows the customer to deploy TKG Clusters with non-overlapping networks. The API endpoint is currently not publicly documented:

```
POST /cloudapi/1.0.0/tkgClusters
```

Headers:

```
Content-Type: application/json
Accept: */*;version=35.0
x-vcloud-authorization: [TOKEN]
X-VMWARE-VCLOUD-TENANT-CONTEXT: [Organization ID]
```

Body:

The `<--` annotations explain each field and are not part of the JSON. Remove them before sending the request.

```
{
  "kind": "TanzuKubernetesCluster",
  "spec": {
    "settings": {
      "network": {
        "pods": {
          "cidrBlocks": [
            "x.x.x.x/x"                    <-- CIDR for Pod Network
          ]
        },
        "services": {
          "cidrBlocks": [
            "x.x.x.x/x"                    <-- CIDR for Service Network
          ]
        }
      }
    },
    "topology": {
      "workers": {
        "class": "best-effort-xsmall",   <-- Worker VM Size
        "count": 2,                      <-- Worker VM Count
        "storageClass": "gold"           <-- Worker VM Storage Class
      },
      "controlPlane": {
        "class": "best-effort-xsmall",   <-- Control Plane VM Size
        "count": 1,                      <-- Control Plane VM Count
        "storageClass": "gold"           <-- Control Plane VM Storage Class
      }
    },
    "distribution": {
      "version": "1.17.8"                <-- Kubernetes Version
    }
  },
  "metadata": {
    "name": "k8s",                       <-- DNS compliant Name for the TKG Cluster
    "placementPolicy": "policy",         <-- Tenant facing Policy Name
    "virtualDataCenterName": "OVDC"      <-- Organization VDC
  }
}
```

CIDR for Pod Network

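Specifies a range of IP addresses to use for Kubernetes pods. Must not overlap with the settings chosen for the Supervisor Cluster; the error messages shown above refer to this field.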

CIDR for Service Network

Specifies a range of IP addresses to use for Kubernetes services. Must not overlap with the settings chosen for the Supervisor Cluster.
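Because both ranges must stay clear of the Supervisor Cluster's networks, you can check a payload before sending it. Below is a minimal Python sketch, assuming you know the Supervisor's Ingress and Services CIDRs (the function name and all values are hypothetical). The additional check that the Pod and Service ranges do not overlap each other is an assumption based on general Kubernetes practice, not something required by the text above.

```python
import ipaddress


def check_cluster_cidrs(pod_cidrs, service_cidrs, supervisor_cidrs):
    """Return a list of conflicts as strings; an empty list means no overlap was found."""
    pods = [ipaddress.ip_network(c) for c in pod_cidrs]
    services = [ipaddress.ip_network(c) for c in service_cidrs]
    supervisor = [ipaddress.ip_network(c) for c in supervisor_cidrs]
    problems = []
    # Compare every Pod and Service range with every Supervisor range.
    for label, nets in (("pods", pods), ("services", services)):
        for net in nets:
            for sup in supervisor:
                if net.overlaps(sup):
                    problems.append(f"{label} {net} overlaps Supervisor range {sup}")
    # Assumption: Pod and Service ranges should also be disjoint from each other.
    for p in pods:
        for s in services:
            if p.overlaps(s):
                problems.append(f"pods {p} overlaps services {s}")
    return problems
```

For example, `check_cluster_cidrs(["10.50.0.0/16"], ["10.51.0.0/16"], ["10.96.0.0/24"])` returns an empty list, while keeping the TKG default Service CIDR 10.96.0.0/12 against that Supervisor range reports a conflict.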

Control Plane/Worker VM Size

Specifies the name of the VirtualMachineClass that describes the virtual hardware settings to be used for each node in the pool. The following options are available. Please keep in mind that the Provider can limit the choice for the customer.

| Class | CPU | Memory | Storage |
|---|---|---|---|
| guaranteed-8xlarge | 32 | 128 GB | 16 GB |
| best-effort-8xlarge | 32 | 128 GB | 16 GB |
| guaranteed-4xlarge | 16 | 128 GB | 16 GB |
| best-effort-4xlarge | 16 | 128 GB | 16 GB |
| guaranteed-2xlarge | 8 | 64 GB | 16 GB |
| best-effort-2xlarge | 8 | 64 GB | 16 GB |
| guaranteed-xlarge | 4 | 32 GB | 16 GB |
| best-effort-xlarge | 4 | 32 GB | 16 GB |
| guaranteed-large | 4 | 16 GB | 16 GB |
| best-effort-large | 4 | 16 GB | 16 GB |
| guaranteed-medium | 2 | 8 GB | 16 GB |
| best-effort-medium | 2 | 8 GB | 16 GB |
| guaranteed-small | 2 | 4 GB | 16 GB |
| best-effort-small | 2 | 4 GB | 16 GB |
| guaranteed-xsmall | 2 | 2 GB | 16 GB |
| best-effort-xsmall | 2 | 2 GB | 16 GB |
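To estimate what a cluster will consume before deploying it, the table can be encoded as data and summed over the topology section of the request body. This is a rough Python sketch with hypothetical names. It only uses the nominal sizes from the table and does not model the difference between guaranteed and best-effort reservations.

```python
# Nominal sizes from the table above: size suffix -> (vCPU, memory in GB).
SIZES = {
    "8xlarge": (32, 128),
    "4xlarge": (16, 128),
    "2xlarge": (8, 64),
    "xlarge": (4, 32),
    "large": (4, 16),
    "medium": (2, 8),
    "small": (2, 4),
    "xsmall": (2, 2),
}
DISK_GB = 16  # every class in the table has 16 GB storage

# Class name -> (vCPU, memory GB, disk GB) for both guaranteed and best-effort.
VM_CLASSES = {
    f"{prefix}-{size}": (cpu, mem, DISK_GB)
    for prefix in ("guaranteed", "best-effort")
    for size, (cpu, mem) in SIZES.items()
}


def topology_footprint(topology):
    """Sum vCPU, memory (GB) and disk (GB) over the controlPlane and workers pools."""
    cpu = mem = disk = 0
    for pool in ("controlPlane", "workers"):
        spec = topology[pool]
        c, m, d = VM_CLASSES[spec["class"]]
        n = spec["count"]
        cpu += c * n
        mem += m * n
        disk += d * n
    return cpu, mem, disk
```

With the topology from the template (one control plane and two workers, all best-effort-xsmall), `topology_footprint` returns `(6, 6, 48)`: 6 vCPUs, 6 GB memory and 48 GB disk.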

Control Plane VM Count

Specifies the number of control plane nodes. Must be either 1 or 3.

Worker VM Count

Specifies the number of worker nodes in the cluster. There is no hard limit for the number of worker nodes, but the suggested limit is 150.
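The two count rules can be checked client-side before the request is sent. A minimal Python sketch with a hypothetical function name: the control plane rule (1 or 3) and the suggested limit of 150 workers come from the text, while treating fewer than one worker as an error is an assumption of this sketch.

```python
SUGGESTED_MAX_WORKERS = 150  # suggested limit from the text, not a hard limit


def validate_counts(control_plane_count, worker_count):
    """Return (errors, warnings) for the node counts of a TKG cluster request."""
    errors, warnings = [], []
    if control_plane_count not in (1, 3):
        errors.append("controlPlane.count must be 1 or 3")
    if worker_count < 1:
        # Assumption: a cluster without worker nodes is treated as invalid here.
        errors.append("workers.count should be at least 1")
    elif worker_count > SUGGESTED_MAX_WORKERS:
        warnings.append(
            f"workers.count {worker_count} exceeds the suggested limit of {SUGGESTED_MAX_WORKERS}"
        )
    return errors, warnings
```

Exceeding 150 workers only produces a warning, mirroring the text's distinction between a hard limit and a suggestion.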

Control Plane/Worker VM Storage Class

Storage policy name for the Control Plane and Worker VMs. The name must be all-lowercase and use hyphens (-) instead of spaces. Do not use the name as displayed in the policy or in vCenter Server; use the name as it is displayed in Kubernetes. Kubernetes automatically converts the storage class name to lowercase and replaces spaces with hyphens. You can check the name with kubectl:

```
# kubectl get storageclasses
storagegold
vsan-default
vsan-singlenode
```
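As a quick approximation, the conversion rule described above can be written as a one-line Python function (the name is hypothetical). It implements only the two stated rules, lowercase and spaces to hyphens; if Kubernetes applies further transformations they are not covered, so the output of kubectl remains the authoritative source.

```python
def to_storage_class_name(policy_name):
    """Approximate the Kubernetes storage class name for a vCenter storage policy.

    Implements only the rule described in the text: make the name lowercase
    and replace spaces with hyphens. Confirm with `kubectl get storageclasses`.
    """
    return policy_name.lower().replace(" ", "-")
```

For example, a policy named "Storage Gold" would map to `storage-gold`, while "StorageGold" maps to `storagegold`.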

Kubernetes Version

Specifies the software version of the Kubernetes distribution to install on the cluster. Available versions in Cloud Director 10.2 are 1.16.8, 1.16.12, 1.17.7, and 1.17.8. You can also use other versions when they are available in the Content Library.

DNS compliant Name for the TKG Cluster

Specifies the name of the cluster to create. Current cluster naming constraints:

- Name length must be 41 characters or less.

- Name must begin with a letter.

- Name may contain lowercase letters, numbers, and hyphens.

- Name must end with a letter or a number.
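The naming constraints listed above translate into a length check plus a regular expression. A minimal Python sketch (function and constant names are hypothetical); since only lowercase letters are allowed, "begin with a letter" is interpreted as a lowercase letter.

```python
import re

MAX_NAME_LENGTH = 41
# Starts with a lowercase letter; may contain lowercase letters, digits and
# hyphens; ends with a letter or digit. A single letter is also valid.
_NAME_RE = re.compile(r"[a-z](?:[a-z0-9-]*[a-z0-9])?")


def is_valid_cluster_name(name):
    """Check a TKG cluster name against the constraints listed above."""
    return len(name) <= MAX_NAME_LENGTH and _NAME_RE.fullmatch(name) is not None
```

With these rules, `demo` and `k8s` pass, while `8ks`, `demo-` and `Demo` are rejected.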

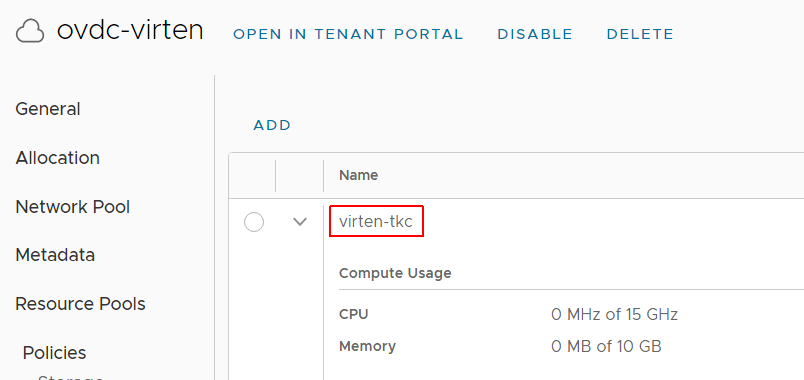

Tenant facing Policy Name

The Policy Name as it is displayed in the Organization VDC.

Organization VDC

The name of the Organization VDC.

Header x-vcloud-authorization

The token received from POST /api/sessions. It is returned in the x-vcloud-authorization response header.
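The login can also be scripted. This is a minimal Python sketch using only the standard library; it assumes the usual VCD login format `user@org` with HTTP Basic authentication and an `Accept` header of `application/*+xml;version=35.0`. The host and credentials are placeholders, and a lab with self-signed certificates would additionally need an SSL context passed to `urlopen`.

```python
import base64
import urllib.request

API_VERSION = "35.0"


def build_login_request(host, user, org, password):
    """Build (but do not send) a POST /api/sessions login request."""
    credentials = f"{user}@{org}:{password}".encode("utf-8")
    return urllib.request.Request(
        f"https://{host}/api/sessions",
        headers={
            "Accept": f"application/*+xml;version={API_VERSION}",
            "Authorization": "Basic " + base64.b64encode(credentials).decode("ascii"),
        },
        method="POST",
    )


def login(host, user, org, password):
    """Send the login request and return the x-vcloud-authorization token."""
    with urllib.request.urlopen(build_login_request(host, user, org, password)) as resp:
        # HTTP header lookups on the response are case-insensitive.
        return resp.headers["x-vcloud-authorization"]
```

Splitting request construction from sending keeps the login easy to inspect before you point it at a real VCD instance.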

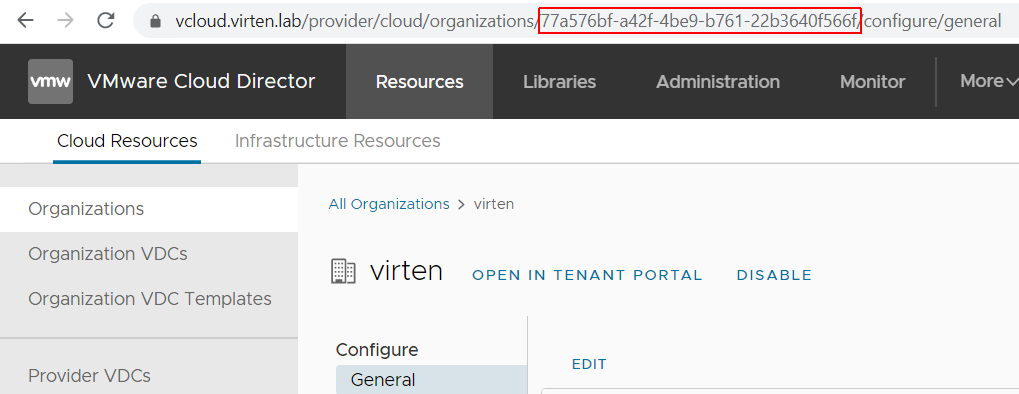

Header X-VMWARE-VCLOUD-TENANT-CONTEXT

This header is required when you run the API call as a global VCD Administrator. It is not required when you use an Organization Administrator. The easiest way to get the Organization ID is to open the Organization in VCD and copy the ID from the URL.
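If you copy the whole URL rather than just the ID, a small helper can extract the UUID. This is a Python sketch with a hypothetical function name. The example URL below is invented for illustration, and the helper simply returns the first UUID-shaped string it finds, so it assumes the Organization ID is the only UUID in the URL.

```python
import re

_UUID_RE = re.compile(
    r"[0-9a-f]{8}-[0-9a-f]{4}-[0-9a-f]{4}-[0-9a-f]{4}-[0-9a-f]{12}", re.IGNORECASE
)


def org_id_from_url(url):
    """Return the first UUID found in a URL, or None if there is none."""
    match = _UUID_RE.search(url)
    return match.group(0) if match else None
```

For a hypothetical URL such as `https://vcd.example.com/tenants/organizations/77a576bf-a42f-4be9-b761-22b3640f566f/general`, the helper returns `77a576bf-a42f-4be9-b761-22b3640f566f`.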

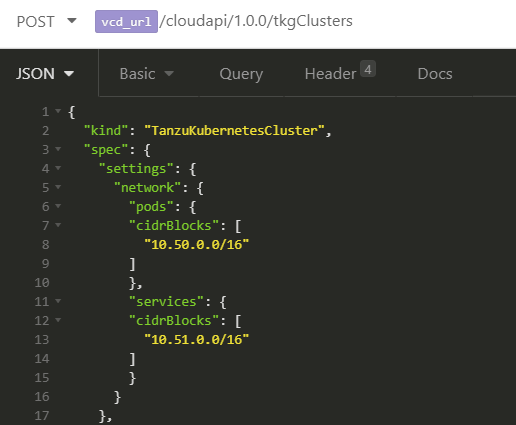

Example API Request

I've used the following API call to deploy a TKG Cluster in VCD on top of a Supervisor Cluster that uses the default CIDRs. The request overrides the Pod and Service CIDRs with non-overlapping ranges.

```
POST /cloudapi/1.0.0/tkgClusters HTTP/1.1
Host: 192.168.222.3
Authorization: Basic YWRtaW5pc3RyYXRvckBzeXN0ZW06Vk13YXJlMSE=
User-Agent: insomnia/2020.4.1
Content-Type: application/json
accept: */*;version=35.0
x-vcloud-authorization: b528f756f94648bea0f7d1d85357bfcf
X-VMWARE-VCLOUD-TENANT-CONTEXT: 77a576bf-a42f-4be9-b761-22b3640f566f

{
  "kind": "TanzuKubernetesCluster",
  "spec": {
    "settings": {
      "network": {
        "pods": {
          "cidrBlocks": [
            "10.50.0.0/16"
          ]
        },
        "services": {
          "cidrBlocks": [
            "10.51.0.0/16"
          ]
        }
      }
    },
    "topology": {
      "workers": {
        "class": "best-effort-xsmall",
        "count": 2,
        "storageClass": "storagegold"
      },
      "controlPlane": {
        "class": "best-effort-xsmall",
        "count": 1,
        "storageClass": "storagegold"
      }
    },
    "distribution": {
      "version": "1.17.8"
    }
  },
  "metadata": {
    "name": "demo",
    "placementPolicy": "virten-tkc",
    "virtualDataCenterName": "ovdc-virten"
  }
}
```
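The same request can be sent without a GUI REST client. This is a minimal Python sketch using only the standard library; the body mirrors the example above. The host, token and function name are placeholders. The sketch omits the Basic `Authorization` header that the REST client also sent, assuming the session token alone authenticates the call. Note that `urllib` normalizes header name casing, which is harmless because HTTP header names are case-insensitive.

```python
import json
import urllib.request

API_VERSION = "35.0"

# Body mirroring the example request above.
EXAMPLE_BODY = {
    "kind": "TanzuKubernetesCluster",
    "spec": {
        "settings": {
            "network": {
                "pods": {"cidrBlocks": ["10.50.0.0/16"]},
                "services": {"cidrBlocks": ["10.51.0.0/16"]},
            }
        },
        "topology": {
            "workers": {"class": "best-effort-xsmall", "count": 2, "storageClass": "storagegold"},
            "controlPlane": {"class": "best-effort-xsmall", "count": 1, "storageClass": "storagegold"},
        },
        "distribution": {"version": "1.17.8"},
    },
    "metadata": {
        "name": "demo",
        "placementPolicy": "virten-tkc",
        "virtualDataCenterName": "ovdc-virten",
    },
}


def build_tkg_cluster_request(host, token, body, org_id=None):
    """Build (but do not send) POST /cloudapi/1.0.0/tkgClusters."""
    headers = {
        "Content-Type": "application/json",
        "Accept": f"*/*;version={API_VERSION}",
        "x-vcloud-authorization": token,
    }
    if org_id:
        # Only needed when calling as a global VCD Administrator.
        headers["X-VMWARE-VCLOUD-TENANT-CONTEXT"] = org_id
    return urllib.request.Request(
        f"https://{host}/cloudapi/1.0.0/tkgClusters",
        data=json.dumps(body).encode("utf-8"),
        headers=headers,
        method="POST",
    )


if __name__ == "__main__":
    req = build_tkg_cluster_request(
        "vcd.example.com",                    # placeholder host
        "<token from POST /api/sessions>",    # placeholder token
        EXAMPLE_BODY,
        org_id="<organization id>",           # placeholder Organization ID
    )
    # Uncomment to send; self-signed lab certificates also need an SSL context.
    # with urllib.request.urlopen(req) as resp:
    #     print(resp.status, resp.read().decode())
    print(req.get_method(), req.full_url)
```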