About a month ago, the USB Native Driver Fling added support for 2.5 Gbit/s network adapters. Until then, it was clear that the embedded network interface is faster and more stable than a 1 Gbit/s USB network adapter. I've never used a USB NIC for storage or management traffic.

With 2.5GBASE-T adapters now supported, I wondered whether it is a good idea to use them for accessing shared storage or for vSAN traffic.

A 2.5 Gbit/s adapter obviously offers more bandwidth, but the USB overhead should come with a latency penalty. How bad is it? To find out, I ran some tests.

Testbed

For all tests, I used the following components:

- Intel NUC8i7HNK

- StarTech 1 Gbit/s USB NIC

- CableCreation CD0673 2.5 Gbit/s USB NIC

- MikroTik CRS305 (10 Gbit/s switch)

- MikroTik CRS326 (1 Gbit/s switch)

- HP Gen10 Microserver with a 10GbE NIC (X520-DA2) running FreeNAS

Test 1 - ICMP (Ping)

Testing latency with ICMP (ping) is very common: everyone does it all the time because it is the quickest way to check availability and latency. But at latencies this low, with a single switch or no switch at all in the path, the results are too imprecise to be meaningful. Still, here are the results:

| | MIN (ms) | AVG (ms) | MAX (ms) |
|---|---|---|---|
| Embedded NIC (Connected to a Switch) | 0.158 | 0.189 | 0.234 |
| 1 Gbit/s USB (Connected to a Switch) | 0.399 | 0.491 | 0.575 |
| 2.5 Gbit/s USB (Cross-connected) | 0.134 | 0.206 | 0.549 |
| 2.5 Gbit/s USB (Connected to a Switch) | 0.193 | 0.285 | 0.548 |

Test 2 - Initial Round Trip Time (iRTT)

The initial round-trip time is determined from the TCP three-way handshake. In my experience, this gives more consistent results than ICMP, which is usually handled as low-priority traffic.

I measured the iRTT between my NUC's network adapters and an unused 10GbE adapter in my storage server. Both tests went through a switch (no cross-connect). The table lists the average iRTT, the maximum iRTT, and the standard deviation.

| | AVG (ms) | MAX (ms) | SD (ms) |
|---|---|---|---|
| Embedded NIC to Storage | 0.135 | 0.302 | 0.05 |
| 2.5 Gbit/s USB NIC to Storage | 0.158 | 0.641 | 0.12 |
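If you want to compute numbers like these yourself, the summary statistics are straightforward once you have the handshake timestamps. The sketch below assumes you have already extracted, per TCP connection, the capture times of the SYN, the SYN-ACK, and the final ACK (for example from a packet capture taken on the NUC; which tool produced the table above isn't stated). It takes iRTT as the time from the SYN to the ACK that completes the handshake, which is, as far as I know, how Wireshark's `tcp.analysis.initial_rtt` field is defined, and it uses the sample standard deviation. Both choices are assumptions, and the `Handshake` type and the timestamps are hypothetical.

```python
import statistics
from dataclasses import dataclass
from typing import Iterable, Tuple


@dataclass
class Handshake:
    """Capture timestamps (seconds) of one TCP three-way handshake, seen on the client."""
    syn: float      # client -> server SYN
    syn_ack: float  # server -> client SYN-ACK
    ack: float      # client -> server ACK that completes the handshake


def irtt_ms(h: Handshake) -> float:
    """Initial RTT in milliseconds, taken here as the time from SYN to the final ACK."""
    if not (h.syn <= h.syn_ack <= h.ack):
        raise ValueError("handshake timestamps are out of order")
    return (h.ack - h.syn) * 1000.0


def summarize(handshakes: Iterable[Handshake]) -> Tuple[float, float, float]:
    """Average, maximum and sample standard deviation of iRTT in ms (needs >= 2 handshakes)."""
    samples = [irtt_ms(h) for h in handshakes]
    return statistics.mean(samples), max(samples), statistics.stdev(samples)


if __name__ == "__main__":
    # Hypothetical timestamps, not the measurements behind the table above.
    captured = [
        Handshake(0.0, 0.0001, 0.00012),
        Handshake(1.0, 1.0001, 1.00015),
        Handshake(2.0, 2.0001, 2.0003),
    ]
    avg, worst, sd = summarize(captured)
    print(f"AVG {avg:.3f} ms  MAX {worst:.3f} ms  SD {sd:.3f} ms")
```

Using the population standard deviation (`statistics.pstdev`) instead would give slightly smaller SD values for small sample counts.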

Test 3 - iPerf3 Bandwidth test

For the third test, I ran an iPerf3 server on the storage system, with the NUC acting as the client. This is a pure bandwidth test.

| | Bandwidth |
|---|---|
| Embedded NIC to Storage | 952 Mbits/sec |
| 2.5 Gbit/s USB NIC to Storage | 2.02 Gbits/sec |
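A run like this is easy to script. The sketch below assumes iperf3 is installed on the client and `iperf3 -s` is running on the storage box. It uses iperf3's `-c` (client), `-t` (duration) and `-J` (JSON output) options and reads the receiver-side TCP rate from `end.sum_received.bits_per_second` in the JSON report. The function names and the hostname `storage.lab` are hypothetical.

```python
import json
import subprocess


def received_gbit_per_s(iperf3_json: str) -> float:
    """Receiver-side throughput in Gbit/s from an iperf3 JSON report of a TCP test."""
    report = json.loads(iperf3_json)
    return report["end"]["sum_received"]["bits_per_second"] / 1e9


def run_client(server: str, seconds: int = 10) -> float:
    """Run iperf3 in client mode against `server` and return the received Gbit/s."""
    # -c: connect to an iperf3 server, -t: test length in seconds, -J: JSON report on stdout
    proc = subprocess.run(
        ["iperf3", "-c", server, "-t", str(seconds), "-J"],
        capture_output=True,
        text=True,
        check=True,  # raise CalledProcessError if iperf3 fails (e.g. server unreachable)
    )
    return received_gbit_per_s(proc.stdout)
```

For example, `run_client("storage.lab")` runs a 10-second test and returns the receiver-side rate in Gbit/s; repeating it once per NIC gives one row of the table each.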

Test 4 - vMotion Live Migration

The next test involves a real-world workload: I migrated a virtual machine with 12 GB of RAM between two ESXi hosts using different network configurations.

| | Bandwidth | Time |
|---|---|---|
| Embedded NIC (Connected to a Switch) | 112 MB/s | 124 seconds |
| 1 Gbit/s USB (Connected to a Switch) | 99 MB/s | 138 seconds |
| 2.5 Gbit/s USB (Cross-connected) | 230 MB/s | 57 seconds |
| 2.5 Gbit/s USB (Connected to a Switch) | 222 MB/s | 59 seconds |
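To put these numbers in perspective, it helps to express the nominal link speed in the same unit: at 8 bits per byte, 1 Gbit/s carries at most 125 MB/s and 2.5 Gbit/s at most 312.5 MB/s, before any Ethernet, TCP or USB overhead. The short calculation below assumes the vMotion rates are decimal megabytes per second; it compares each run with its link's ceiling and computes the speed-up over the embedded NIC from the migration times.

```python
def line_rate_mb_s(gbit_per_s: float) -> float:
    """Nominal link ceiling in decimal MB/s (8 bits per byte, no protocol overhead)."""
    return gbit_per_s * 1000 / 8


# (link speed in Gbit/s, vMotion rate in MB/s, migration time in s), copied from the table
RUNS = {
    "Embedded NIC (switch)": (1.0, 112, 124),
    "1 Gbit/s USB (switch)": (1.0, 99, 138),
    "2.5 Gbit/s USB (cross-connected)": (2.5, 230, 57),
    "2.5 Gbit/s USB (switch)": (2.5, 222, 59),
}

if __name__ == "__main__":
    baseline_secs = RUNS["Embedded NIC (switch)"][2]
    for name, (gbit, mb_s, secs) in RUNS.items():
        ceiling = line_rate_mb_s(gbit)
        print(f"{name}: {mb_s / ceiling:.0%} of {ceiling:.1f} MB/s, "
              f"speed-up vs. embedded NIC {baseline_secs / secs:.2f}x")
```

Under that assumption, the 1 Gbit/s runs land at roughly 80 to 90 % of their ceiling, while the 2.5 Gbit/s USB runs reach just over 70 %; the USB adapter still finishes the migration in less than half the time.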

Test 5 - Virtual Machine Disk Performance

For the next test, I created a virtual machine and used HD Tune to run several performance tests. All tests used an identical setup; only the ESXi network interface differed. There was always a switch between ESXi and the storage (no cross-connect).

| | 1 Gbit/s Embedded NIC | 2.5 Gbit/s USB NIC |
|---|---|---|
| Random Write 4 KB | 2704 IOPS | 4307 IOPS |
| Random Write 64 KB | 993 IOPS | 1653 IOPS |
| Random Write 1 MB | 97 IOPS | 211 IOPS |
| Random Read 4 KB | 2604 IOPS | 3994 IOPS |
| Random Read 64 KB | 436 IOPS | 1822 IOPS |
| Random Read 1 MB | 102 IOPS | 97 IOPS |
| Sequential Write | 102 MB/s | 198 MB/s |
| Sequential Read | 105 MB/s | 118 MB/s |

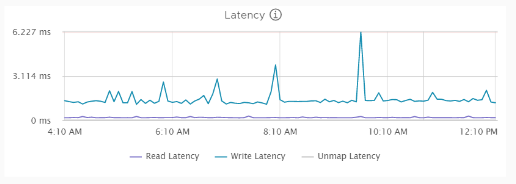
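IOPS only become comparable with the bandwidth results once they are multiplied by the block size. The sketch below does that for the HD Tune results, assuming HD Tune's "KB" and "MB" are 1024-based, and reports decimal MB/s so the values line up with the 125 MB/s ceiling of a 1 Gbit/s link.

```python
# HD Tune block sizes in bytes; treating "KB"/"MB" as 1024-based is an assumption.
BLOCK_BYTES = {"4 KB": 4 * 1024, "64 KB": 64 * 1024, "1 MB": 1024 * 1024}

# (test, block size, embedded 1 Gbit/s NIC IOPS, 2.5 Gbit/s USB NIC IOPS), copied from the table
RESULTS = [
    ("Random Write", "4 KB", 2704, 4307),
    ("Random Write", "64 KB", 993, 1653),
    ("Random Write", "1 MB", 97, 211),
    ("Random Read", "4 KB", 2604, 3994),
    ("Random Read", "64 KB", 436, 1822),
    ("Random Read", "1 MB", 102, 97),
]


def iops_to_mb_s(iops: float, block: str) -> float:
    """Throughput implied by an IOPS figure (IOPS x block size) in decimal MB/s."""
    return iops * BLOCK_BYTES[block] / 1_000_000


if __name__ == "__main__":
    for test, block, embedded, usb in RESULTS:
        print(f"{test} {block:>5}: embedded {iops_to_mb_s(embedded, block):6.1f} MB/s, "
              f"USB {iops_to_mb_s(usb, block):6.1f} MB/s")
```

Under these assumptions, the 1 MB tests on the embedded NIC come out at roughly 100 MB/s, close to what a 1 Gbit/s link can carry, while the USB NIC reaches about 220 MB/s for 1 MB random writes but stays near 100 MB/s for 1 MB random reads.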

Test 6 - vSAN Latency - Real Workload

For the final test, I configured two Intel NUCs with vSAN and migrated a fair amount of real workload to the vSAN datastore, including vCenter, NSX-T, Edges, and some Kubernetes VMs. Each configuration ran for 24 hours. I'm only reporting write latency, as this is the value I'm interested in; there is no need to test read latency because reads are served from the cache.

| | latencyAvgWrite |
|---|---|
| Embedded NIC (Connected to a Switch) | 1.502 ms |
| 2.5 Gbit/s USB (Cross-connected) | 1.415 ms |
| 2.5 Gbit/s USB (Connected to a Switch) | 1.596 ms |
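Because the absolute values are close together, a relative comparison against the embedded NIC makes the differences easier to judge. The small calculation below just computes the percentage change of each configuration's average write latency from the table.

```python
# Average vSAN write latency in ms per configuration, copied from the table above
LATENCY_MS = {
    "Embedded NIC (switch)": 1.502,
    "2.5 Gbit/s USB (cross-connected)": 1.415,
    "2.5 Gbit/s USB (switch)": 1.596,
}


def relative_change(value: float, baseline: float) -> float:
    """Fractional change of `value` compared with `baseline` (negative means lower)."""
    return (value - baseline) / baseline


if __name__ == "__main__":
    base = LATENCY_MS["Embedded NIC (switch)"]
    for name, ms in LATENCY_MS.items():
        print(f"{name}: {ms:.3f} ms ({relative_change(ms, base):+.1%})")
```

This puts the cross-connected USB setup about 6 % below and the switched USB setup about 6 % above the embedded NIC.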

Conclusion

Based on my tests, I'm not convinced that moving to 2.5 Gbit/s for performance reasons is worth the effort. The additional bandwidth is nice for large streams like vMotion or file copies, but in homelab setups you usually won't hit the 1 Gbit/s limit. You can achieve more IOPS with 2.5 Gbit/s when using shared storage, but I don't notice the difference with normal workloads.

Additional note: USB network adapters are more likely to be wonky than native network cards. One of my adapters reconnects itself every few hours. Keep an eye on vmkernel.log for the following behavior:

```
2020-10-03T06:22:26.736Z cpu1:1049160)DMA: 733: DMA Engine 'vusb1-dma-engine' destroyed.
2020-10-03T06:22:26.736Z cpu0:1258318)NetSched: 671: vusb1-0-tx: worldID = 1258318 exits
2020-10-03T06:22:27.537Z cpu4:1049172)DMA: 688: DMA Engine 'vusb1-dma-engine' created using mapper 'DMANull'.
2020-10-03T06:22:27.537Z cpu4:1049172)Uplink: 14291: Opening device vusb1
2020-10-03T06:22:27.537Z cpu0:1048644)Uplink: 14134: Detected pseudo-NIC for 'vusb1'
2020-10-03T06:22:32.322Z cpu1:1048644)NetqueueBal: 5046: vusb1: new netq module, reset logical space needed
2020-10-03T06:22:32.322Z cpu1:1048644)NetqueueBal: 5075: vusb1: plugins to call differs, reset logical space
2020-10-03T06:22:32.322Z cpu1:1048644)NetqueueBal: 5111: vusb1: device Up notification, reset logical space needed
2020-10-03T06:22:32.322Z cpu1:1048644)NetqueueBal: 3132: vusb1: rxQueueCount=0, rxFiltersPerQueue=0, txQueueCount=0 rxQueuesFeatures=0x0
2020-10-03T06:22:32.322Z cpu2:1262088)NetSched: 671: vusb1-0-tx: worldID = 1262088 exits
```
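To catch this without reading the log by hand, you can search vmkernel.log (on ESXi it is located at /var/log/vmkernel.log) for the `Opening device vusbN` entries shown above. The sketch below assumes the line format of this excerpt, which may differ on other ESXi builds; any open after the initial one at boot suggests the adapter dropped and came back. The function names are hypothetical, and you would run it wherever Python is available, for example on a copy of the log.

```python
import re
from collections import Counter
from datetime import datetime

# Matches entries like
# "2020-10-03T06:22:27.537Z cpu4:1049172)Uplink: 14291: Opening device vusb1".
# The pattern follows the excerpt above and is an assumption for other ESXi versions.
OPEN_RE = re.compile(
    r"(?P<ts>\d{4}-\d{2}-\d{2}T\d{2}:\d{2}:\d{2}\.\d+)Z "
    r"cpu\d+:\d+\)Uplink: \d+: Opening device (?P<dev>vusb\d+)"
)


def find_uplink_opens(lines):
    """Yield (timestamp, device) for every vusbN uplink that ESXi opens."""
    for line in lines:
        # finditer also copes with several log entries glued onto one line
        for m in OPEN_RE.finditer(line):
            ts = datetime.strptime(m["ts"], "%Y-%m-%dT%H:%M:%S.%f")
            yield ts, m["dev"]


def count_uplink_opens(path):
    """Count uplink opens per vusbN device in a vmkernel.log file."""
    with open(path, encoding="utf-8", errors="replace") as log:
        return Counter(dev for _, dev in find_uplink_opens(log))
```

For example, `count_uplink_opens("vmkernel.log")` returns a `Counter` keyed by device name; a device that shows up more than once since the last boot has most likely reconnected.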