Many applications running on container platforms still require external resources such as databases. In the last article, I explained how to access TKC resources from VMware Cloud Director Tenant Org Networks. In this article, I'm going to explain how to access a database running on a virtual machine in VMware Cloud Director from a Tanzu Kubernetes Cluster that was deployed using the latest Container Service Extension (CSE) in VMware Cloud Director 10.2.

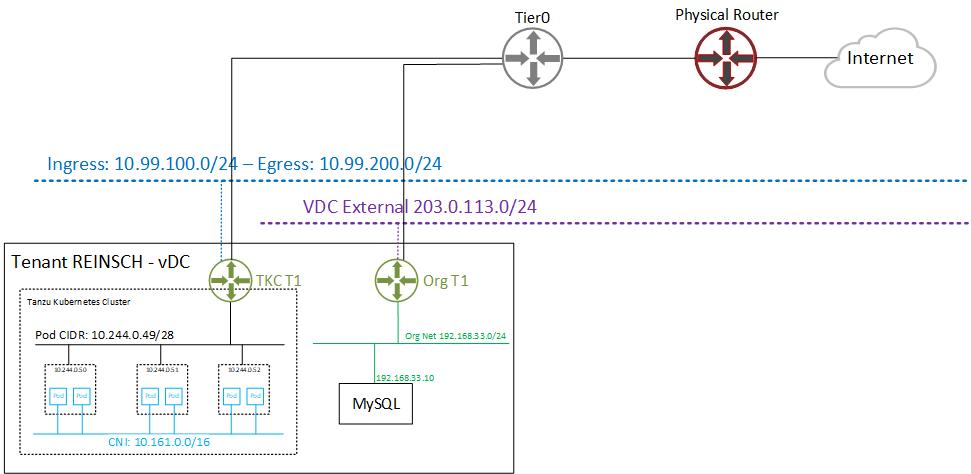

If you are not familiar with the vSphere with Tanzu integration in VMware Cloud Director, the following diagram shows the communication. I have a single Org VDC with a MySQL server running in an Org Network. When traffic leaves the Org Network, the private IP address is translated (SNAT) to a public IP from the VCD external network (203.0.113.0/24). The customer also has a Tanzu Kubernetes Cluster (TKC) deployed using VMware Cloud Director. This creates another Tier-1 Gateway, which is connected to the same upstream Tier-0 router. When the TKC communicates outbound, its traffic is also translated on the Tier-1 using an address from the Egress Pool (10.99.200.0/24).

So, the two networks cannot communicate with each other directly. As of VMware Cloud Director 10.2.2, communication is only implemented in one direction: Org Network -> TKC. This is done by automatically configuring an SNAT rule on the Org T1 that translates to its primary public address. With this address, the Org Network can reach all Kubernetes services that are exposed using an address from the Ingress Pool, which is the default when exposing services in a TKC.

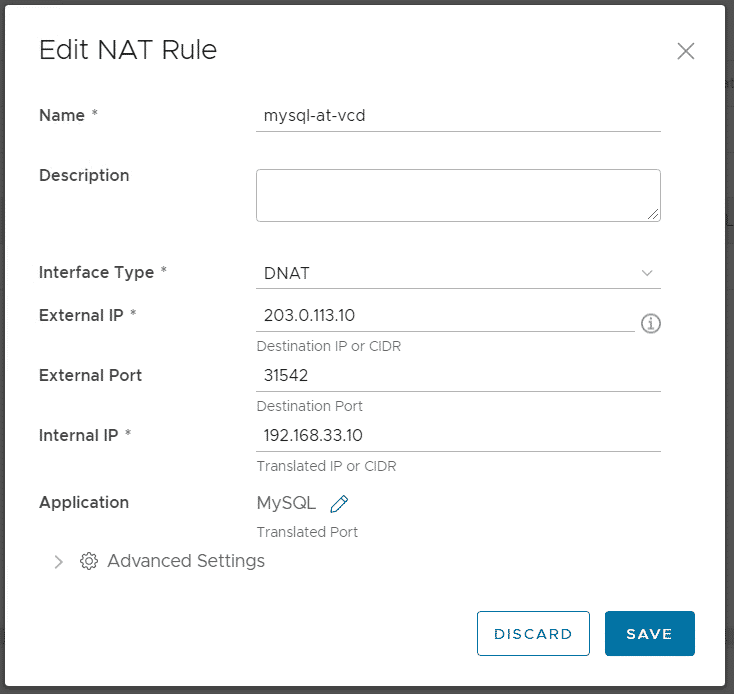

Now I want my MySQL server, running in the Org Network on 192.168.33.10, to be available to Pods on the TKC. I create a DNAT rule using the primary address of my Tier-1 Gateway. To be able to publish multiple services without wasting public addresses, I translate a randomly chosen high external port (31542) to the MySQL port.

DNAT: 203.0.113.10:31542 > 192.168.33.10:3306

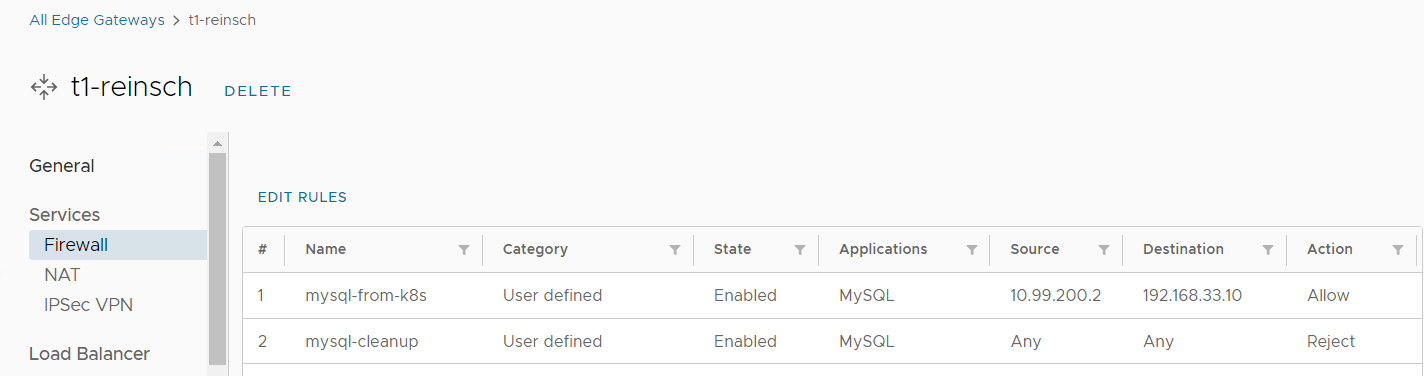
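To make the port-sharing idea concrete, here is a minimal Python sketch that models DNAT rules as a lookup table. It is only an illustration of the translation logic, not how the Tier-1 Gateway is implemented. The first rule is the one from this article; the second rule (a second server on 192.168.33.11) is a hypothetical addition to show how several services can share one public address.

```python
from typing import NamedTuple, Optional, Tuple


class DnatRule(NamedTuple):
    external_ip: str
    external_port: int
    internal_ip: str
    internal_port: int


# First rule: taken from this article.
# Second rule: hypothetical, only here to show port-based sharing of one IP.
RULES = [
    DnatRule("203.0.113.10", 31542, "192.168.33.10", 3306),
    DnatRule("203.0.113.10", 31543, "192.168.33.11", 5432),  # hypothetical
]


def translate(dst_ip: str, dst_port: int) -> Optional[Tuple[str, int]]:
    """Return the internal (ip, port) a packet is forwarded to, or None."""
    for rule in RULES:
        if (rule.external_ip, rule.external_port) == (dst_ip, dst_port):
            return (rule.internal_ip, rule.internal_port)
    return None  # no matching rule: nothing is forwarded


if __name__ == "__main__":
    print(translate("203.0.113.10", 31542))  # ('192.168.33.10', 3306)
    print(translate("203.0.113.10", 3306))   # None
```

Because the match is on the address and port pair, the standard MySQL port 3306 on the public address stays closed. Only the chosen high port is forwarded.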

To mitigate the security concerns of exposing a MySQL server to the Internet, create a firewall rule that only allows traffic from the TKC's egress address (10.99.200.2). This address is bound to the Kubernetes Namespace that is created when assigning a Kubernetes policy to an Organization VDC in Cloud Director. This address is not shared with other customers.
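The effect of such a rule can be sketched in a few lines of Python using the standard `ipaddress` module. This is only a model of the source match under the addresses used in this article. The function name `is_allowed` and the second test address are hypothetical, and destination matching is left out on purpose because it depends on how the platform orders NAT and firewall processing.

```python
import ipaddress

# Addresses from this article.
ALLOWED_SOURCE = ipaddress.ip_address("10.99.200.2")  # egress address of the TKC
EGRESS_POOL = ipaddress.ip_network("10.99.200.0/24")  # pool the address comes from


def is_allowed(src_ip: str) -> bool:
    """Model of the firewall rule: allow exactly one source address."""
    return ipaddress.ip_address(src_ip) == ALLOWED_SOURCE


if __name__ == "__main__":
    print(is_allowed("10.99.200.2"))  # True
    # Hypothetical neighbour from the same pool: inside the /24, still denied.
    other = ipaddress.ip_address("10.99.200.3")
    print(other in EGRESS_POOL, is_allowed(str(other)))  # True False
```

Matching the single egress address instead of the whole Egress Pool is the design choice that matters here: other addresses in the pool may belong to other namespaces.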

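Now you should be able to access the MySQL DB from Pods using the address 203.0.113.10:31542. To give it a more Kubernetes-like behavior, create a Service and a matching Endpoints object using kubectl to map the external service to Kubernetes objects. The Service has no selector, so Kubernetes does not manage the endpoints itself and uses the manually created Endpoints object with the same name. This can be done by using the following YAML file: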

mysql-at-vcd.yaml

    kind: "Service"
    apiVersion: "v1"
    metadata:
      name: "mysql-at-vcd"
    spec:
      ports:
        - name: "mysql"
          protocol: "TCP"
          port: 3306
          targetPort: 31542
          nodePort: 0
    ---
    kind: "Endpoints"
    apiVersion: "v1"
    metadata:
      name: "mysql-at-vcd"
    subsets:
      - addresses:
          - ip: "203.0.113.10"
        ports:
          - port: 31542
            name: "mysql"

Apply the YAML file:

    # kubectl apply -f mysql-at-vcd.yaml
    service/mysql-at-vcd created
    endpoints/mysql-at-vcd created

You should now have two Kubernetes Objects:

    # kubectl get service mysql-at-vcd
    NAME           TYPE        CLUSTER-IP   EXTERNAL-IP   PORT(S)    AGE
    mysql-at-vcd   ClusterIP   10.16.1.82   <none>        3306/TCP   11m

    # kubectl get endpoints mysql-at-vcd
    NAME           ENDPOINTS            AGE
    mysql-at-vcd   203.0.113.10:31542   12m

You can now access the MySQL Service by using the DNS name mysql-at-vcd. The cluster-internal DNS resolves the name to the Cluster-IP 10.16.1.82, and traffic to that address on port 3306 is forwarded to the endpoint 203.0.113.10:31542. You can easily test the connection by bringing up a mysql-client pod.
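If you only want to check that the path through the Service and the DNAT rule is open, without logging in to MySQL, a plain TCP connection test is enough. The following is a minimal Python sketch using only the standard library. The helper name `can_connect` is hypothetical, and it only verifies that a TCP handshake succeeds, not that MySQL accepts your credentials.

```python
import socket


def can_connect(host: str, port: int, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to host:port can be established."""
    try:
        # create_connection resolves the name and performs the TCP handshake.
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers DNS failures, refused connections and timeouts.
        return False
```

Run from a Pod in the same Namespace as the Service, `can_connect("mysql-at-vcd", 3306)` should return `True` once the DNAT and firewall rules are in place. A `False` result points to name resolution, the firewall rule, or the DNAT rule as the places to look.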

    # kubectl run -it --rm --image=mysql:5.6 --restart=Never mysql-client -- mysql -h mysql-at-vcd -u admin -p vmware
    If you don't see a command prompt, try pressing enter.

    mysql> show databases;
    +--------------------+
    | Database           |
    +--------------------+
    | information_schema |
    | mysql              |
    | performance_schema |
    | test               |
    | vcd                |
    +--------------------+

Hi, great article. Is it possible in vSphere with Tanzu with vCloud Director to give pods access to the Internet? How can it be done with the egress network being in private address space?

If you want Internet access for pods, you have to SNAT the egress address on the Tier-0 Gateway (or external Routers).