Since VMware introduced vSphere with Tanzu support in VMware Cloud Director 10.2, I have been struggling to find a proper way to give customers bidirectional communication between Virtual Machines and Pods. In earlier Kubernetes implementations using Container Service Extension (CSE) "Native Cluster", the control plane and workers were placed directly in Organization networks. Communication between Pods and Virtual Machines was quite easy, even when they were placed in different subnets, because traffic could be routed through the Tier1 Gateway.

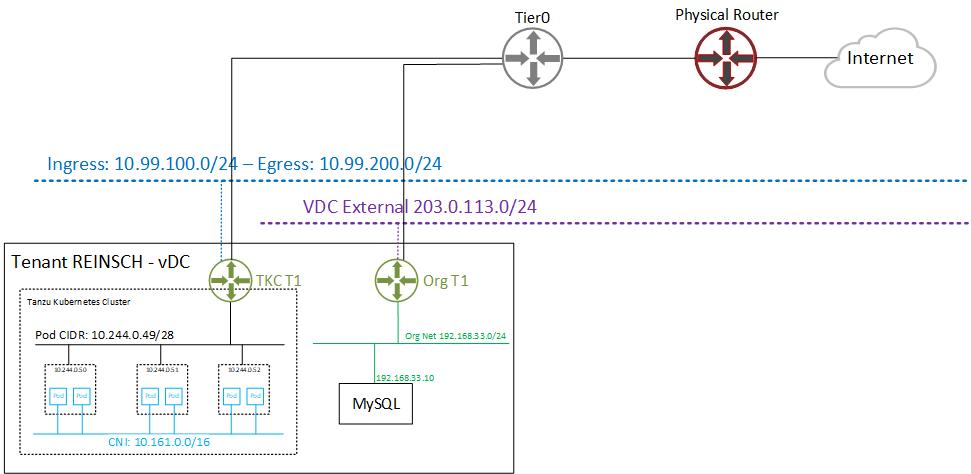

With Tanzu in VMware Cloud Director, Kubernetes Clusters get their own Tier1 Gateway. While it would be technically possible to route between the Tanzu and VCD Tier1s through the Tier0, the typical Cloud Director Org Network is hidden behind a NAT. Because every tenant is free to choose its own private address ranges, there is just no way to prevent overlapping networks when advertising these Tier1 routers to the shared upstream Tier0. The following diagram shows the VCD networking with Tanzu enabled.
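To make the overlap problem concrete, here is a minimal Python sketch using the standard `ipaddress` module. The tenant names and their Org Networks are hypothetical. The point is that two tenants can legitimately pick the same private range behind their own NAT, so advertising both to a shared Tier0 would produce conflicting routes.

```python
import ipaddress
from itertools import combinations

# Hypothetical tenant Org Networks. Behind per-tenant NAT, two tenants
# choosing the same private range is perfectly normal.
tenant_org_networks = {
    "tenant-a": ipaddress.ip_network("192.168.33.0/24"),
    "tenant-b": ipaddress.ip_network("192.168.33.0/24"),
    "tenant-c": ipaddress.ip_network("192.168.55.0/24"),
}


def find_overlaps(networks):
    """Return tenant pairs whose networks would clash if all were advertised to one Tier0."""
    clashes = []
    for (name_a, net_a), (name_b, net_b) in combinations(networks.items(), 2):
        if net_a.overlaps(net_b):
            clashes.append((name_a, name_b))
    return clashes


if __name__ == "__main__":
    for a, b in find_overlaps(tenant_org_networks):
        print(f"{a} and {b} overlap on {tenant_org_networks[a]}")
```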

With Cloud Director 10.2.2, VMware further optimized the implementation by automatically setting up Firewall Rules on the TKC Tier1 that allow only the tenant's Org Networks to access Kubernetes services. VMware also published a guide on how customers can NAT their public IP addresses to TKC Ingress addresses to make services accessible from the Internet. The method is described here (see Publish Kubernetes Services using VCD Org Networks). Unfortunately, communication from Pods to Virtual Machines in VCD still does not seem to be in VMware's scope.

While trying to develop a decent solution using Kubernetes Endpoints, I came up with a questionable workaround. I highly doubt that these methods are supported or useful in production, but I still want to share them to show what could actually be possible.

This is probably a bad idea. You have been warned!

The task is simple: using the picture above, I want to access the MySQL Database (192.168.33.10) from containers in the Pod Network 10.244.0.49/28. While the Pod, Ingress, and Egress networks are known to the Tier0 by default (this is managed by vSphere with Tanzu), there is no way to announce the Org Network (192.168.33.0/24) without risking overlaps with other tenants' networks. Given that, routing through the Tier0 is ruled out, and a direct connection from the TKC Tier1 to the Org Tier1 needs to be implemented.

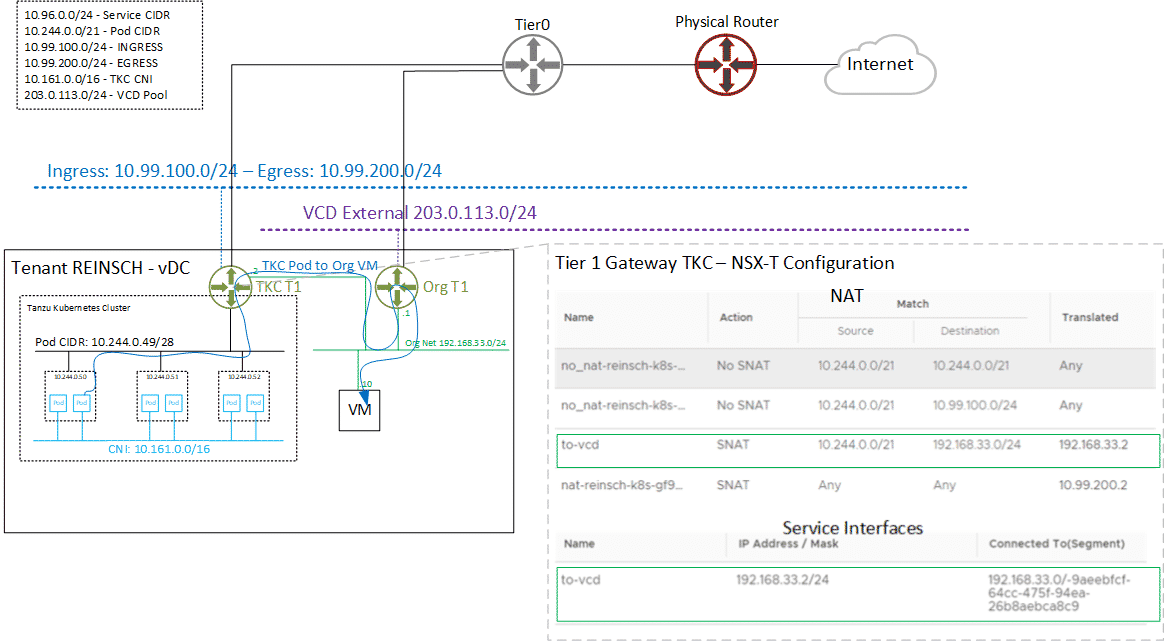

The solution I came up with is to add an Interface to the TKC Tier1 and connect that Interface to the Org Network behind the Org Tier1. This requires two configurations on the TKC Tier1 in NSX-T:

- Service Interface with address 192.168.33.2 in the Org Network 192.168.33.0/24

- SNAT Rule that translates the source address of traffic destined for 192.168.33.0/24 to the Service Interface address 192.168.33.2
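For readers who prefer the API over the NSX-T UI, the two objects could look roughly like the following NSX-T Policy API requests. This is a sketch under several assumptions: the Tier1 ID, locale-services ID, interface and rule IDs, and the segment path are hypothetical placeholders. Objects created by NCP for vSphere with Tanzu may also be protected or managed differently in your environment. The script only builds and prints the request paths and JSON bodies; it does not send anything.

```python
import json

# Hypothetical identifiers: replace them with the real IDs of the TKC Tier1,
# its locale-services object, and the segment backing the VCD Org Network.
TKC_TIER1_ID = "tkc-tier1"
LOCALE_SERVICES_ID = "default"
ORG_SEGMENT_PATH = "/infra/segments/org-network-33"


def service_interface_request(ip, prefix_len):
    """Build the Policy API path and body for a Service Interface on the TKC Tier1."""
    path = (f"/policy/api/v1/infra/tier-1s/{TKC_TIER1_ID}"
            f"/locale-services/{LOCALE_SERVICES_ID}/interfaces/org-uplink")
    body = {
        "segment_path": ORG_SEGMENT_PATH,
        "subnets": [{"ip_addresses": [ip], "prefix_len": prefix_len}],
    }
    return path, body


def snat_request(destination, translated):
    """Build the Policy API path and body for an SNAT rule matched on destination."""
    path = f"/policy/api/v1/infra/tier-1s/{TKC_TIER1_ID}/nat/USER/nat-rules/snat-to-org"
    body = {
        "action": "SNAT",
        "destination_network": destination,
        "translated_network": translated,
        "enabled": True,
    }
    return path, body


if __name__ == "__main__":
    for path, body in (service_interface_request("192.168.33.2", 24),
                       snat_request("192.168.33.0/24", "192.168.33.2")):
        print("PATCH", path)
        print(json.dumps(body, indent=2))
```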

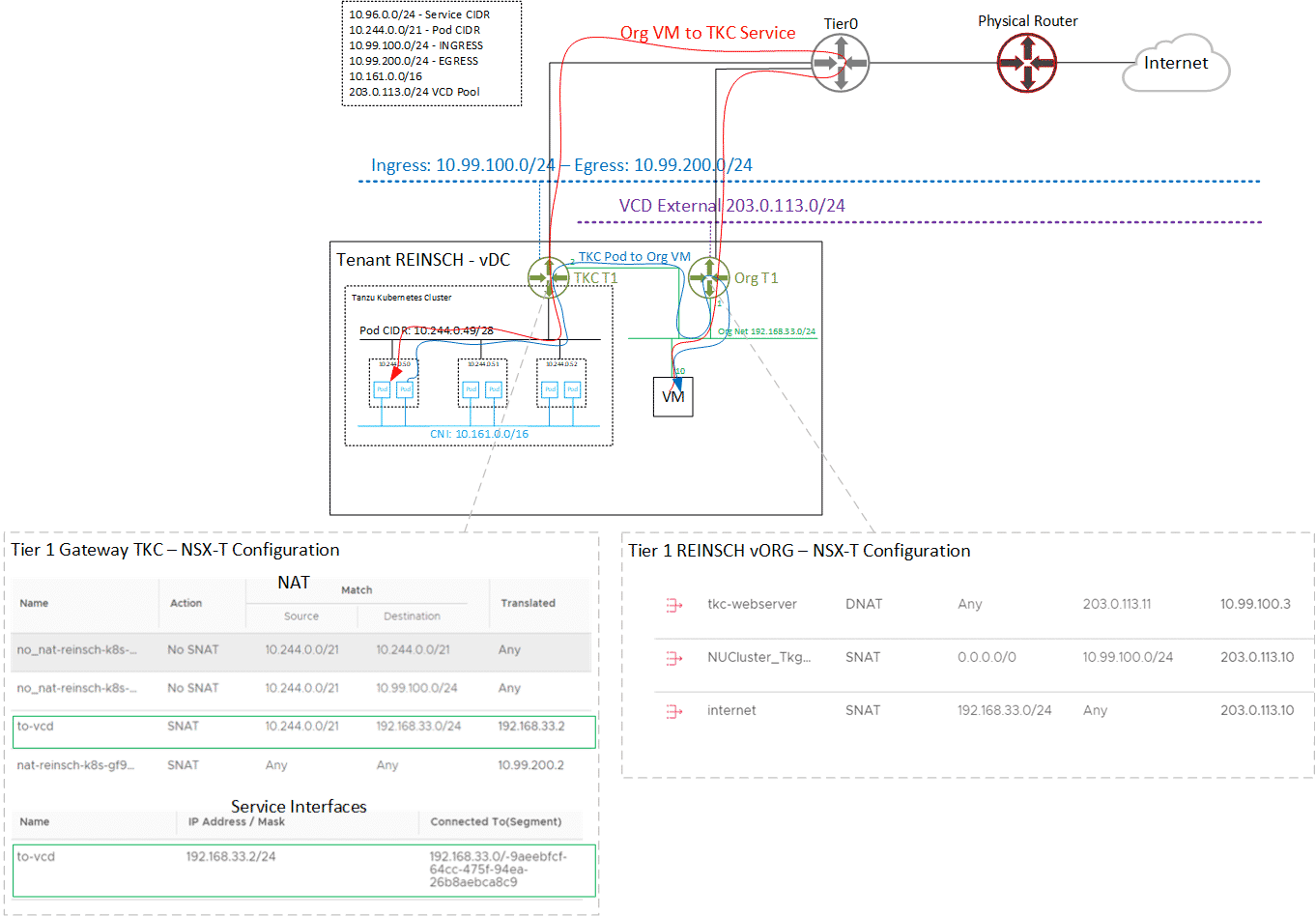

The following diagram shows the communication path in both directions.

- Org VM to TKC Service communication uses the supported NAT through Tier0.

- TKC Pod to Org VM communication uses the Service Interface.
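The Pod-to-VM direction can be illustrated with a small packet walk. This minimal sketch models only the SNAT rule on the TKC Tier1, and the Pod address 10.244.0.50 is a hypothetical example. Because the VM sees the request coming from 192.168.33.2, an address in its own subnet, it sends the reply directly back to the Service Interface. Without the SNAT, the VM would most likely hand the reply for a 10.244.x.x address to its default gateway, the Org Tier1, resulting in an asymmetric path.

```python
import ipaddress
from dataclasses import dataclass, replace

SERVICE_INTERFACE = ipaddress.ip_address("192.168.33.2")
ORG_NETWORK = ipaddress.ip_network("192.168.33.0/24")


@dataclass(frozen=True)
class Packet:
    src: ipaddress.IPv4Address
    dst: ipaddress.IPv4Address


def tkc_tier1_egress(pkt: Packet) -> Packet:
    """Apply the SNAT rule: traffic to the Org Network leaves with the Service Interface address."""
    if pkt.dst in ORG_NETWORK:
        return replace(pkt, src=SERVICE_INTERFACE)
    return pkt


def vm_reply(pkt: Packet) -> Packet:
    """The VM answers whatever source address it sees on the wire."""
    return Packet(src=pkt.dst, dst=pkt.src)


if __name__ == "__main__":
    # Hypothetical Pod address talking to the MySQL VM.
    request = Packet(ipaddress.ip_address("10.244.0.50"), ipaddress.ip_address("192.168.33.10"))
    on_wire = tkc_tier1_egress(request)
    reply = vm_reply(on_wire)
    print("Request on the Org Network:", on_wire)
    print("Reply stays on-link:", reply.dst in ORG_NETWORK)
```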

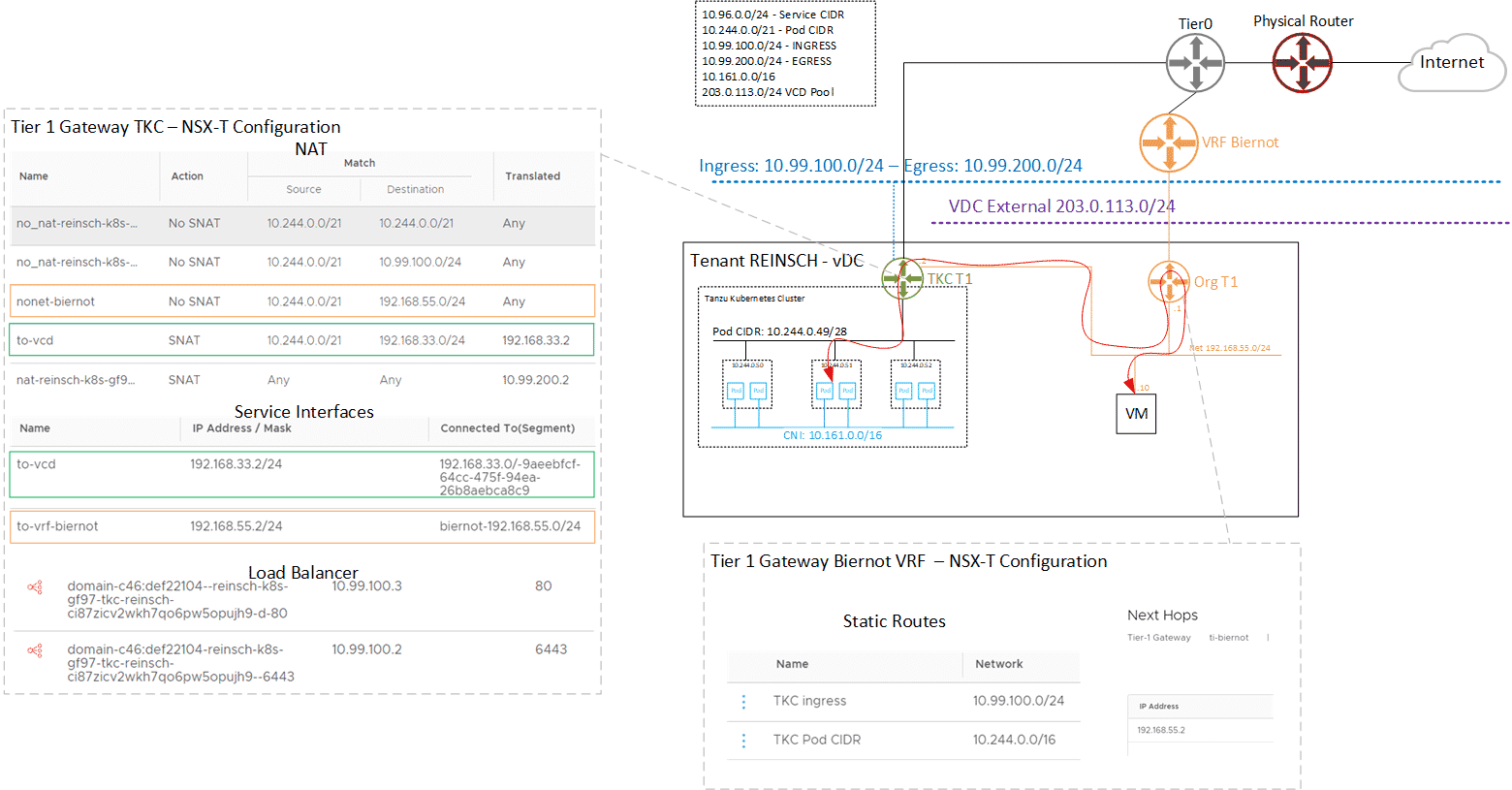

Tanzu Kubernetes Cluster to VRF Routing

Now I want to take it a step further and route the Tanzu Kubernetes Cluster Tier1 into a VRF. I want to allow routed communication between the TKC and the Org Network 192.168.55.0/24, which is connected to a VRF. This requires some additional steps:

- TKC Tier1: Service Interface with address 192.168.55.2 in the Org Network 192.168.55.0/24

- TKC Tier1: NO SNAT Rule for Destination 192.168.55.0/24

- VRF Tier1: Static Route to the Ingress Pool 10.99.100.0/24 - Next Hop: 192.168.55.2

- VRF Tier1: Static Route to the Pod Network 10.244.0.0/16 - Next Hop: 192.168.55.2
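The VRF variant can be sketched the same way. This minimal model assumes the VRF Tier1 routing table holds the two static routes listed above plus the connected Org Network and a default route towards the Tier0, both added only for illustration. It also models the NO SNAT rule on the TKC Tier1 as an exception to a hypothetical Egress SNAT address (10.99.200.5). As a result, Pods keep their own source addresses towards 192.168.55.0/24, and the VM's replies are routed back through 192.168.55.2.

```python
import ipaddress

# VRF Tier1 routing table. The first two entries are the static routes from
# the list above; the connected Org Network and the default route are illustrative.
VRF_TIER1_ROUTES = [
    (ipaddress.ip_network("10.99.100.0/24"), "192.168.55.2"),  # Ingress Pool
    (ipaddress.ip_network("10.244.0.0/16"), "192.168.55.2"),   # Pod Network
    (ipaddress.ip_network("192.168.55.0/24"), "connected"),    # Org Network
    (ipaddress.ip_network("0.0.0.0/0"), "tier0-uplink"),       # hypothetical default route
]

NO_SNAT_DESTINATION = ipaddress.ip_network("192.168.55.0/24")
EGRESS_IP = "10.99.200.5"  # hypothetical Egress SNAT address of the namespace


def next_hop(destination: str) -> str:
    """Pick the next hop on the VRF Tier1 by longest-prefix match."""
    addr = ipaddress.ip_address(destination)
    candidates = [(net, hop) for net, hop in VRF_TIER1_ROUTES if addr in net]
    _, hop = max(candidates, key=lambda route: route[0].prefixlen)
    return hop


def tkc_source_after_nat(pod_ip: str, destination: str) -> str:
    """Source address leaving the TKC Tier1.

    The NO SNAT rule is assumed to be evaluated before the namespace SNAT rule,
    so Pod addresses are preserved towards 192.168.55.0/24.
    """
    if ipaddress.ip_address(destination) in NO_SNAT_DESTINATION:
        return pod_ip
    return EGRESS_IP


if __name__ == "__main__":
    src = tkc_source_after_nat("10.244.0.50", "192.168.55.20")
    print("Pod -> VM source:", src)
    print("VM reply next hop:", next_hop(src))
    print("Ingress next hop:", next_hop("10.99.100.7"))
```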

And now we have a routed connection between the TKC and VCD Org Networks.
