VMware Cloud Director 10.2.2 brings a couple of enhancements to the vSphere with Tanzu integration. While we are still waiting for VRF support in vSphere with Tanzu to fully separate Supervisor Namespaces, the implementation introduced in VCD 10.2.2 should be valid for production workloads.

This article explains the new features and the issues I ran into during the implementation:

- VCD with Supervisor Control Plane communication

- Tanzu Certificate Issues

- Tanzu Kubernetes Cluster Tenant Network Isolation

- Publish Kubernetes Services using VCD Org Networks

VCD with Supervisor Control Plane communication

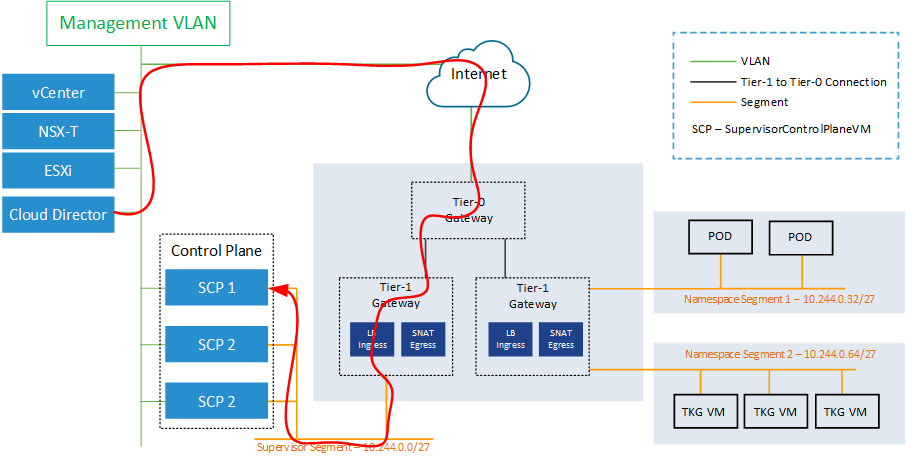

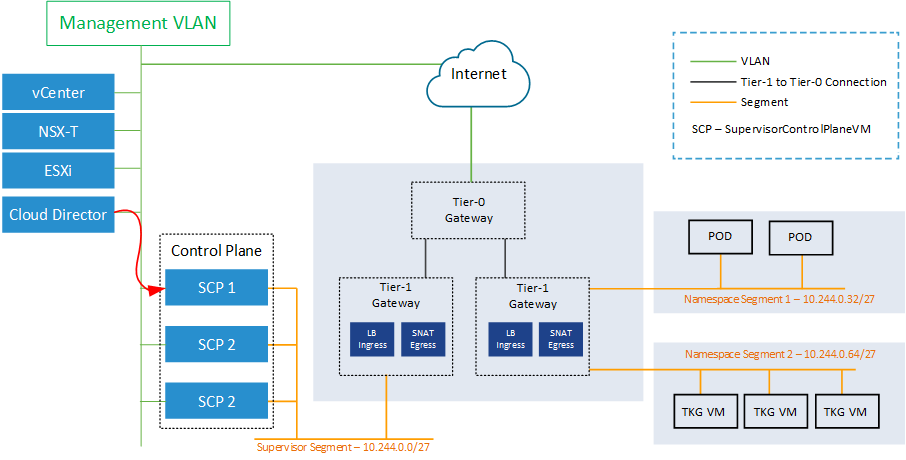

In Cloud Director 10.2.0, the first release to support vSphere with Tanzu, Cloud Director used the NSX-T based Load Balancer from the Ingress Pool to communicate with the Supervisor Control Plane. This is typically the first address from the "Ingress CIDR" configured when enabling Workload Management. As this is the endpoint developers are supposed to talk to, having VCD use it as well seems fine at first. But because Cloud Director is a management component that sits very close to NSX-T and vCenter Server, it is a better idea to use the same communication path as those tools.

With Cloud Director 10.2.2, the communication now uses the same path as the vCenter Server. It no longer goes through the load-balanced Pod Network. The communication is still resilient, as the management part of the SCP uses a virtual IP address that is moved to another node in case of a single-node failure. This virtual IP is typically the "Starting IP Address" entered on the Management Network configuration page when enabling Workload Management.

In my opinion, this path is way more convenient and solves some security considerations that might come up when having the Cloud Director communicate through the "public" part of vSphere with Tanzu.

Tanzu Certificate Issues

Because of the communication change explained above, you are no longer using the "Workload Platform Management" certificate, which is properly signed by the vCenter CA and can be replaced using the vSphere Client within Cluster > Configure > Namespaces > Certificates. Instead, you are using the so-called "API Server Management Endpoint", which presents a different certificate that Cloud Director does not trust. Unfortunately, when you use Cloud Director to import the certificate from the management URL, communication still fails with certificate issues. The same problem occurs when you just add the PVDC and trust the Kubernetes certificate that Cloud Director detects.

When adding a new Kubernetes-activated PVDC, you can easily identify the issue by checking the Kubernetes Policies. In VCD, navigate to Resources > Cloud Resources > Provider VDCs > Select a PVDC > Policies > Kubernetes. You should see the automatically created default policy containing all available Virtual Machine classes. If the list stays empty, Cloud Director was not able to load the classes from the Supervisor Cluster.

In /opt/vmware/vcloud-director/logs/vcloud-container-info.log, you see the following error messages:

2021-04-12 17:24:22,209 | WARN | Backend-activity-pool-198 | RefreshSupervisorClusterStatusActivity | [Activity Execution] Failed to load virtual machine classes from endpoint 10.99.2.10 - Handle: urn:uuid:07fb2731-aa60-4be8-8295-8d6d453b8712 | activity=(com.vmware.vcloud.vimproxy.internal.impl.PCEventProcessingActivity,urn:uuid:5fceaaf8-3300-3ecb-8fc5-d86b7bfbc110) activity=(com.vmware.ssdc.backend.RefreshSupervisorClusterStatusActivity,urn:uuid:07fb2731-aa60-4be8-8295-8d6d453b8712)

2021-04-12 17:24:22,077 | ERROR | Backend-activity-pool-198 | RefreshSupervisorClusterStatusActivity | [Activity Execution] Could not interact with supervisor cluster. Certificate is not set up for K8s cluster control plane: 10.99.2.10 - Handle: urn:uuid:07fb2731-aa60-4be8-8295-8d6d453b8712, Current Phase: RefreshSupervisorClusterStatusActivity$RefreshKubernetesStatusPhase | activity=(com.vmware.vcloud.vimproxy.internal.impl.PCEventProcessingActivity,urn:uuid:5fceaaf8-3300-3ecb-8fc5-d86b7bfbc110) activity=(com.vmware.ssdc.backend.RefreshSupervisorClusterStatusActivity,urn:uuid:07fb2731-aa60-4be8-8295-8d6d453b8712)

Caused by: org.springframework.web.client.ResourceAccessException: I/O error on POST request for "https://10.99.2.10:443/wcp/login": PKIX path building failed: sun.security.provider.certpath.SunCertPathBuilderException: unable to find valid certification path to requested target; nested exception is javax.net.ssl.SSLHandshakeException: PKIX path building failed: sun.security.provider.certpath.SunCertPathBuilderException: unable to find valid certification path to requested target

Caused by: javax.net.ssl.SSLHandshakeException: PKIX path building failed: sun.security.provider.certpath.SunCertPathBuilderException: unable to find valid certification path to requested target

While Cloud Director 10.2 with early vCenter versions had a similar problem that could be solved by trusting and/or replacing the "Workload Platform Management" certificate, this is another problem. To solve the issue, import the "tls_management_endpoint_certificate" to Cloud Director Trusted Certificates:

- Open vSphere Client

- Navigate to Menu > Development Center

- Select the API Explorer tab

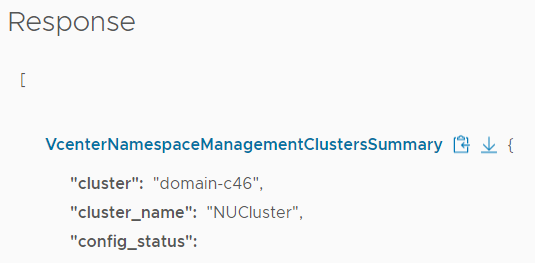

- Expand the namespace_management/clusters category

- Expand GET /api/vcenter/namespace-management/clusters

- Scroll down a bit and press EXECUTE

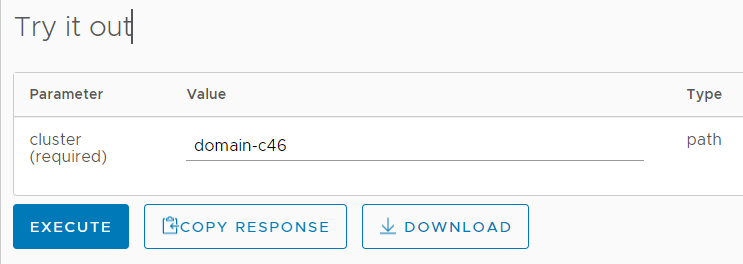

- In the response, search for your cluster and write down the Cluster ID (domain-c46 in this example)

- Expand GET /api/vcenter/namespace-management/clusters/{cluster}

- Enter the Cluster ID (domain-c46) that you noted earlier and press EXECUTE

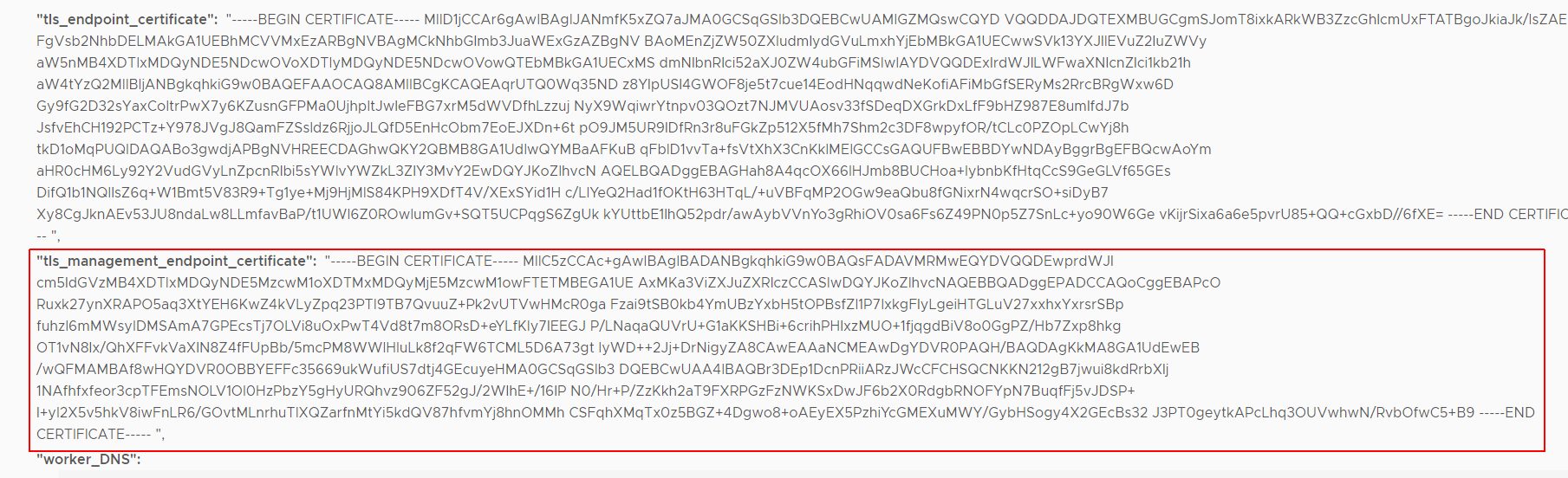

- In the response, search for tls_management_endpoint_certificate

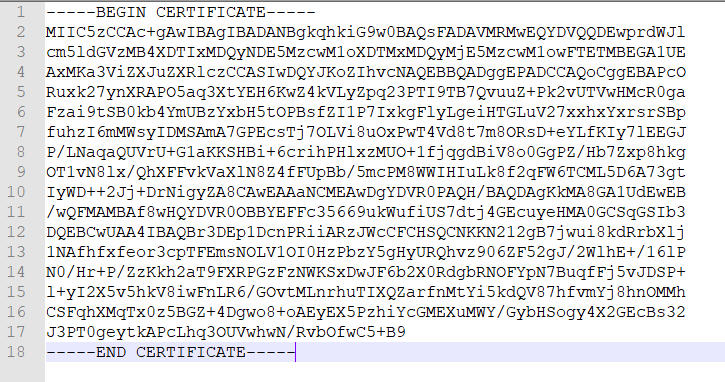

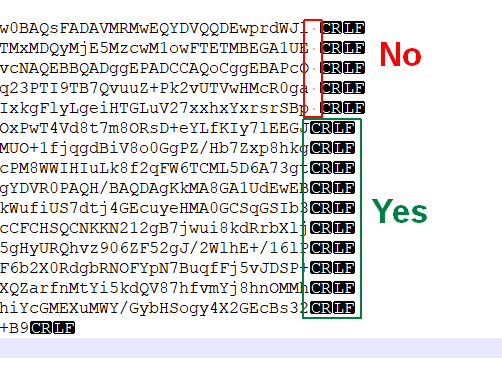

- Copy the content to a text file and bring it into proper PEM format: a `-----BEGIN CERTIFICATE-----` line, the Base64-encoded body on separate lines, and a closing `-----END CERTIFICATE-----` line

- Double-check that there are no blanks at the end of each line!

- Save the file with .pem extension

- Open VMware Cloud Director Administrator UI

- Navigate to Administration > Certificate Management > Trusted Certificates

- Press IMPORT

- Select the .pem file and press IMPORT

- Navigate to Resources > Infrastructure Resources > vCenter Service Instances

- Select the vCenter Server and press RECONNECT
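The copy-and-reformat part of this procedure can be scripted. The following is a minimal sketch, assuming the certificate value was pasted from the API Explorer response into a text file with its line breaks still encoded as literal `\n` sequences. The function name `format_pem` and the file names are hypothetical and not part of any VMware tooling.

```shell
# format_pem: hypothetical helper. Reads the raw certificate string on stdin
# and writes it in PEM layout on stdout.
# Assumption: line breaks in the pasted value are the two characters "\" and "n".
format_pem() {
  # 1. drop surrounding double quotes (and a trailing comma), if present
  # 2. turn every literal \n into a real line break
  # 3. strip trailing blanks and remove empty lines
  sed -e 's/^[[:space:]]*"//' -e 's/"[[:space:],]*$//' |
    awk '{ gsub(/\\n/, "\n"); print }' |
    sed -e 's/[[:space:]]*$//' -e '/^$/d'
}

# Example usage (file names are placeholders):
#   format_pem < raw-certificate.txt > supervisor-management.pem
```

If OpenSSL is available, `openssl x509 -in supervisor-management.pem -noout -subject -enddate` is a quick way to confirm that the resulting file parses as a certificate before importing it.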

The Cloud Director should now be able to communicate with the Kubernetes Control Plane. After a couple of minutes, you should see the Kubernetes Policy. You can also check /opt/vmware/vcloud-director/logs/vcloud-container-info.log on the Cloud Director Appliance for further certificate errors.
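To check the log without scrolling through it, a small filter can help. This is a sketch only: the function name `find_cert_errors` is hypothetical, and the search patterns are simply the messages from the log excerpt in this article, so other releases may log different text.

```shell
# find_cert_errors: hypothetical helper that prints certificate-related lines
# from a Cloud Director log file. The default path is the one named in this
# article; pass another path as the first argument to override it.
find_cert_errors() {
  log_file="${1:-/opt/vmware/vcloud-director/logs/vcloud-container-info.log}"
  # grep exits with status 1 when nothing matches, which callers can test for
  grep -E 'PKIX path building failed|Certificate is not set up for K8s cluster control plane|Failed to load virtual machine classes' "$log_file"
}

# Example usage on the Cloud Director appliance:
#   find_cert_errors || echo "no certificate errors found"
```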

Tanzu Kubernetes Cluster Tenant Network Isolation

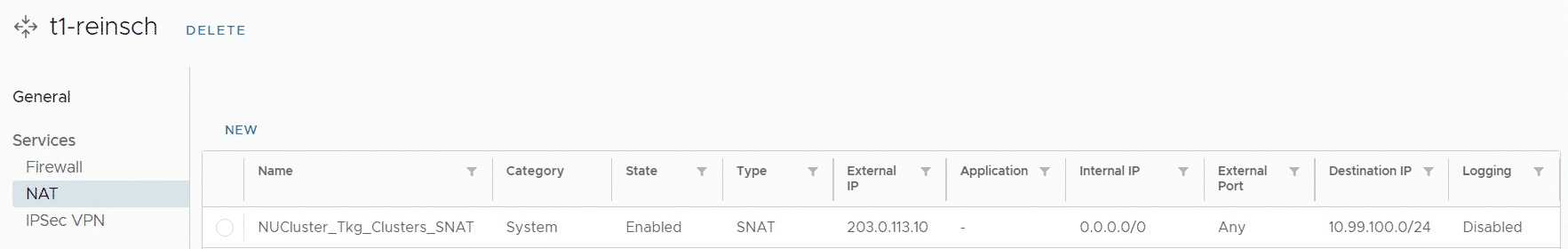

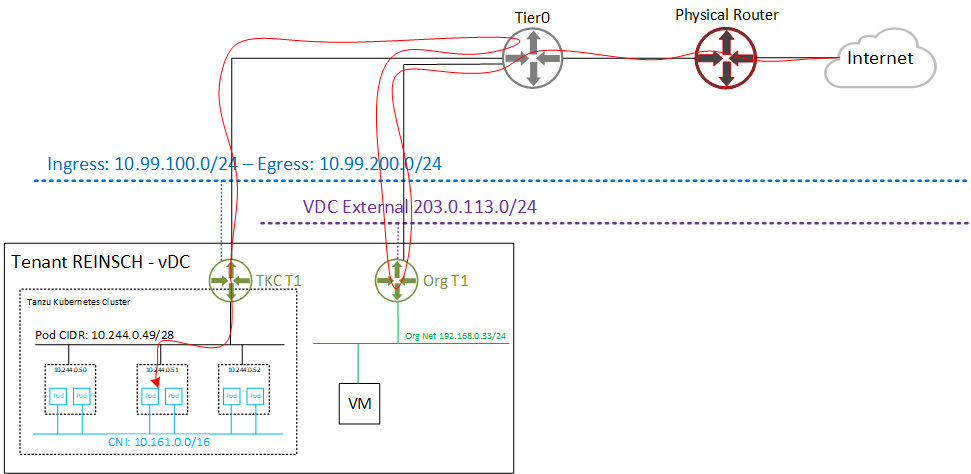

When a customer creates a Tanzu Kubernetes Cluster using VMware Cloud Director, the TKC Ingress is only reachable from the tenant's Org networks. This is achieved by automatically created NAT and firewall rules.

On the tenant's Edge Gateway, an SNAT rule is created automatically using the edge's primary public IP address. The NAT only applies to traffic destined for the TKC Ingress network (10.99.100.0/24 in this example). This allows all Org networks connected to the Edge Gateway to communicate with the TKC.

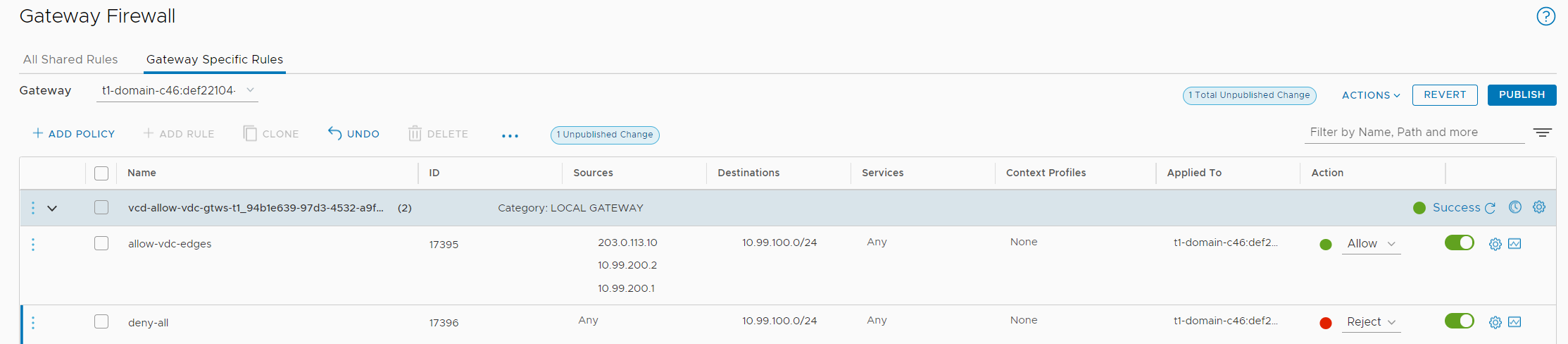

The customer's Kubernetes Namespace (which is created when assigning a Kubernetes Policy to the OVDC) has its own T1 Gateway. On this gateway, a firewall policy is created that only allows traffic from the tenant's public IP address (203.0.113.10). The two other source addresses are the Supervisor Control Plane Egress (10.99.200.1) and the TKC's own Egress (10.99.200.2).
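To make the interplay of the two rule sets easier to follow, the sketch below models them as plain shell functions. It is an illustration only and does not talk to NSX-T or Cloud Director. The function names are hypothetical, the addresses are the example values from this article, and 192.168.10.5 in the usage comment is an assumed Org network address.

```shell
# Example values from this article.
TKC_INGRESS_PREFIX="10.99.100."   # 10.99.100.0/24, matched as a string prefix
TENANT_PUBLIC_IP="203.0.113.10"   # primary public IP of the tenant's Edge Gateway

# snat_source: source address of a packet after it passed the Edge Gateway.
# Only traffic destined for the TKC Ingress network is translated; any other
# NAT rules on the edge are out of scope, so the source stays unchanged here.
snat_source() {
  src="$1"
  dst="$2"
  case "$dst" in
    "$TKC_INGRESS_PREFIX"*) echo "$TENANT_PUBLIC_IP" ;;
    *)                      echo "$src" ;;
  esac
}

# t1_allows: firewall policy on the namespace T1 gateway. Succeeds only for
# the tenant's public IP, the SCP Egress and the TKC's own Egress.
t1_allows() {
  case "$1" in
    "$TENANT_PUBLIC_IP"|10.99.200.1|10.99.200.2) return 0 ;;
    *) return 1 ;;
  esac
}

# Example usage: a VM at 192.168.10.5 (assumed) reaching a TKC service
#   t1_allows "$(snat_source 192.168.10.5 10.99.100.3)" && echo "allowed"
```

Traffic from another tenant would arrive with a different public address and would not match the allow list, which is what isolates the cluster.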

Publish Kubernetes Services using VCD Org Networks

If you want to make applications running in the TKC available to the Internet, you can simply use the Cloud Director tenant's public IP addresses to create a NAT to the TKC Ingress addresses.

Create a Webserver in the TKC using kubectl:

    # kubectl create deployment webserver --image nginx
    # kubectl expose deployment webserver --port=80 --type=LoadBalancer
    # kubectl get service webserver
    NAME        TYPE           CLUSTER-IP    EXTERNAL-IP   PORT(S)        AGE
    webserver   LoadBalancer   10.16.1.238   10.99.100.3   80:32319/TCP   17d

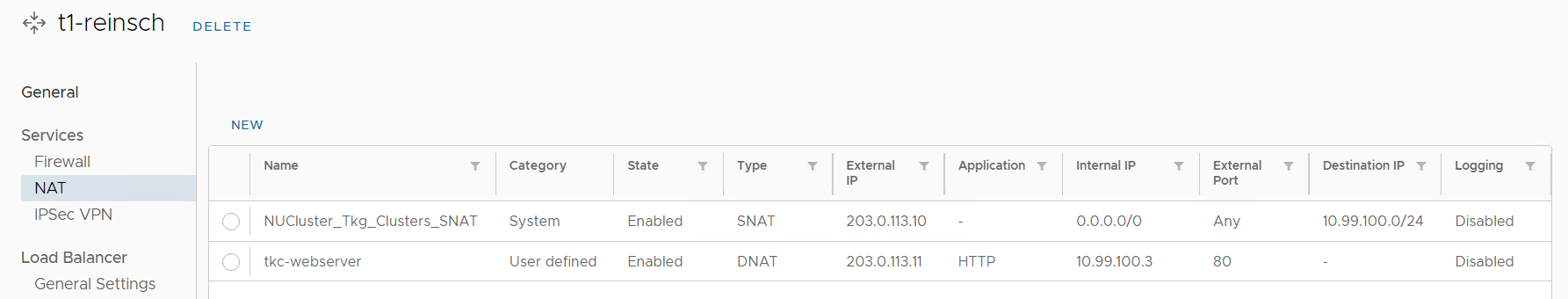

Note the service's External IP (10.99.100.3). This is an address from the "Ingress CIDR" configured when enabling Workload Management. On the tenant's Edge Gateway, create a DNAT rule that uses a public IP address from the tenant's pool as External IP and the TKC service's External IP as Internal IP.
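If you need the External IP in a script, for example to prepare the DNAT rule, it can be read from the kubectl output. The sketch below is a hypothetical helper named `external_ip` that parses the table format shown in this article; it assumes the default column layout with EXTERNAL-IP in the fourth column.

```shell
# external_ip: hypothetical helper. Reads `kubectl get service` table output
# on stdin and prints the EXTERNAL-IP column of the named service.
# Assumption: default column order NAME, TYPE, CLUSTER-IP, EXTERNAL-IP, ...
external_ip() {
  awk -v name="$1" 'NR > 1 && $1 == name { print $4 }'
}

# Example usage against a live cluster:
#   kubectl get service webserver | external_ip webserver
#
# kubectl can also print the address directly:
#   kubectl get service webserver -o jsonpath='{.status.loadBalancer.ingress[0].ip}'
```

The resulting address is the value to enter as Internal IP in the DNAT rule.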

The following diagram shows the traffic flow when someone accesses the Webserver from the Internet.

Hello,

Do you recommend separating production Tier1 and Tanzu Tier1 on separate Edge clusters? Or can it run on the same Cluster Edge?

You can (and should!) separate the two networks that are connected to the Supervisor Control Plane (Management Network and Tanzu Ingress/Egress Network, each in a separate VRF). If you are placing the management part in an NSX-T Segment, I recommend connecting it to a Tier-0 that is only connected to your management backend and not to the Tier-0 that VCD uses to connect to the Internet (or External Networks).

Given that, you should have two Edge Clusters (Two Tier-0 on the same Edge Cluster works, but is not best practice).

If you share the Tier-0, you don't have to place the Tier-1 on a dedicated Edge Cluster.

Sorry, I have a problem with VCD.

When I integrate Tanzu with VCD and use private IP addresses for Ingress and Egress,

I know I can DNAT my Ingress IP to the Edge external IP,

but my TKG cluster can't access the Internet. I also can't SNAT those IPs (10.0.x.x) in VCD, because the Tenant T1 and the TKG T1 are different.

So my pods can't reach the Internet with the cluster Egress IP (10.0.x.x), and no SNAT works for them.

What do I have to do?

thank you

VCD Managed T1 and WCP Managed T1 are completely different and you can't use your VCD public addresses to SNAT TKG to the Internet. Tanzu Basic is just "managed" from VCD, not "integrated" as you might expect.

VMware has a Reference Architecture for Tanzu: https://cloudsolutions.vmware.com/content/dam/digitalmarketing/microsites/en/images/cloud-solutions/pdfs/VCD_VC_Ref_Arch_TKGs.pdf

If you want a K8s Cluster in your Org Networks, CSE might be an option.

Hello !

I have already added a PVDC in vCloud Director. Now I have installed vSphere with Tanzu on this cluster. Can I activate the K8s functionality on it in vCloud Director, or is that only possible when adding a new PVDC?