This article recaps issues I ran into while integrating VMware Container Service Extension (CSE) 3.1 with VMware Cloud Director (VCD) 10.3 to allow the deployment of Tanzu Kubernetes Grid Clusters (TKGm).

If you are interested in an implementation guide, refer to "Deploy CSE 3.1 with TKGm Support in VCD 10.3" and "First Steps with TKGm Guest Clusters in VCD 10.3".

- CSE Log File Location

- DNS Issues during Photon Image Creation

- Disable rollbackOnFailure to troubleshoot TKGm deployment errors

- Template cookbook version 1.0.0 is incompatible with CSE running in non-legacy mode

- https://[IP-ADDRESS] should have a https scheme and match CSE server config file

- 403 Client Error: Forbidden for url: https://[VCD]/oauth/tenant/demo/register

- NodeCreationError: failure on creating nodes ['mstr-xxxx']

- Force Delete TKGm Clusters / Can't delete TKGm Cluster / Delete Stuck in DELETE:IN_PROGRESS

CSE Log File Location

Application Log Files

CSE Application Log files are in the .cse-log directory, which you can find in the installation directory.

- cse-install_[DATE]: Installation Log file you can consult when the cse install command returns errors. It contains Cloud Director and Template Build logs.

- cse-server-debug.log: This log contains important information about TKGm Guest deployments. When the deployment fails, check this log file for the reason.

- cse-server-info.log: General information about the CSE Service.

When CSE runs as a systemd service, you can view the service log with the following command:

# journalctl -u cse.service
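When a deployment fails, the relevant ERROR entries are easy to miss among the debug output. The following Python sketch filters cse-server-debug.log for ERROR lines and extracts the request ID. The line layout (timestamp | module:line - function | optional Request Id | LEVEL :: message) is an assumption inferred from the log excerpts quoted in this article, not a documented CSE format, so adjust the pattern if your lines look different. The function names are hypothetical.

```python
import re
from typing import Iterable, Iterator, List, NamedTuple

# Assumed line layout, inferred from the log excerpts in this article:
# "YY-MM-DD HH:MM:SS | module:line - function | [Request Id: <uuid> | ]LEVEL :: message"
LOG_LINE = re.compile(
    r"^(?P<ts>\d{2}-\d{2}-\d{2} \d{2}:\d{2}:\d{2}) \| "
    r"(?P<source>[^|]+?) \| "
    r"(?:Request Id: (?P<request_id>[0-9a-f-]+) \| )?"
    r"(?P<level>[A-Z]+) :: (?P<message>.*)$"
)


class LogEntry(NamedTuple):
    timestamp: str
    source: str
    request_id: str  # empty string when the line carries no request ID
    level: str
    message: str


def errors(lines: Iterable[str]) -> Iterator[LogEntry]:
    """Yield ERROR entries; traceback and other non-matching lines are skipped."""
    for line in lines:
        match = LOG_LINE.match(line.rstrip("\n"))
        if match and match.group("level") == "ERROR":
            yield LogEntry(
                timestamp=match.group("ts"),
                source=match.group("source").strip(),
                request_id=match.group("request_id") or "",
                level=match.group("level"),
                message=match.group("message"),
            )


def scan_errors(path: str) -> List[LogEntry]:
    """Read a CSE log file (e.g. .cse-log/cse-server-debug.log) and return its ERROR entries."""
    with open(path, encoding="utf-8", errors="replace") as handle:
        return list(errors(handle))
```

For example, scan_errors(".cse-log/cse-server-debug.log") returns the failed requests, and the request ID lets you find the matching traceback lines around each entry.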

DNS Issues during Photon Image Creation

The template creation (cse install) fails with the following error:

curl#6: Couldn't resolve host name Error: Failed to synchronize cache for repo 'VMware Photon Linux 2.0(x86_64) Updates' from 'https://packages.vmware.com/photon/2.0/photon_updates_2.0_x86_64'

The current catalog for CSE Native contains four Ubuntu images and one Photon OS image. The install script for the Ubuntu images sets the DNS server to 8.8.8.8 (hardcoded). This step is missing in the Photon OS image, which means that it uses the DNS server configured on the routed network (or provided by the DHCP server). If no DNS server is provided, the build process fails.

Solution: Make sure that the network used for the CSE tenant has a valid network configuration, including DHCP or an IP pool and a DNS server, and that it can access the Internet.
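Before re-running cse install, you can check the network from a test VM attached to the same org VDC network. The minimal Python sketch below is an assumption about how you might verify this, not part of CSE: it reads /etc/resolv.conf for nameserver entries and tries to resolve packages.vmware.com, the repository host from the error message. On systems that use systemd-resolved, /etc/resolv.conf may only list the local stub resolver, so the resolution test is the more meaningful of the two checks. The function names are hypothetical.

```python
import socket
from typing import List


def nameservers(resolv_conf_text: str) -> List[str]:
    """Return the addresses of 'nameserver' lines in resolv.conf-style text."""
    servers = []
    for line in resolv_conf_text.splitlines():
        fields = line.split()
        # Comment lines start with '#' or ';' and never match 'nameserver'.
        if len(fields) >= 2 and fields[0] == "nameserver":
            servers.append(fields[1])
    return servers


def can_resolve(host: str) -> bool:
    """True if the system resolver returns at least one address for host."""
    try:
        return len(socket.getaddrinfo(host, 443)) > 0
    except socket.gaierror:
        return False


def preflight(resolv_conf_path: str = "/etc/resolv.conf",
              repo_host: str = "packages.vmware.com") -> List[str]:
    """Return a list of DNS problems; an empty list means both checks passed."""
    problems = []
    try:
        with open(resolv_conf_path, encoding="utf-8") as handle:
            if not nameservers(handle.read()):
                problems.append(f"no nameserver entry in {resolv_conf_path}")
    except OSError as err:
        problems.append(f"cannot read {resolv_conf_path}: {err}")
    if not can_resolve(repo_host):
        problems.append(f"cannot resolve {repo_host}")
    return problems
```

Running print(preflight() or "DNS looks OK") on the test VM prints either the problems found or the confirmation.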

Disable rollbackOnFailure to troubleshoot TKGm deployment errors

When the creation of TKGm Guest Clusters fails, they are automatically deleted:

"Error creating cluster 'k8s-fgr'. Deleting cluster (rollback=True)"

Currently, there is no option in the UI to disable the automatic rollback on failure. If you want to access the failed virtual machine for troubleshooting, you have to disable the rollbackOnFailure option using an API call.

The option is spec.settings.rollbackOnFailure.

To create clusters with the option disabled, use the following API call:

POST {{ vcd_url }}/api/cse/3.0/cluster

{
  "apiVersion": "cse.vmware.com/v2.0",
  "kind": "TKGm",
  "metadata": {
    "additionalProperties": true,
    "orgName": "org-fgr",
    "virtualDataCenterName": "vdc-fgr",
    "name": "kube-fgr",
    "site": "https://vcloud.virten.lab"
  },
  "spec": {
    "additionalProperties": true,
    "topology": {
      "controlPlane": {
        "count": 1,
        "sizingClass": "System Default",
        "storageProfile": "StorageGold",
        "cpu": null,
        "memory": null
      },
      "workers": {
        "count": 2,
        "sizingClass": "System Default",
        "storageProfile": "StorageGold",
        "cpu": null,
        "memory": null
      },
      "nfs": {
        "count": 0,
        "sizingClass": null,
        "storageProfile": null
      }
    },
    "settings": {
      "ovdcNetwork": "192.168.0.0_24",
      "sshKey": "ssh-rsa AAAAB3NzaC1yc2EAAA[....] fgr",
      "rollbackOnFailure": false,
      "network": {
        "expose": true,
        "pods": {
          "cidrBlocks": [
            "100.96.0.0/11"
          ]
        },
        "services": {
          "cidrBlocks": [
            "100.64.0.0/13"
          ]
        }
      }
    },
    "distribution": {
      "templateName": "ubuntu-2004-kube-v1.20.5-vmware.2-tkg.1-6700972457122900687",
      "templateRevision": 1
    }
  }
}
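If you prefer to script the call instead of using a REST client, the following Python sketch (standard library only) forces spec.settings.rollbackOnFailure to false on a copy of the payload and builds the POST request. The bearer token, the Accept header with API version 36.0 (VCD 10.3) and the helper names are assumptions; obtain a token for the tenant user first and adjust the headers to your environment.

```python
import copy
import json
import urllib.request


def disable_rollback(cluster_spec: dict) -> dict:
    """Return a copy of the payload with spec.settings.rollbackOnFailure set to false."""
    payload = copy.deepcopy(cluster_spec)  # leave the caller's dict untouched
    payload.setdefault("spec", {}).setdefault("settings", {})["rollbackOnFailure"] = False
    return payload


def build_create_request(vcd_url: str, token: str, cluster_spec: dict) -> urllib.request.Request:
    """Build the POST {vcd_url}/api/cse/3.0/cluster request with rollback disabled."""
    body = json.dumps(disable_rollback(cluster_spec)).encode("utf-8")
    return urllib.request.Request(
        f"{vcd_url.rstrip('/')}/api/cse/3.0/cluster",
        data=body,
        method="POST",
        headers={
            "Content-Type": "application/json",
            "Accept": "application/json;version=36.0",  # assumption: VCD 10.3 API version
            "Authorization": f"Bearer {token}",  # assumption: bearer token obtained beforehand
        },
    )
```

Load the JSON payload from a file with json.load(), pass the resulting request to urllib.request.urlopen(), and, in a lab with self-signed certificates, supply an ssl.SSLContext that trusts your VCD certificate.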

Template cookbook version 1.0.0 is incompatible with CSE running in non-legacy mode

CSE installation fails with the following error:

Template cookbook version 1.0.0 is incompatible with CSE running in non-legacy mode

This problem happens when you are mixing old and new versions of the CSE config.yaml. When you are using CSE 3.1 in non-legacy mode (legacy_mode: false), which you definitely should do, you have to provide the v2 cookbook: https://raw.githubusercontent.com/vmware/container-service-extension-templates/master/template_v2.yaml

Cookbook in Legacy Mode: http://raw.githubusercontent.com/vmware/container-service-extension-templates/master/template.yaml
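A quick consistency check of config.yaml can catch this before running cse install. This Python sketch assumes the CSE 3.1 config.yaml keys service.legacy_mode and broker.remote_template_cookbook_url and works on the already parsed dictionary; the function name is hypothetical.

```python
from typing import Optional

V2_COOKBOOK = "template_v2.yaml"


def cookbook_problem(config: dict) -> Optional[str]:
    """Return a message if legacy_mode and the cookbook URL do not fit together, else None.

    Assumes the CSE 3.1 config.yaml keys service.legacy_mode and
    broker.remote_template_cookbook_url.
    """
    legacy = config.get("service", {}).get("legacy_mode")
    url = config.get("broker", {}).get("remote_template_cookbook_url", "")
    if legacy is None:
        return "service.legacy_mode is not set"
    uses_v2 = url.rstrip("/").endswith("/" + V2_COOKBOOK)
    if not legacy and not uses_v2:
        return f"non-legacy mode needs the {V2_COOKBOOK} cookbook, found: {url}"
    if legacy and uses_v2:
        return f"legacy mode needs the template.yaml cookbook, found: {url}"
    return None
```

With PyYAML installed, yaml.safe_load() turns a plain-text (not encrypted) config.yaml into the dictionary this function expects.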

https://[IP-ADDRESS] should have a https scheme and match CSE server config file

Deployment of a TKGm Guest Cluster fails and you can see the following error message in cse-server-debug.log:

21-10-18 12:57:42 | cluster_service_2_x_tkgm:300 - create_cluster | Request Id: fa5ba646-2711-4d75-95ad-430bae10e056 | ERROR :: Failed to create cluster k8s in org demo and VDC dc-demo
Traceback (most recent call last):
  File "/opt/cse/python/lib/python3.7/site-packages/container_service_extension/rde/backend/cluster_service_2_x_tkgm.py", line 228, in create_cluster
    f"'{vcd_site}' should have a https scheme"
container_service_extension.exception.exceptions.CseServerError: 'https://192.168.222.3' should have a https scheme and match CSE server config file.

This problem happens when you use IP addresses and hostnames inconsistently to access VCD. When VCD is configured with an FQDN as its external address, you have to use this FQDN in the CSE server config.yaml. Do not combine an FQDN as the external address in VCD with an IP address in config.yaml.
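The rule stated in the error message can be expressed as a small check. The following Python sketch is hypothetical and does not reproduce CSE's own code: it compares the site URL (for example metadata.site in the cluster request) with the host configured in config.yaml (assumed to be the vcd.host key) and requires the https scheme.

```python
from urllib.parse import urlparse


def site_matches_config(vcd_site: str, config_host: str) -> bool:
    """Hypothetical check: https scheme and the same host as config.yaml (vcd.host, assumed).

    Hostnames are compared case-insensitively because urlparse().hostname
    returns them lower-cased. Ports and paths are ignored in this sketch.
    """
    site = urlparse(vcd_site)
    if site.scheme != "https":
        return False
    # config.yaml may hold a bare host name, so add a scheme before parsing.
    config = urlparse(config_host if "://" in config_host else "https://" + config_host)
    return site.hostname is not None and site.hostname == config.hostname
```

With the values from the log above, site_matches_config("https://192.168.222.3", "vcloud.virten.lab") is False, which is exactly the IP/FQDN mismatch described here.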

403 Client Error: Forbidden for url: https://[VCD]/oauth/tenant/demo/register

Deployment of a TKGm Guest Cluster fails and you can see the following error message in cse-server-debug.log:

403 Client Error: Forbidden for url: https://vcloud.virten.lab/oauth/tenant/demo/register

This error is caused by a missing right for the Org Admin user who creates the TKGm cluster. Make sure that the ACCESS CONTROL > User > Manage > Manage user's own API token right has been published to the tenant in a rights bundle. See Step 4 in the CSE 3.1 Deployment Guide for instructions.
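To verify the rights bundle via API rather than in the UI, the following Python sketch builds a request for the rights contained in a rights bundle and checks a response page for the required right. The endpoint /cloudapi/1.0.0/rightsBundles/{id}/rights, the paging parameters, the API version header and the right name "Manage user's own API token" (taken from the UI path above) are assumptions to verify against the VCD 10.3 API reference. The sketch only inspects one page, so a large bundle may need several requests.

```python
import urllib.parse
import urllib.request

# Assumption: right name as shown in the UI path
# ACCESS CONTROL > User > Manage > Manage user's own API token.
REQUIRED_RIGHT = "Manage user's own API token"


def build_bundle_rights_request(vcd_url, token, bundle_id, page=1, page_size=100):
    """Build a GET request for one page of rights in a rights bundle (assumed endpoint)."""
    query = urllib.parse.urlencode({"page": page, "pageSize": page_size})
    url = f"{vcd_url.rstrip('/')}/cloudapi/1.0.0/rightsBundles/{bundle_id}/rights?{query}"
    return urllib.request.Request(url, method="GET", headers={
        "Accept": "application/json;version=36.0",  # assumed API version for VCD 10.3
        "Authorization": f"Bearer {token}",  # assumed: provider token obtained beforehand
    })


def missing_rights(rights_page, required=(REQUIRED_RIGHT,)):
    """Return the required right names absent from a page shaped like {"values": [{"name": ...}]}."""
    names = {item.get("name") for item in rights_page.get("values", [])}
    return [name for name in required if name not in names]
```

Decode the response body with json.loads() and pass it to missing_rights(); an empty list means the right is part of that bundle.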

NodeCreationError: failure on creating nodes ['mstr-xxxx']

Deployment of a TKGm Guest Cluster fails and you can see the following error message in cse-server-debug.log:

During handling of the above exception, another exception occurred:
Traceback (most recent call last):
  File "/opt/cse/python/lib/python3.7/site-packages/container_service_extension/rde/backend/cluster_service_2_x_tkgm.py", line 855, in _create_cluster_async
    refresh_token=refresh_token
  File "/opt/cse/python/lib/python3.7/site-packages/container_service_extension/rde/backend/cluster_service_2_x_tkgm.py", line 2171, in _add_control_plane_nodes
    raise exceptions.NodeCreationError(node_list, str(err))
container_service_extension.exception.exceptions.NodeCreationError: failure on creating nodes ['mstr-xm1g'] Error:None
21-11-17 09:45:38 | cluster_service_2_x_tkgm:954 - _create_cluster_async | ERROR :: Error creating cluster 'k8s-fgr'
Traceback (most recent call last):
  File "/opt/cse/python/lib/python3.7/site-packages/container_service_extension/rde/backend/cluster_service_2_x_tkgm.py", line 2151, in _add_control_plane_nodes
    logger=LOGGER
  File "/opt/cse/python/lib/python3.7/site-packages/container_service_extension/common/utils/pyvcloud_utils.py", line 768, in wait_for_completion_of_post_customization_procedure
    raise exceptions.ScriptExecutionError
container_service_extension.exception.exceptions.ScriptExecutionError: None

This issue is not related to CSE. During the deployment, the master node has to access its own kube-apiserver via its public IP address. This does not work when the SNAT rule in NSX-T is configured with 0.0.0.0/0 as the source network. With 0.0.0.0/0 as the SNAT source, this traffic is not source-NATed, which you can see with tcpdump (the packet arrives with the private address instead of the SNAT address):

11:24:01.246339 IP mstr-etl6.53834 > 203.0.113.11.6443: Flags [S], seq 1256437043, win 64240, options [mss 1460,sackOK,TS val 2118656026 ecr 0,nop,wscale 7], length 0
11:24:01.246485 IP mstr-etl6.53834 > mstr-etl6.6443: Flags [S], seq 1256437043, win 64240, options [mss 1460,sackOK,TS val 2118656026 ecr 0,nop,wscale 7], length 0

Change the SNAT source from 0.0.0.0/0 to the actual internal network (e.g. 192.168.0.0/24). The packets then arrive with the SNAT address:

11:26:28.566154 IP mstr-etl6.53898 > 203.0.113.11.6443: Flags [.], ack 267600, win 1997, options [nop,nop,TS val 2118803346 ecr 1922403998], length 0
11:26:28.566170 IP mstr-etl6.53898 > 203.0.113.11.6443: Flags [.], ack 268169, win 1993, options [nop,nop,TS val 2118803346 ecr 1922403998], length 0
11:26:28.566241 IP 203.0.113.10.17029 > mstr-etl6.6443: Flags [.], ack 267600, win 1997, options [nop,nop,TS val 2118803346 ecr 1922403998], length 0
11:26:28.566242 IP 203.0.113.10.17029 > mstr-etl6.6443: Flags [.], ack 268169, win 1993, options [nop,nop,TS val 2118803346 ecr 1922403998], length 0
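The SNAT rule from this section can be captured in a small Python check using the standard ipaddress module. It is a sketch: the SNAT source and the org VDC network are inputs you read from your NSX-T/Cloud Director edge gateway configuration, and the function name is hypothetical.

```python
import ipaddress
from typing import List


def snat_source_problems(snat_source: str, org_network: str) -> List[str]:
    """Return problems with an SNAT source network for the given org VDC network (IPv4 sketch)."""
    source = ipaddress.ip_network(snat_source, strict=False)
    network = ipaddress.ip_network(org_network, strict=False)
    problems = []
    if source.prefixlen == 0:
        # 0.0.0.0/0 is the catch-all source that left the master node's traffic
        # to its own public API address without source NAT in the case above.
        problems.append(f"SNAT source {source} is a catch-all; use {network} instead")
    elif not network.subnet_of(source):
        problems.append(f"SNAT source {source} does not cover org network {network}")
    return problems
```

With the values from this section, snat_source_problems("0.0.0.0/0", "192.168.0.0/24") reports one problem, while "192.168.0.0/24" as the source reports none.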

Force Delete TKGm Clusters / Can't delete TKGm Cluster / Delete Stuck in DELETE:IN_PROGRESS

If you can't delete a TKGm cluster, you can force the deletion with the following API calls:

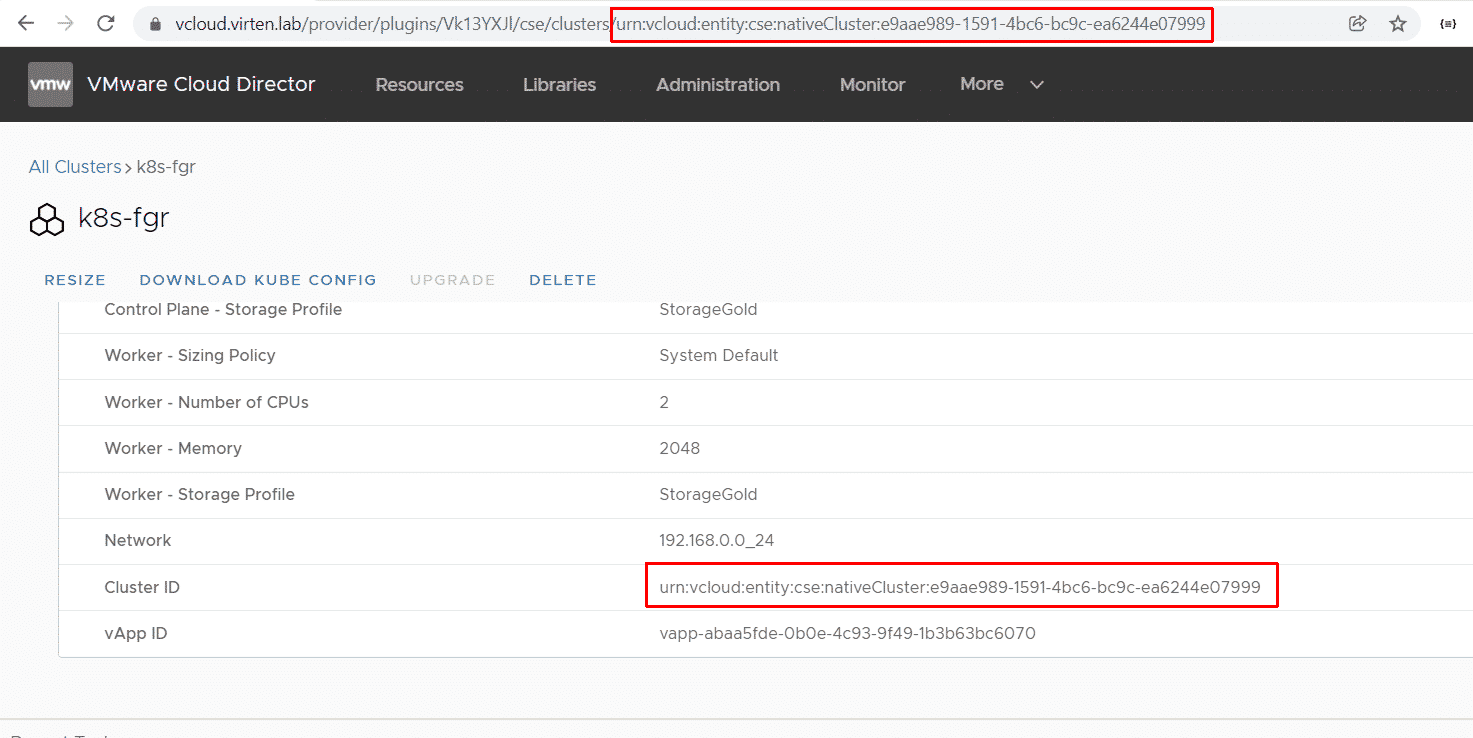

POST {{ vcd_url }}/cloudapi/1.0.0/entities/[Cluster-ID]/resolve

DELETE {{ vcd_url }}/cloudapi/1.0.0/entities/[Cluster-ID]?invokeHooks=false
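The two calls can also be scripted. This Python sketch builds both requests with urllib from the standard library; the bearer token and the Accept header with API version 36.0 are assumptions for VCD 10.3, and the helper names are hypothetical. send_in_order() stops at the first failure because urlopen() raises HTTPError for error responses, so the DELETE is only sent after the resolve call succeeded.

```python
import urllib.request
from typing import List


def force_delete_requests(vcd_url: str, token: str, cluster_id: str) -> List[urllib.request.Request]:
    """Build the resolve and delete requests for a TKGm cluster entity."""
    base = f"{vcd_url.rstrip('/')}/cloudapi/1.0.0/entities/{cluster_id}"
    headers = {
        "Accept": "application/json;version=36.0",  # assumed API version (VCD 10.3)
        "Authorization": f"Bearer {token}",  # assumed: token obtained beforehand
    }
    resolve = urllib.request.Request(f"{base}/resolve", method="POST", headers=headers)
    delete = urllib.request.Request(f"{base}?invokeHooks=false", method="DELETE", headers=headers)
    return [resolve, delete]


def send_in_order(requests: List[urllib.request.Request], context=None) -> None:
    """Send requests one by one; urlopen raises HTTPError on an error status, stopping the loop."""
    for request in requests:
        with urllib.request.urlopen(request, context=context) as response:
            print(request.get_method(), request.full_url, response.status)
```

The optional context parameter accepts an ssl.SSLContext, which is useful when VCD uses a self-signed certificate.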

When the DELETE call was successful, you can delete the vApp that CSE created for the cluster.

The cluster ID (e.g. urn:vcloud:entity:cse:nativeCluster:f0f1e578-7878-44b3-a34d-42bfca31759b) can be copied from the Container Extension plugin in Cloud Director.