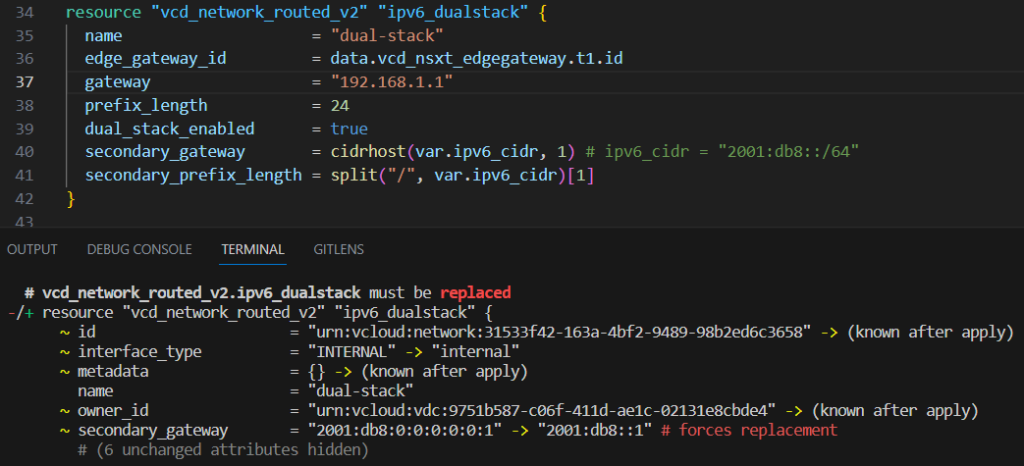

Deploy High Available Firewall Appliances in VMware Cloud Director

When customers deploy their services to a cloud datacenter delivered with VMware Cloud Director, they quite often want to use their own virtual firewall appliance rather than the Edge and Distributed Firewall built into the NSX infrastructure. Many administrators prefer their well-known Check Point, Fortinet, or pfSense appliances for seamless configuration management. While using standalone virtual firewall appliances is not an issue in general, there are some caveats with HA deployments, which can be addressed with features implemented in recent versions of VMware Cloud Director.

This article explains how to deploy highly available firewall appliances in VMware Cloud Director 10.5.

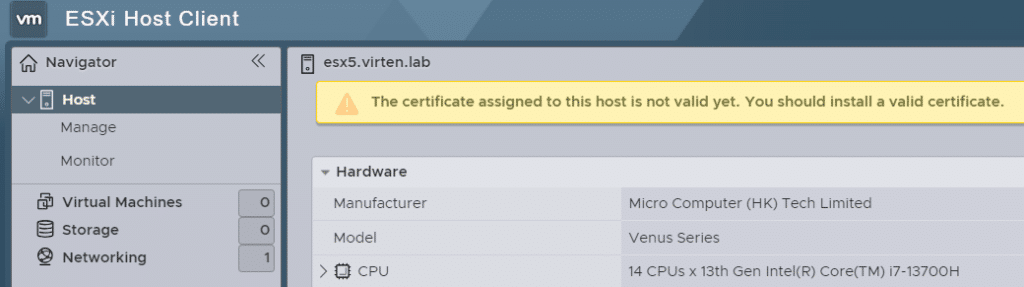

In the VMware Homelab Community, the Intel NUC (Next Unit of Computing) has been a prominent player for several years. NUCs are small, silent, transportable, and have very low power consumption, making them a great server for your home lab. With the recently announced deprecation of the NUC platform, many homelabbers need an affordable and reliable alternative for their VMware Homelab.
