11th Gen Intel NUC - Which is the best candidate to run ESXi?

Intel has finally announced its 11th Generation NUCs. For the first time, all three product lines have been announced at the same time. NUCs are very popular in homelabs and for running VMware ESXi: they are small, silent, portable, and have very low power consumption.

- Enthusiast (Phantom Canyon) - Successor to the 8th Gen Hades Canyon

- Pro (Tiger Canyon) - Successor to the 8th Gen Provo Canyon

- Performance (Panther Canyon) - Successor to the 10th Gen Frost Canyon

In this article, I'm going to take a look at the three product lines and how they compare to each other and to previous NUCs.

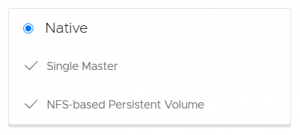

With the release of Cloud Director 10.2, Container Service Extension (CSE) 3.0 has also been released. With CSE 3.0, you can extend your cloud offering by providing Kubernetes as a Service. Customers can create and manage their own K8s clusters directly in the VMware Cloud Director portal.

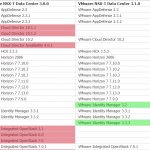

I've published a new tool that allows you to quickly compare the list of supported product versions for VMware products. The tool's goal is to make the upgrade process easier. You no longer have to manually check the Interop Matrix for compatible product versions.
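
One way to approach such a comparison is a set intersection: for each installed product version, take the set of versions it is listed as compatible with, and keep only the versions that every installed product agrees on. The following is a minimal Python sketch under stated assumptions. `EXAMPLE_MATRIX` is hypothetical placeholder data (`ProductA`, `ProductB` and all version numbers are made up, not taken from the Interop Matrix), and `common_supported` is an illustrative helper, not the tool's actual implementation.

```python
from typing import Dict, List, Set, Tuple

# Hypothetical placeholder data, NOT real interoperability information:
# each key is an installed product version, each value is the set of
# target versions it is listed as compatible with.
EXAMPLE_MATRIX: Dict[str, Set[str]] = {
    "ProductA 1.0": {"6.7", "7.0"},
    "ProductA 2.0": {"7.0", "8.0", "10.0"},
    "ProductB 3.1": {"6.7", "7.0", "8.0", "10.0"},
}


def version_key(version: str) -> Tuple[int, ...]:
    """Compare numeric components so that "10.0" sorts after "8.0"."""
    return tuple(int(part) for part in version.split("."))


def common_supported(matrix: Dict[str, Set[str]], installed: List[str]) -> List[str]:
    """Return the target versions supported by every installed product version."""
    if not installed:
        return []
    result = set(matrix[installed[0]])  # copy so the matrix itself is not mutated
    for product in installed[1:]:
        result &= matrix[product]  # keep only versions this product also supports
    return sorted(result, key=version_key)


print(common_supported(EXAMPLE_MATRIX, ["ProductA 2.0", "ProductB 3.1"]))
# prints ['7.0', '8.0', '10.0']
```

Sorting with a numeric key rather than plain string order matters here: a lexicographic sort would place "10.0" before "6.7".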

When you are running an ESXi-based homelab, you might have considered using vSAN as your storage technology of choice. Hyperconverged storage is a growing alternative to SAN-based systems in virtual environments, so using it at home helps you build skills with that technology.

This is a list of all performance metrics available in vSphere vCenter Server 7.0. Performance counters can be viewed for Virtual Machines, Hosts, Clusters, Resource Pools, and other objects by opening Monitor > Performance in the vSphere Client.
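
The counter list can also be pulled programmatically. This is a minimal sketch assuming the pyVmomi Python SDK is installed and a vCenter Server is reachable: it reads the `perfCounter` property of vCenter's PerformanceManager and returns each counter in the dotted `group.name.rollup` form (for example `cpu.usage.average`). The helper names `format_counter`, `counter_names` and `list_counters` are hypothetical, and the unverified SSL context is only meant for a lab with self-signed certificates.

```python
import ssl
from typing import Iterable, List


def format_counter(group: str, name: str, rollup: str) -> str:
    """Build the dotted counter name, e.g. cpu.usage.average."""
    return f"{group}.{name}.{rollup}"


def counter_names(counters: Iterable) -> List[str]:
    """Format pyVmomi PerfCounterInfo objects and return the names sorted."""
    return sorted(
        format_counter(c.groupInfo.key, c.nameInfo.key, str(c.rollupType))
        for c in counters
    )


def list_counters(host: str, user: str, pwd: str) -> List[str]:
    """Connect to vCenter and return every performance counter name (requires pyVmomi)."""
    # Imported here so the helpers above can be used without pyVmomi installed.
    from pyVim.connect import SmartConnect, Disconnect

    # Lab use only: skips certificate verification for self-signed certificates.
    ctx = ssl._create_unverified_context()
    si = SmartConnect(host=host, user=user, pwd=pwd, sslContext=ctx)
    try:
        perf_manager = si.RetrieveContent().perfManager
        return counter_names(perf_manager.perfCounter)
    finally:
        Disconnect(si)
```

Usage, with a placeholder hostname and credentials: `for name in list_counters("vcenter.example.local", "administrator@vsphere.local", "your-password"): print(name)`.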