How to configure Multiple TLS Certificates with SNI in NSX-T Load Balancer

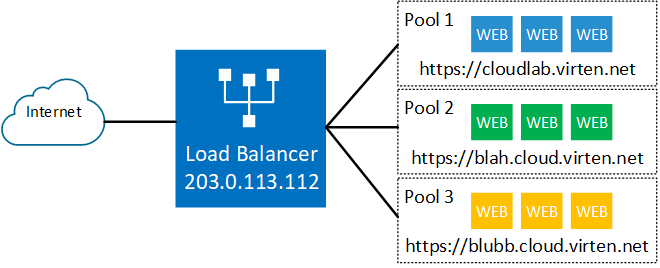

To serve multiple HTTPS websites from the same public IP address, you need the SNI extension. Server Name Indication (SNI) is an extension to the Transport Layer Security (TLS) protocol that lets a client indicate, at the start of the handshake, which hostname it wants to connect to. The server can then present the certificate that matches that hostname, so multiple secure (HTTPS) websites can be served from the same IP address.
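To make the mechanism concrete, here is a minimal sketch of server-side certificate selection using Python's standard `ssl` module. It is not how NSX-T implements SNI; it only illustrates the general idea. The hostnames are hypothetical, and the contexts are left without certificates, where a real server would call `load_cert_chain()` on each one.

```python
import ssl


def build_sni_callback(contexts_by_host):
    """Return a callback that swaps in the SSLContext matching the SNI hostname."""

    def sni_callback(ssl_socket, server_name, default_context):
        # server_name is None when the client sent no SNI extension.
        chosen = contexts_by_host.get(server_name)
        if chosen is not None:
            # Switching the context makes the handshake continue with
            # the certificate configured for that hostname.
            ssl_socket.context = chosen
        # Returning None tells the TLS layer to proceed with the handshake.
        return None

    return sni_callback


# Hypothetical hostnames. In real use, each context would load its own
# certificate and key with load_cert_chain(certfile, keyfile).
contexts = {
    "site-a.example.com": ssl.SSLContext(ssl.PROTOCOL_TLS_SERVER),
    "site-b.example.com": ssl.SSLContext(ssl.PROTOCOL_TLS_SERVER),
}

# The default context is used for the listening socket and for clients
# whose hostname is unknown or missing.
default_ctx = ssl.SSLContext(ssl.PROTOCOL_TLS_SERVER)
default_ctx.sni_callback = build_sni_callback(contexts)
```

If the client sends no SNI extension, or names a host that is not in the map, the callback leaves the default context in place, so the default certificate is presented.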

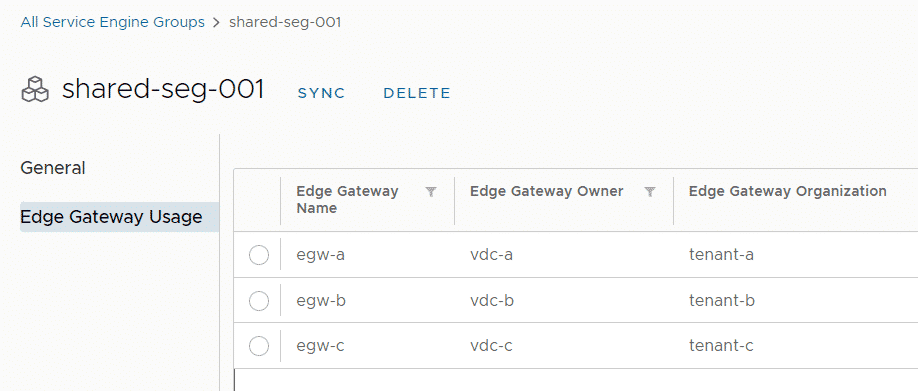

The NSX-T Load Balancer supports SNI certificates on a single Virtual Server (IP address) with different Server Pools in the backend. This article explains how to configure SNI-based load balancing for three HTTPS websites on a single IP address with the NSX-T 3.1 Load Balancer.


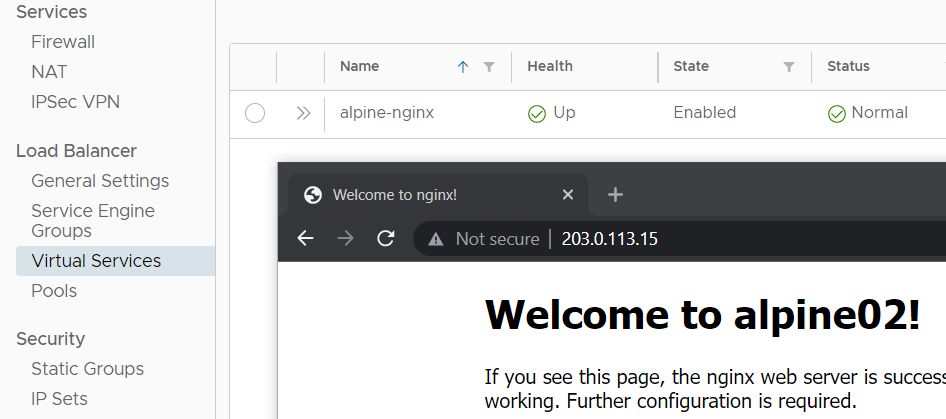

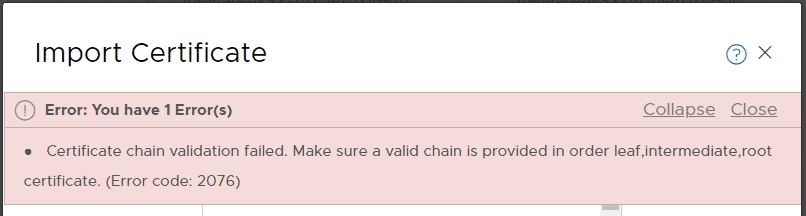

With the NSX Advanced Load Balancer integration in Cloud Director 10.2 or later, you can enable SSL offloading to secure your customers' websites. This article explains how to request a Let's Encrypt certificate, import it into VMware Cloud Director, and enable SSL offloading in NSX-ALB. This allows tenants to publish websites securely.
