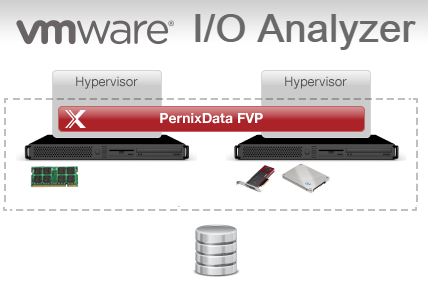

Evaluate PernixData FVP with replayed Production IO Traces

Using synthetic workloads to test-drive PernixData FVP can produce odd findings. The most meaningful way to test FVP is to deploy the software to production in monitor mode, let Architect do its magic, and enable acceleration once you have reviewed its recommendations after a couple of days. Although FVP can be deployed, test-driven, and removed without any downtime to virtual machines, this approach might not fit every environment.

If you have separate DEV/QA environments with sophisticated load generators, the solution is obvious. If you don't, there is another option: record production I/O traces and replay them on an FVP-accelerated test platform.
