Client VPN with WireGuard in VMware Cloud Director backed by NSX-T

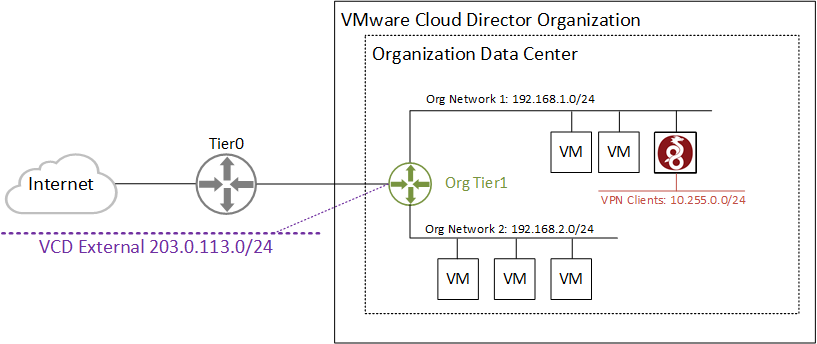

When you run an NSX-T backed VMware Cloud Director, your customers might require a remote access VPN solution. While Client VPN was available with NSX-v, it is missing entirely in NSX-T. This article explains how a customer can implement a Client VPN solution based on WireGuard. The implementation is fully in the hands of the customer and does not require any VCD modification or Service Provider interaction.

WireGuard is not part of VMware Cloud Director but can be installed easily on a virtual machine in the tenant's network. The following diagram shows the environment that is used throughout the article.

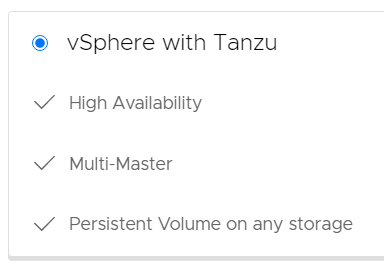

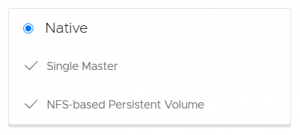

Container Service Extension (CSE) 3.0 was released together with Cloud Director 10.2. With CSE 3.0, you can extend your cloud offering by providing Kubernetes as a Service. Customers can create and manage their own K8s clusters directly in the VMware Cloud Director portal.

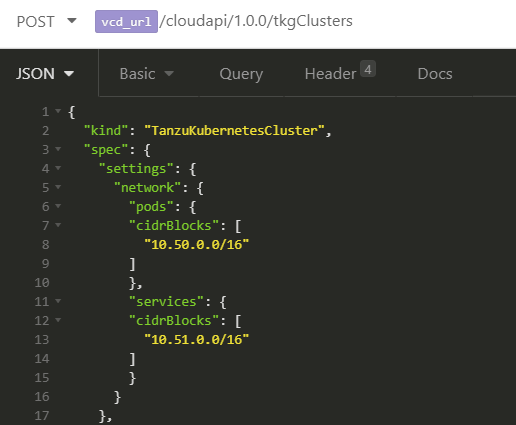

A major problem when deploying "vSphere with Tanzu" clusters in VMware Cloud Director 10.2 is that the defaults for TKG clusters overlap with the defaults for the Supervisor Cluster that are configured in vCenter Server when Workload Management is enabled.
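
To illustrate what such an overlap looks like, here is a minimal sketch that compares two sets of CIDR ranges using Python's standard `ipaddress` module. The CIDR values and the `find_overlaps` helper are hypothetical and chosen only for illustration; check the ranges that are actually configured in your own environment.

```python
import ipaddress


def find_overlaps(supervisor, tkg):
    """Return (supervisor_name, tkg_name) pairs whose CIDR ranges overlap."""
    clashes = []
    for s_name, s_cidr in supervisor.items():
        s_net = ipaddress.ip_network(s_cidr)
        for t_name, t_cidr in tkg.items():
            if s_net.overlaps(ipaddress.ip_network(t_cidr)):
                clashes.append((s_name, t_name))
    return clashes


# Hypothetical example values, not taken from any product documentation.
supervisor = {"services": "10.96.0.0/24"}
tkg = {"pods": "192.168.0.0/16", "services": "10.96.0.0/12"}

print(find_overlaps(supervisor, tkg))
```

With these example values, the Supervisor services range falls inside the TKG services range, so that pair is reported as a clash. Choosing non-overlapping ranges for one of the two sides removes it.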