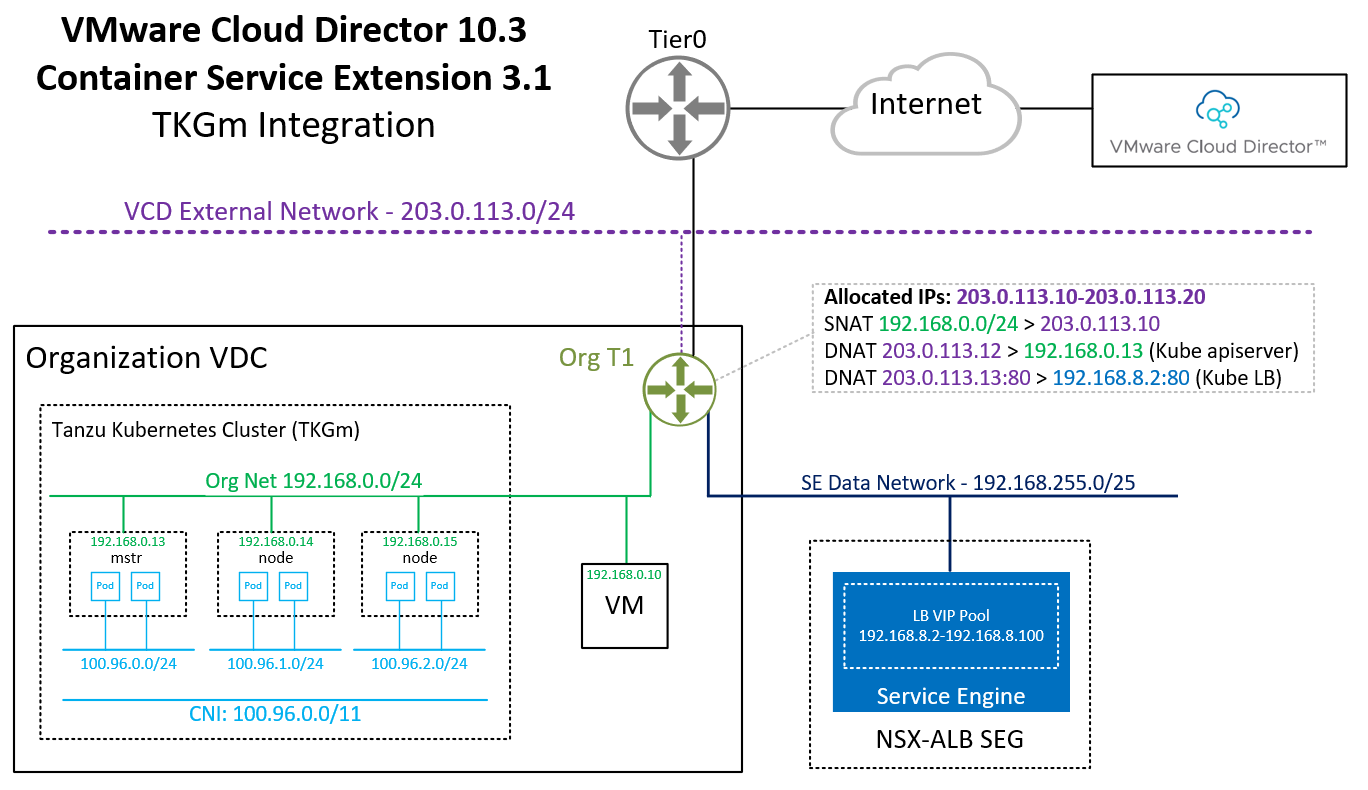

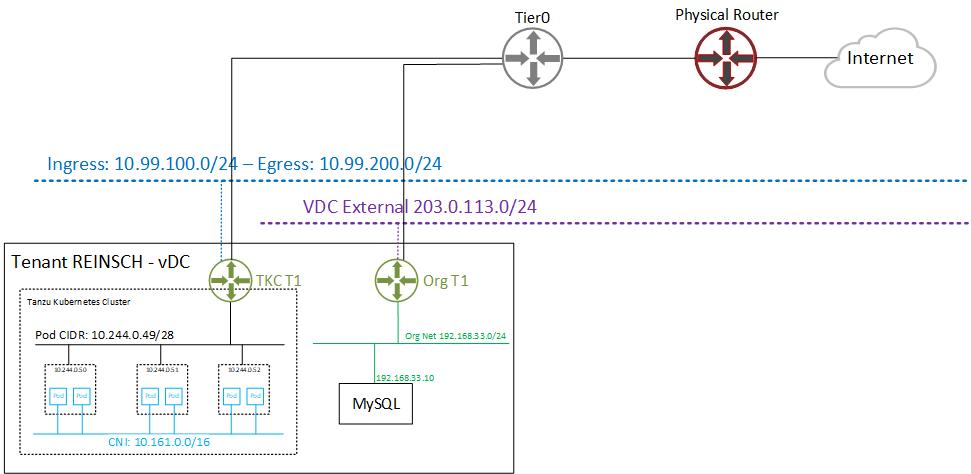

Many applications running on container platforms still require external resources like databases. In the last article, I explained how to access TKC resources from VMware Cloud Director Tenant Org Networks. In this article, I'm going to explain how to access a database running on a Virtual Machine in VMware Cloud Director from a Tanzu Kubernetes Cluster that was deployed using the latest Container Service Extension (CSE) in VMware Cloud Director 10.2.

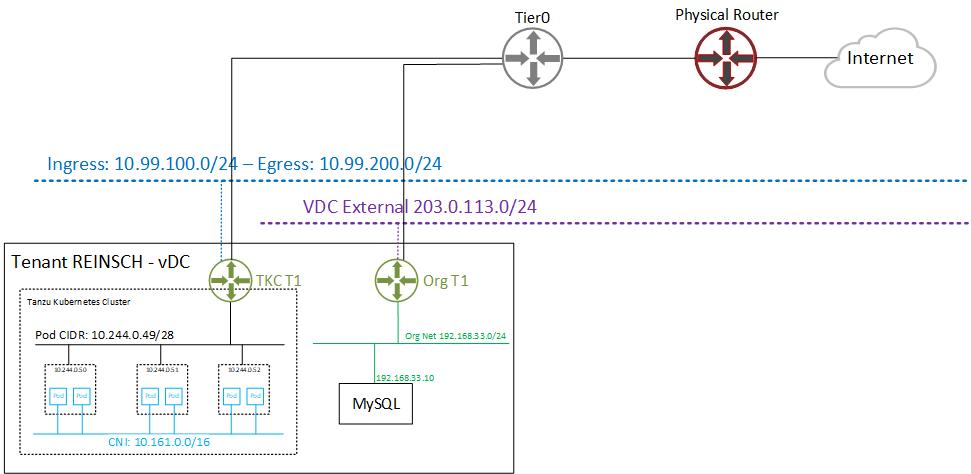

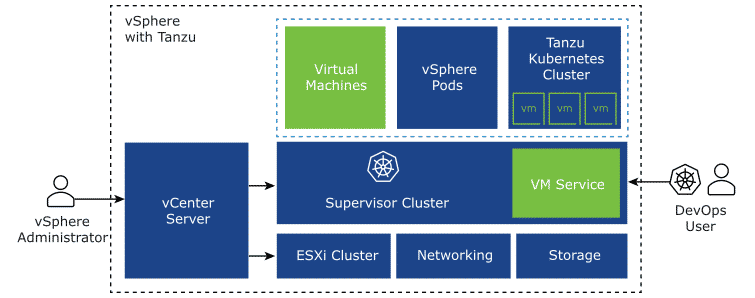

If you are not familiar with the vSphere with Tanzu integration in VMware Cloud Director, the following diagram shows the communication. I have a single Org VDC with a MySQL Server running in an Org Network. When traffic leaves the Org Network, the private IP address is translated (SNAT) to a public IP from the VCD external network (203.0.113.0/24). The customer also has a Tanzu Kubernetes Cluster (TKC) deployed using VMware Cloud Director. This creates another Tier-1 Gateway, which is connected to the same upstream Tier-0 Router. When the TKC communicates, its traffic is also translated on the Tier-1 Gateway, using an address from the Egress Pool (10.99.200.0/24).

So, the two networks cannot communicate with each other directly. As of VMware Cloud Director 10.2.2, communication is only implemented in one direction: Org Network -> TKC. This is done by automatically configuring a SNAT on the Org T1 to its primary public address. With this address, the Org Network can reach all Kubernetes services that are exposed using an address from the Ingress Pool, which is the default when exposing services in TKC.
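To make the address translation easier to follow, here is a minimal Python sketch that labels a source address by the pool it belongs to. The two CIDR ranges are the ones from the setup described above; the function name `classify_source` and the returned labels are made up for illustration and are not part of any VMware tooling.

```python
import ipaddress

# Ranges taken from the example setup described above.
EXTERNAL_NETWORK = ipaddress.ip_network("203.0.113.0/24")  # VCD external network (Org Network SNAT)
EGRESS_POOL = ipaddress.ip_network("10.99.200.0/24")       # TKC Egress Pool (Tier-1 SNAT)


def classify_source(address: str) -> str:
    """Return a hypothetical label describing where translated traffic originates."""
    ip = ipaddress.ip_address(address)
    if ip in EXTERNAL_NETWORK:
        return "org-network-snat"
    if ip in EGRESS_POOL:
        return "tkc-egress"
    return "unknown"


if __name__ == "__main__":
    for candidate in ("203.0.113.10", "10.99.200.5", "192.168.0.20"):
        print(candidate, "->", classify_source(candidate))
```

A check like this can help when reading firewall or database connection logs, because neither side ever sees the other's private address, only the translated one.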


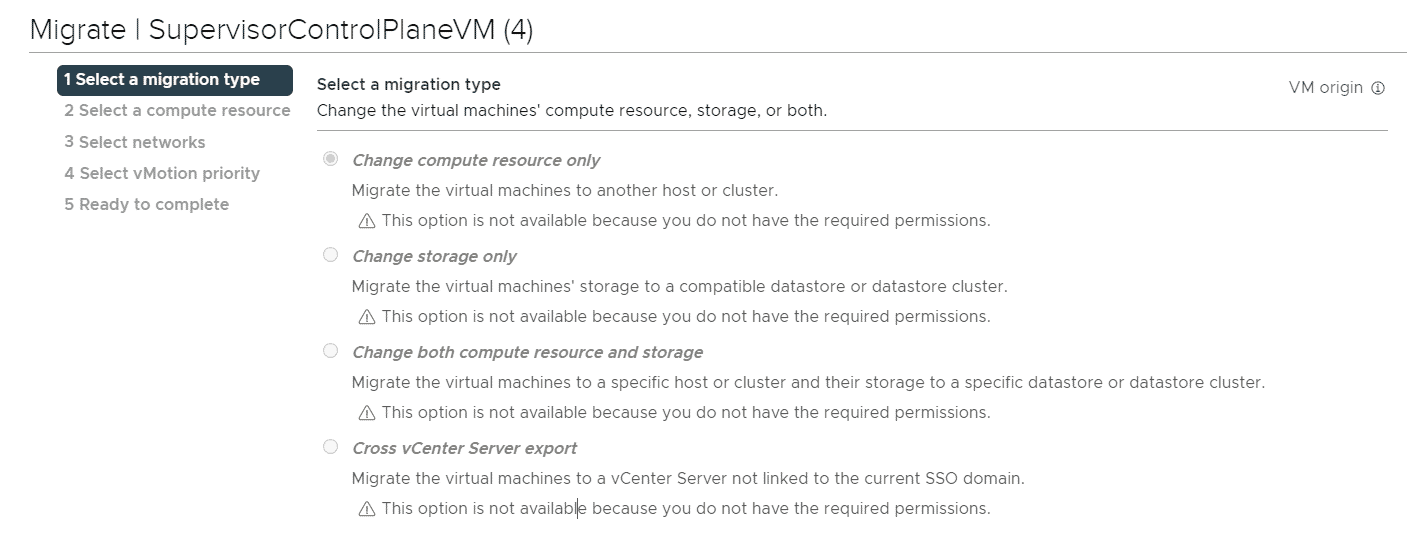

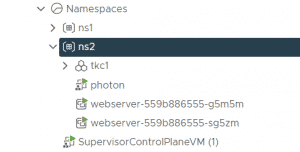

This article explains how you can create Virtual Machines in Kubernetes Namespaces in vSphere with Tanzu. The deployment of Virtual Machines in Kubernetes namespaces using kubectl was shown in demonstrations but is currently (as of vSphere 7.0 U2) not supported. Creating Virtual Machines by leveraging the vmoperator is only possible with third-party integrations like TKG.
