Since VMware has introduced vSphere with Tanzu support in VMware Cloud Director 10.2, I'm struggling to find a proper way to implement a solution that allows customers bidirectional communication between Virtual Machines and Pods. In earlier Kubernetes implementations using Container Service Extension (CSE) "Native Cluster", workers and the control plane were directly placed in Organization networks. Communication between Pods and Virtual Machines was quite easy, even if they were placed in different subnets because they could be routed through the Tier1 Gateway.

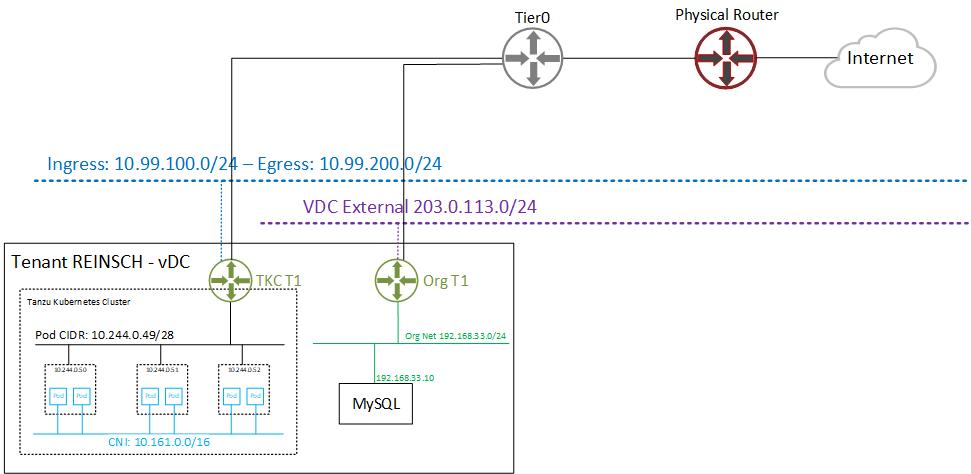

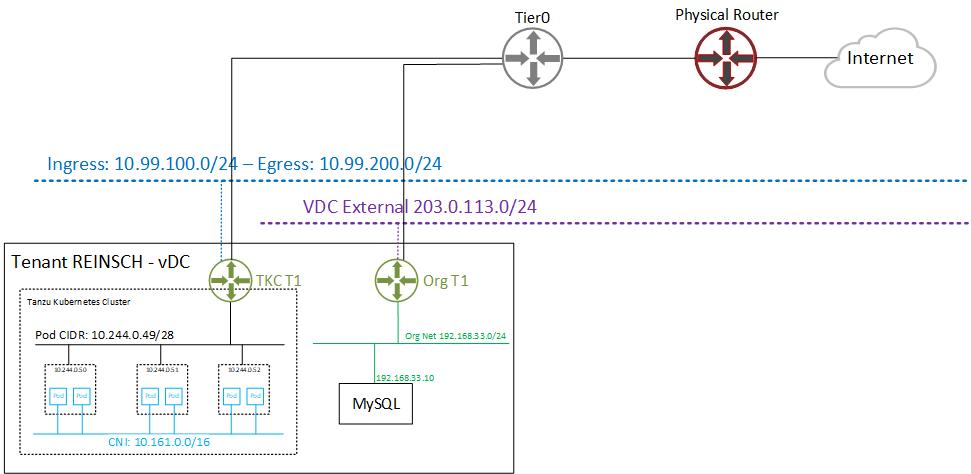

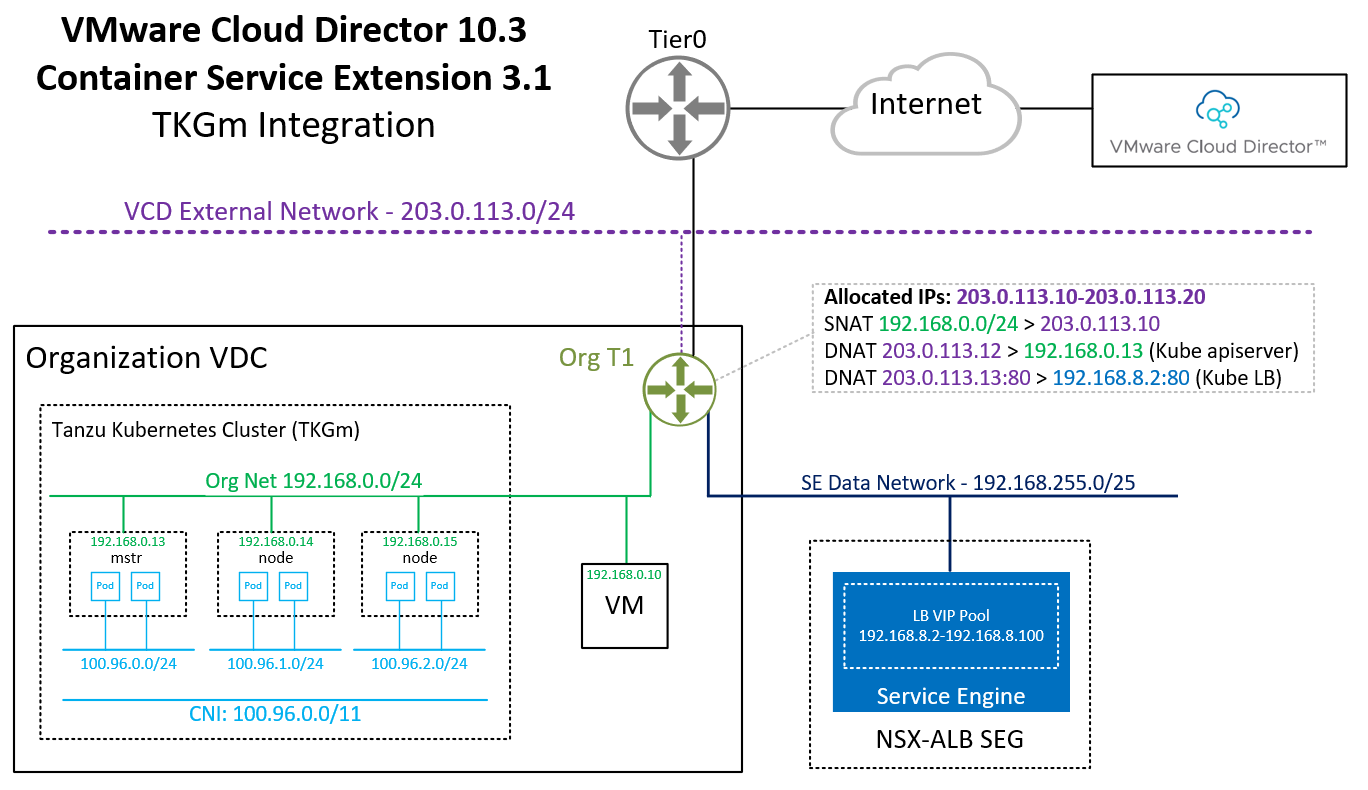

With Tanzu meeting VMware Cloud Director, Kubernetes Clusters have their own Tier1 Gateway. While it would be technically possible to implement routing between Tanzu and VCD Tier1s through Tier0, the typical Cloud Director Org Network is hidden behind a NAT. There is just no way to prevent overlapping networks when advertising Tier1 Routers to the upstream Tier0. The following diagram shows the VCD networking with Tanzu enabled.

With Cloud Director 10.2.2, VMware further optimized the implementation by automatically setting up Firewall Rules on the TKC Tier1 to only allow the tenants Org Networks to access Kubernetes services. They also published a guide on how customers could NAT their public IP addresses to TKC Ingress addressed to make them accessible from the Internet. The method is described here (see Publish Kubernetes Services using VCD Org Networks). Unfortunately, the need to communicate from Pods to Virtual Machines in VCD seems still not to be in VMware's scope.

While developing a decent solution by using Kubernetes Endpoints, I came up with a questionable workaround. While I highly doubt that these methods are supported and useful in production, I still want to share them, to show what actually could be possible.

Read More »Direct Org Network to TKC Network Communication in Cloud Director 10.2

This article recaps Issues that I had during the integration of VMware Container Service Extension 3.1 to allow the deployment of Tanzu Kubernetes Grid Clusters (TKGm) in VMware Cloud Director 10.3.

This article recaps Issues that I had during the integration of VMware Container Service Extension 3.1 to allow the deployment of Tanzu Kubernetes Grid Clusters (TKGm) in VMware Cloud Director 10.3.

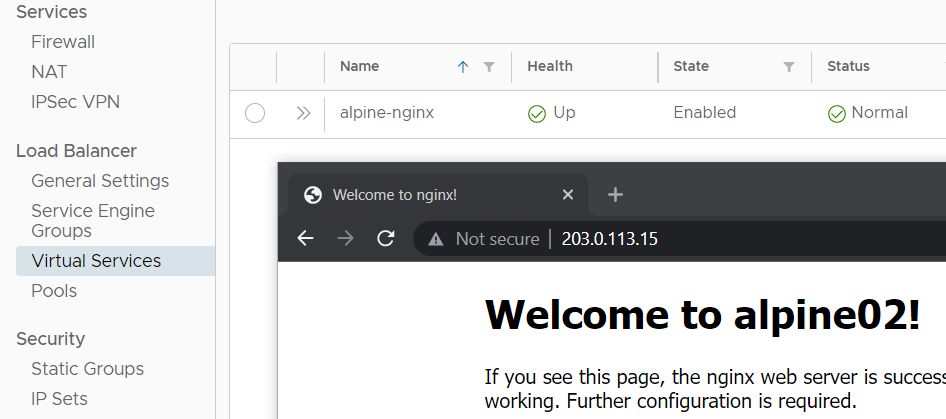

With the NSX Advanced Load Balancer integration in Cloud Director 10.2 or later, you can enable SSL offloading to secure your customer's websites. This article explains how to request a Let's Encrypt certificate, import it to VMware Cloud Director and enable SSL offloading in NSX-ALB. This allows tenants to publish websites in a secure manner.

With the NSX Advanced Load Balancer integration in Cloud Director 10.2 or later, you can enable SSL offloading to secure your customer's websites. This article explains how to request a Let's Encrypt certificate, import it to VMware Cloud Director and enable SSL offloading in NSX-ALB. This allows tenants to publish websites in a secure manner.